AI Therapy And The Surveillance State: Risks And Realities

Data Privacy and Security Concerns in AI Therapy

The allure of AI therapy lies in its accessibility and potential for personalized treatment. However, this convenience comes at a cost: our most intimate thoughts and feelings become digital data.

The Nature of Data Collected

AI therapy platforms collect vast amounts of sensitive personal information, including:

- Detailed mental health histories, including diagnoses and treatment plans.

- Personal relationships, family dynamics, and social interactions.

- Financial information, used for billing and insurance purposes.

- Location data, which can reveal where a user lives, works, or seeks care.

This data is highly vulnerable:

- Data breaches are a constant threat, potentially exposing highly sensitive personal information to malicious actors.

- Current data protection regulations are often inadequate to address the unique challenges posed by AI therapy. HIPAA, for example, applies only to covered entities such as healthcare providers and insurers and their business associates, so many direct-to-consumer AI therapy apps may fall outside its scope.

- Tech companies may aggregate and profile this data, creating detailed psychological profiles that could be used for targeted advertising or other purposes, raising serious ethical questions.

Recent breaches elsewhere in healthcare show what is at stake. A comparable breach of AI therapy records could be devastating, exposing users to identity theft, financial loss, and lasting damage to their reputation and well-being.

Algorithmic Bias and Discrimination in AI Therapy

AI algorithms are only as good as the data they are trained on. Unfortunately, this data often reflects existing societal biases.

The Problem of Biased Algorithms

Bias in training data can lead to discriminatory outcomes in AI therapy, disproportionately impacting certain demographic groups.

- AI systems may perpetuate existing societal biases related to race, gender, socioeconomic status, sexual orientation, and other factors.

- Algorithmic bias can lead to misdiagnosis, inadequate treatment plans, and even the exacerbation of pre-existing mental health conditions.

- The lack of transparency and accountability in algorithmic decision-making makes it difficult to identify and address these biases effectively.

Examples of algorithmic bias in other AI applications, such as facial recognition and loan approval systems, illustrate the potential for serious harm. In mental healthcare, such biases can have life-altering consequences.

The Erosion of Therapist-Patient Confidentiality

The cornerstone of effective therapy is trust and confidentiality. AI therapy, however, presents a significant challenge to this fundamental principle.

Data Sharing and Third-Party Access

AI therapy platforms may share data with various third parties, including:

- Insurance companies, for billing and coverage purposes.

- Employers, potentially impacting employment opportunities.

- Law enforcement agencies, raising concerns about involuntary disclosure.

This data sharing erodes the traditional therapeutic relationship:

- The legal and ethical implications of data sharing, particularly concerning informed consent, are complex and often unclear.

- The knowledge that sensitive personal information may be shared with third parties can undermine the trust necessary for effective therapy.

- Patients' data could be used against their interests, for example in employment decisions or legal proceedings.

The unexpected ways data from other digital platforms has been exploited, for targeted advertising or political manipulation, for instance, should serve as a cautionary tale about how information gathered through AI therapy could be misused.

The Potential for Manipulation and Control

The ability of AI to track, analyze, and predict human behavior raises concerns about potential manipulation and control.

Behavioral Modification and Surveillance

AI therapy could be used for behavioral modification and surveillance purposes:

- AI algorithms could track and monitor patient behavior, potentially leading to unintended consequences and a chilling effect on open communication.

- The potential exists for AI to influence patient choices and actions, raising questions about autonomy and free will.

- Constant monitoring and judgment could create a climate of fear and anxiety, further damaging mental well-being.

Examples of surveillance technologies and their impact on individual autonomy highlight the potential dangers of such applications in the context of mental healthcare.

Conclusion

The integration of AI into mental healthcare presents both opportunities and significant challenges, and AI therapy is inextricably linked to the risks of a surveillance state. The risks associated with data privacy breaches, algorithmic bias, erosion of confidentiality, and the potential for manipulation are substantial. Addressing these issues requires a multi-faceted approach.

We must advocate for stricter data protection regulations specifically tailored to AI therapy, demand greater transparency and accountability in the development and deployment of these platforms, and put ethical considerations ahead of technological advancement. We must also engage in critical discussions about the ethical implications of AI in mental health and promote responsible innovation. Learn more about the intersection of AI therapy and surveillance, and get involved in shaping its future responsibly. Only through careful consideration and proactive action can we harness the potential benefits of AI in mental healthcare while safeguarding individual rights and liberties.

Featured Posts

-

Stocks Surged Sensex Rises Top Bse Stocks Up Over 10

May 15, 2025

Stocks Surged Sensex Rises Top Bse Stocks Up Over 10

May 15, 2025 -

Padres Beat Athletics Clinch 10 Wins First In Mlb

May 15, 2025

Padres Beat Athletics Clinch 10 Wins First In Mlb

May 15, 2025 -

Everton Vina Coquimbo Unido Reporte Del Partido 0 0

May 15, 2025

Everton Vina Coquimbo Unido Reporte Del Partido 0 0

May 15, 2025 -

Lietuviai Neinvestuoti I Boston Celtics Klubas Parduotas Uz Rekordine Suma

May 15, 2025

Lietuviai Neinvestuoti I Boston Celtics Klubas Parduotas Uz Rekordine Suma

May 15, 2025 -

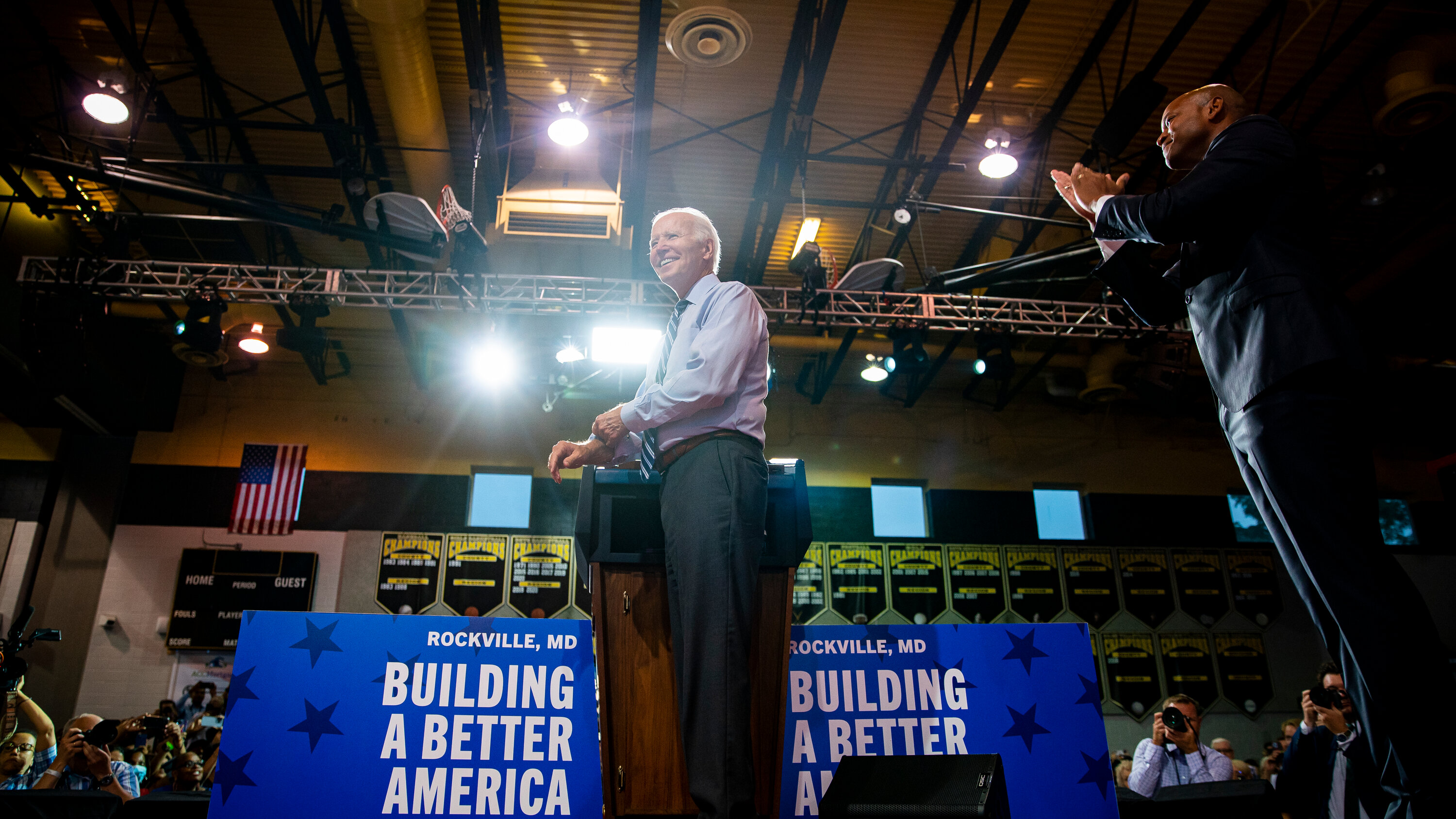

Examining President Bidens Responses To Allegations And Criticism

May 15, 2025

Examining President Bidens Responses To Allegations And Criticism

May 15, 2025