AI Therapy: Privacy Concerns And The Potential For Surveillance

Data Security and Breaches in AI Therapy Platforms

Mental health data is exceptionally sensitive. It reveals intimate details about a person's thoughts, feelings, and behaviors—information that, if compromised, could have devastating consequences. The heightened risk of breaches in AI therapy platforms stems from several factors. AI systems, like any technology, are vulnerable to hacking and data theft. The sheer volume of sensitive personal data collected and stored by these platforms creates a large target for cybercriminals.

- Examples of past data breaches in healthcare settings: High-profile healthcare data breaches have shown how costly inadequate security can be. Beyond identity theft and financial loss, they cause significant emotional distress for patients whose private medical information is exposed. In the 2020 breach of the Finnish psychotherapy provider Vastaamo, for example, therapy records were stolen and attackers attempted to extort patients directly.

- The vulnerabilities of AI platforms to hacking and data theft: AI therapy platforms often rely on cloud-based storage and interconnected systems, which widens their attack surface. Weak software security, missing or weak encryption, and insufficient user authentication can all create entry points for malicious actors.

- The potential consequences of a data breach for patients (identity theft, emotional distress): A data breach involving mental health information can lead to identity theft, financial fraud, and social stigma. The emotional impact on patients can be particularly severe, potentially exacerbating existing mental health conditions or triggering new ones.

- Lack of robust security measures in some AI therapy applications: Not all AI therapy applications prioritize data security to the same extent. Some may lack adequate encryption, skip regular security audits, or have no comprehensive incident response plan.

Algorithmic Bias and Discrimination in AI Therapy

AI algorithms learn from data. If that data reflects existing societal biases, the resulting models tend to reproduce those biases and can amplify them. This is a significant concern in AI therapy, where biased algorithms could lead to unfair or inaccurate diagnoses and treatment plans.

- Examples of algorithmic bias in other sectors and its potential impact on mental health: Algorithmic bias has been documented in sectors ranging from loan applications to criminal justice. In AI therapy, it could show up as biased diagnoses, inappropriate treatment recommendations, or unequal access to care based on factors like race, gender, or socioeconomic status.

- The lack of diversity in AI training datasets and its consequences: Many AI training datasets lack diversity, which produces algorithms that perform poorly or unfairly for certain demographic groups. This is particularly problematic in mental health, where different populations may face distinct challenges and require tailored interventions.

- The risk of perpetuating existing societal biases through AI therapy: AI therapy can inadvertently reinforce existing inequalities if it is not carefully designed and implemented. For example, an algorithm trained on data that primarily reflects the experiences of one demographic group may not accurately assess or treat individuals from other groups.

- The need for transparent and accountable AI algorithms: Transparency and accountability are crucial in mitigating algorithmic bias. Understanding how an AI algorithm arrives at its conclusions is vital for identifying and correcting biases.
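One simple way to make the per-group performance concern above concrete is to compare a model's accuracy across demographic groups. The following is a minimal sketch, not a complete fairness audit: the function names `accuracy_by_group` and `largest_gap`, the group labels, and the sample data are all hypothetical and invented for illustration.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for an iterable of (group, predicted, actual)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def largest_gap(per_group):
    """Difference between the best and worst per-group accuracy."""
    values = list(per_group.values())
    return max(values) - min(values)


# Made-up screening results, for illustration only: (group, predicted, actual)
sample = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 0, 1), ("group_b", 1, 1),
]

per_group = accuracy_by_group(sample)
gap = largest_gap(per_group)
```

A large gap between groups does not by itself prove discrimination, but it is a signal that the training data or the model deserves closer review. Accuracy is only one possible metric; a real audit would likely also look at error types such as false negatives per group.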

Keywords: AI bias, algorithmic bias, AI ethics, fairness in AI, AI discrimination

The Potential for Surveillance and Data Misuse in AI Therapy

The collection and storage of sensitive personal information by AI therapy platforms raise concerns about potential surveillance and data misuse by third parties. The lack of clear regulations regarding the use of this data further exacerbates these risks.

- The collection and storage of sensitive personal information: AI therapy platforms collect extensive data, including personal conversations, emotional expressions, and even physiological data if they are integrated with wearable sensors. This intimate data is highly valuable and vulnerable to misuse.

- The potential for data sharing with insurance companies, employers, or law enforcement: Sharing this sensitive data with third parties without explicit patient consent raises serious ethical and legal concerns. The data could be used for purposes unrelated to patient care, such as discrimination in employment or insurance.

- The lack of clear regulations regarding the use of AI therapy data: The regulatory landscape for AI therapy data is still evolving. Without clear guidelines and robust regulations, patients remain exposed to data misuse and privacy violations.

- The ethical implications of using AI therapy data for purposes beyond patient care: Using AI therapy data for research, marketing, or other purposes beyond patient care raises significant ethical questions about patient autonomy and informed consent.
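The consent concerns above can be expressed as a deny-by-default rule: data is shared only for purposes the patient has explicitly granted, and a grant can be withdrawn. The sketch below illustrates that idea only. `ConsentRecord`, `may_share`, `revoke`, and the purpose names are hypothetical and do not describe any particular platform or legal standard.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Purposes a patient has explicitly agreed to (hypothetical model)."""
    patient_id: str
    allowed_purposes: set = field(default_factory=set)


def may_share(consent: ConsentRecord, purpose: str) -> bool:
    """Deny by default: allow only purposes the patient explicitly granted."""
    return purpose in consent.allowed_purposes


def revoke(consent: ConsentRecord, purpose: str) -> None:
    """Withdraw consent for a purpose; a no-op if it was never granted."""
    consent.allowed_purposes.discard(purpose)


# Illustrative usage with made-up values
consent = ConsentRecord(patient_id="p-1001",
                        allowed_purposes={"treatment", "research"})
revoke(consent, "research")

can_treat = may_share(consent, "treatment")
can_research = may_share(consent, "research")
can_market = may_share(consent, "marketing")
```

The design choice worth noting is the default: a purpose that was never mentioned, such as marketing here, is refused rather than silently allowed.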

Mitigating Privacy Risks in AI Therapy

Mitigating the privacy risks associated with AI therapy requires a multi-faceted approach. This includes both technological solutions and robust regulatory frameworks.

- Strong encryption and data anonymization techniques: Strong encryption protocols and data anonymization techniques are crucial for protecting patient data from unauthorized access.

- Strict data governance policies and regulations: Clear and enforceable data governance policies are needed to ensure responsible data handling and prevent misuse. Robust regulations are necessary to establish clear boundaries and accountability.

- Transparent data usage agreements with patients: Patients should be told plainly how their data will be used and stored. Informed consent should be obtained before any data is collected or shared.

- Independent audits of AI therapy platforms: Regular independent audits of AI therapy platforms can help identify security vulnerabilities and ensure compliance with data protection standards.

- Promoting user control and data ownership: Patients should have control over their data, including the ability to access, correct, and delete their information.
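As one illustration of the anonymization item in the list above, the sketch below pseudonymizes a record by dropping direct identifiers and replacing the patient ID with a keyed HMAC-SHA256 digest from Python's standard library. The function names, field names, and sample record are hypothetical. Note that this is pseudonymization, which is weaker than full anonymization: the remaining fields may still allow re-identification, and anyone holding the key can link the digests back to patients. Encryption at rest and in transit is a separate measure that needs a vetted cryptography library and is not shown here.

```python
import hashlib
import hmac
import secrets


def pseudonymize(patient_id: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 hex digest."""
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_identifiers(record: dict, key: bytes,
                      direct_fields=("name", "email")) -> dict:
    """Copy a record, dropping direct identifiers and pseudonymizing the ID."""
    cleaned = {k: v for k, v in record.items() if k not in direct_fields}
    cleaned["patient_id"] = pseudonymize(record["patient_id"], key)
    return cleaned


# The key must be stored separately from the data (assumption: a secrets
# manager or similar); here it is generated in place for illustration only.
key = secrets.token_bytes(32)

record = {
    "patient_id": "p-1001",
    "name": "Example Person",
    "email": "person@example.com",
    "mood_score": 4,
}
safe = strip_identifiers(record, key)
```

Using a keyed hash rather than a plain hash matters because patient IDs are often short and guessable. Without the secret key, an attacker cannot simply hash candidate IDs and compare them against the stored values.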

Conclusion

AI therapy presents a transformative opportunity for mental healthcare, but it is crucial to address the significant privacy concerns and the potential for surveillance. The responsible development and implementation of AI therapy require a strong focus on data security, algorithmic fairness, and transparent data governance. If these issues go unaddressed, the potential benefits of AI therapy may be overshadowed by the risks to patient privacy and well-being.

Let's advocate for responsible innovation in AI therapy that prioritizes patient privacy and data security. We must demand greater transparency and accountability from developers and providers of AI therapy solutions so that this technology benefits everyone without compromising individual rights. Learn more about protecting your privacy in the age of AI therapy, and demand better data protection standards.

Featured Posts

-

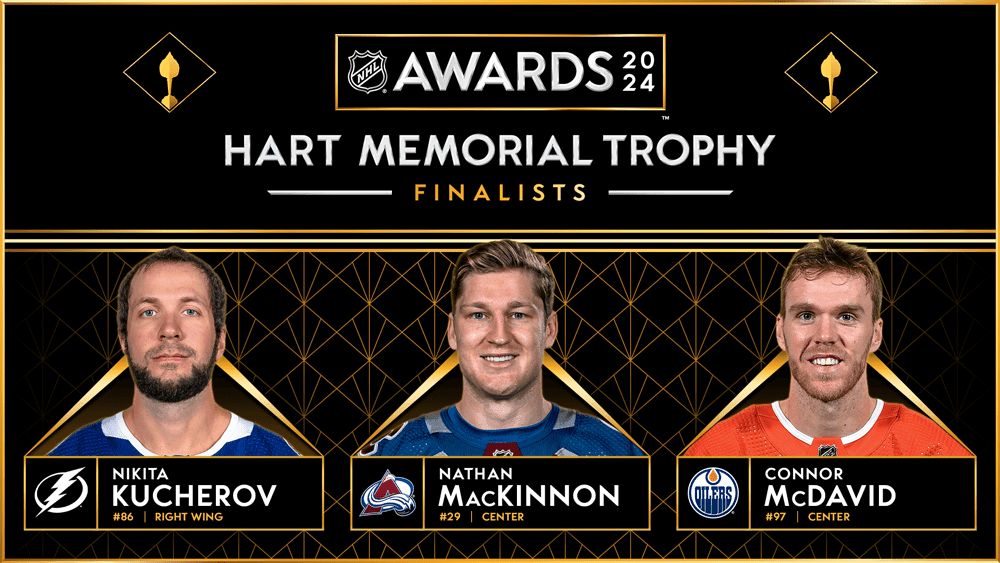

Analyzing The 2024 Hart Trophy Finalists Draisaitl Hellebuyck And Kucherov

May 16, 2025

Analyzing The 2024 Hart Trophy Finalists Draisaitl Hellebuyck And Kucherov

May 16, 2025 -

La Liga Hyper Motion Ver Almeria Eldense Online

May 16, 2025

La Liga Hyper Motion Ver Almeria Eldense Online

May 16, 2025 -

Ahy Buka Peluang Kerja Sama China Di Proyek Tembok Laut Raksasa

May 16, 2025

Ahy Buka Peluang Kerja Sama China Di Proyek Tembok Laut Raksasa

May 16, 2025 -

Star Wars Andor Tony Gilroys Perspective On The Series

May 16, 2025

Star Wars Andor Tony Gilroys Perspective On The Series

May 16, 2025 -

Investigating Water Contamination In Our Township

May 16, 2025

Investigating Water Contamination In Our Township

May 16, 2025