Algorithms, Radicalization, And Mass Violence: Who's To Blame?

The Role of Algorithms in Spreading Extremist Ideologies

Algorithms, the complex sets of rules that determine which content is delivered to users online, play a significant role in shaping what we see and how we interact online. When it comes to the spread of extremist ideologies, however, this influence can be profoundly harmful.

Echo Chambers and Filter Bubbles

Social media algorithms, designed to maximize user engagement, often create echo chambers and filter bubbles. These personalized content feeds limit exposure to diverse viewpoints, reinforcing pre-existing beliefs and isolating users within homogeneous online communities.

- Personalized feeds: Algorithms prioritize content aligning with a user's past behavior, leading to a skewed and potentially radicalized information diet.

- Lack of diverse perspectives: Users are less likely to encounter counterarguments or dissenting opinions, solidifying extremist viewpoints.

- Algorithmic opacity: Because these algorithms operate with little transparency, it is difficult to understand and address their contribution to online radicalization, or to hold the platforms that run them accountable.

Critics have pointed to numerous cases in which algorithms promoted radical content to susceptible individuals, inadvertently creating breeding grounds for extremism. The challenge lies in moderating such content effectively without stifling free speech, a delicate balance that requires ongoing research and development.

Targeted Advertising and Recruitment

Extremist groups strategically use algorithms for targeted advertising and online recruitment. By analyzing user data, these groups identify vulnerable individuals and deliver tailored messages designed to radicalize them.

- Profiling vulnerable individuals: Algorithms effectively identify individuals exhibiting certain online behaviors or expressing specific interests that align with extremist ideologies.

- Propaganda dissemination: Targeted ads and personalized content feeds facilitate the spread of extremist propaganda and recruitment materials to specific demographics.

- Campaign effectiveness: The precision of algorithmic targeting enhances the effectiveness of recruitment campaigns, making them a potent tool for extremist groups.

Understanding how these targeted campaigns operate is crucial for developing countermeasures and disrupting the recruitment pipelines of extremist organizations. This includes scrutinizing the use of algorithmic targeting by social media platforms.

The Responsibility of Tech Companies

Tech companies bear a significant responsibility in mitigating the harmful effects of their algorithms. However, the complexity of the issue and the potential conflicts of interest present substantial challenges.

Lack of Accountability and Oversight

The current regulatory landscape is largely inadequate to address the ethical and societal implications of algorithmic design, leaving platforms with little accountability for the content their algorithms amplify.

- Ethical responsibilities: Tech companies have a moral and social obligation to prevent the spread of extremist content on their platforms.

- Content moderation challenges: Moderating content at scale is a complex and resource-intensive task, and moderation efforts often fail to keep pace with the rapid spread of extremist materials.

- Need for transparency and regulation: Improving online safety requires greater transparency in how algorithms are designed and deployed, alongside stronger regulatory frameworks to ensure accountability.

The Profit Motive and Algorithmic Design

The pursuit of profit often conflicts with the imperative of ensuring online safety. Maximizing user engagement, a key metric for many tech companies, can inadvertently promote the spread of extremist content.

- Engagement vs. safety: Prioritizing user engagement over safety creates a potential conflict of interest, leading to algorithms that inadvertently amplify extremist viewpoints.

- Impact of engagement metrics: Algorithms optimized for engagement often prioritize sensational and emotionally charged content, which extremist groups frequently utilize.

- Ethical considerations: Tech companies must grapple with the ethical implications of their algorithmic design choices and prioritize the well-being of their users over profit maximization. This requires a commitment to corporate social responsibility.

The Individual's Role and Vulnerability

While algorithms contribute significantly to the problem, individual vulnerability and responsibility cannot be ignored.

Psychological Factors and Susceptibility

Certain psychological factors, such as social isolation, a sense of grievance, or a search for identity and belonging, make individuals more susceptible to extremist ideologies. Algorithms can exacerbate these vulnerabilities by creating echo chambers and reinforcing biased narratives.

- Exacerbating vulnerabilities: Algorithms can amplify pre-existing biases and vulnerabilities, making individuals more receptive to extremist messaging.

- Critical thinking and media literacy: Developing critical thinking skills and media literacy helps individuals navigate the complex information landscape of the internet and is essential to improving online safety.

Understanding these psychological factors is essential in developing targeted interventions and educational programs to promote resilience against online radicalization.

The Importance of Personal Responsibility

Individuals must take responsibility for critically evaluating online information and engaging in responsible online behavior.

- Responsible online behavior: Individuals should be mindful of the information they consume and share online, avoiding the uncritical acceptance of biased or extremist content.

- Self-awareness and critical analysis: Practicing self-awareness and critically analyzing the information encountered online strengthens information literacy and is crucial in combating online radicalization.

Conclusion: Algorithms, Radicalization, and Mass Violence: Shared Responsibility

The relationship between algorithms, radicalization, and mass violence is multifaceted and complex. It is not a simple case of assigning blame, but rather of understanding the responsibility shared among tech companies, individuals, and policymakers. While algorithms play a significant role in facilitating the spread of extremist ideologies, individual vulnerability and a lack of accountability across the board also contribute significantly to the problem. We must engage in critical discussions about the ethical implications of algorithms, advocate for greater transparency from tech companies and stronger regulation of them, and promote media literacy and critical thinking to combat online radicalization. Further research into how algorithms contribute to radicalization and mass violence is crucial to developing effective strategies to mitigate this growing threat. Let's work together to create a safer and more responsible online environment.

Featured Posts

-

Grigor Dimitrov Ochakvaniyata Za Rolan Garos 2024

May 31, 2025

Grigor Dimitrov Ochakvaniyata Za Rolan Garos 2024

May 31, 2025 -

German City Offers Free Two Week Accommodation To Attract New Residents

May 31, 2025

German City Offers Free Two Week Accommodation To Attract New Residents

May 31, 2025 -

How Provincial Governments Can Speed Up Homebuilding

May 31, 2025

How Provincial Governments Can Speed Up Homebuilding

May 31, 2025 -

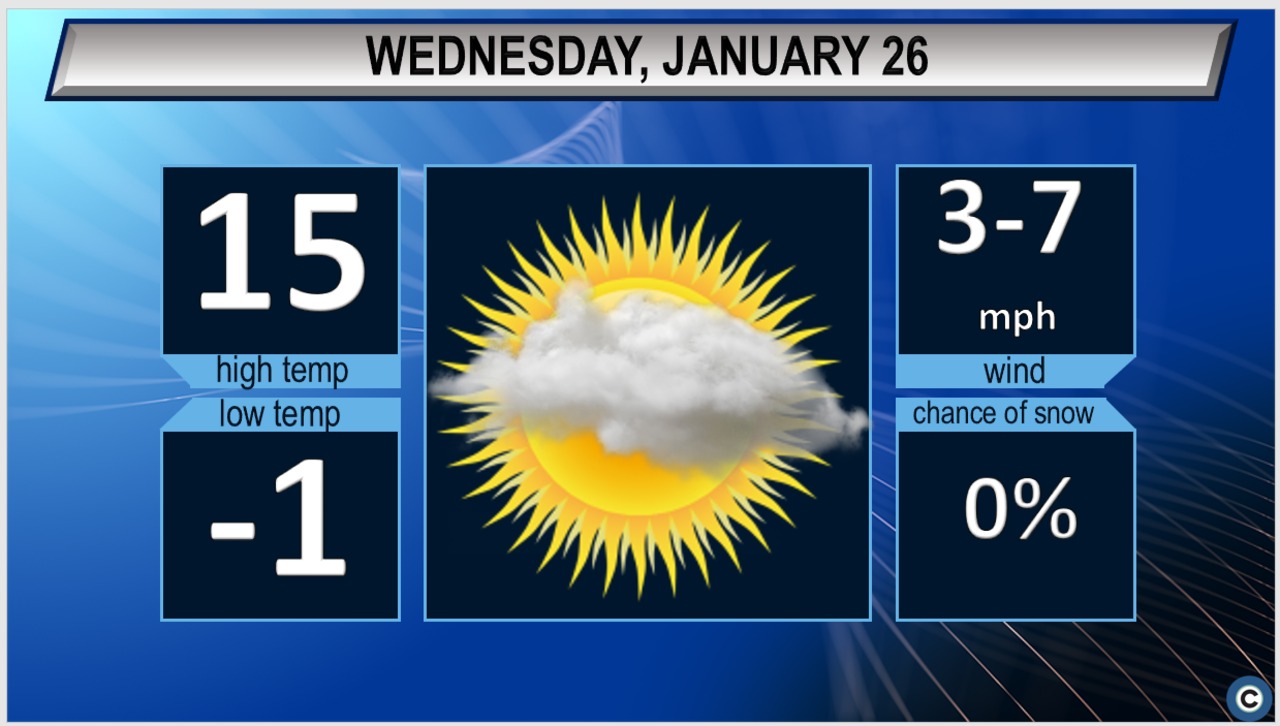

Clear Skies And Dry Weather Predicted For Northeast Ohio On Tuesday

May 31, 2025

Clear Skies And Dry Weather Predicted For Northeast Ohio On Tuesday

May 31, 2025 -

Tudor Pelagos Fxd Chrono Pink Everything We Know About The Release

May 31, 2025

Tudor Pelagos Fxd Chrono Pink Everything We Know About The Release

May 31, 2025