Building Voice Assistants Made Easy: OpenAI's Latest Tools And Technologies


OpenAI's Whisper API: Revolutionizing Speech-to-Text Conversion

OpenAI's Whisper API is a major step forward for speech-to-text conversion. It transcribes speech accurately across many languages and holds up well in noisy conditions. That simplifies the foundation of any voice assistant: turning what the user says into text the rest of the system can work with.

Accuracy and Efficiency

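Whisper is often more accurate than traditional speech-to-text solutions, largely because it was trained on a very large and diverse dataset (roughly 680,000 hours of multilingual audio, according to OpenAI). That diversity is what lets it handle a wide range of accents and dialects. The hosted API also runs the model on OpenAI's servers, so your own device needs few resources. Keep in mind that the `whisper-1` endpoint transcribes complete audio files rather than a live stream, so an assistant that needs a quick turnaround should send short utterances.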

- Multilingual Support: Transcribes speech in numerous languages, expanding the potential reach of your voice assistant.

- Robustness to Background Noise: Whisper does not apply a separate noise-cancellation stage, but its varied training data makes it comparatively tolerant of background noise, so transcriptions stay usable in less-than-ideal acoustic environments.

- Adaptability to Different Accents: Handles variations in pronunciation with high accuracy, catering to a diverse user base.

Whisper improves on older technologies by replacing multi-stage pipelines of separately trained acoustic and language models with a single end-to-end Transformer model trained on a large, varied body of audio. Because it has been exposed to so many different voices, it generalizes to new speakers without per-user enrollment or adaptation, which makes for a more seamless user experience when building voice assistants.
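Accuracy claims are easiest to check on your own recordings. A common metric is word error rate (WER): the word-level edit distance (substitutions + deletions + insertions) between a reference transcript and the model's output, divided by the number of words in the reference. The sketch below computes it with a standard dynamic-programming edit distance. The function name `word_error_rate` and the simple lowercase/whitespace normalization are assumptions for illustration; published benchmarks usually apply more elaborate text normalization before scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word error rate: (substitutions + deletions + insertions) / reference length.

    Normalization is deliberately simple (lowercase + whitespace split);
    real evaluations usually also strip punctuation and normalize numbers.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # Rolling single-row Levenshtein distance over words: at step i,
    # prev[j] is the distance between the first i-1 reference words
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


if __name__ == "__main__":
    reference = "set an alarm for seven am"
    hypothesis = "set alarm for seven pm"
    print(f"WER: {word_error_rate(reference, hypothesis):.3f}")  # WER: 0.333
```

In the example, the output drops "an" and mishears "am" as "pm": two errors over six reference words.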

Integrating Whisper into Your Voice Assistant

Integrating the Whisper API into your voice assistant project is surprisingly straightforward. OpenAI provides comprehensive documentation and numerous code examples to guide you through the process.

- Steps: Obtain an API key, send audio data to the API endpoint, and receive the transcribed text as a response.

- Libraries: Use OpenAI's official client libraries (for example, for Python and Node.js) or community libraries for other languages to simplify integration.

- Dependencies: Minimal dependencies are required, making the integration process efficient and streamlined.
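Under the hood, the three steps above amount to a single authenticated HTTP POST. The sketch below builds, but does not send, a `multipart/form-data` request for OpenAI's transcription endpoint using only the Python standard library, to make the moving parts visible: the `Authorization: Bearer` header carries the API key, the `file` field carries the audio, and the `model` field selects `whisper-1`. The optional `language` field (an ISO-639-1 code such as `"de"`) is a hint for multilingual input. The helper name `build_transcription_request`, the random boundary, and the generic `application/octet-stream` content type are illustrative choices; in practice a client library handles all of this for you.

```python
import urllib.request
import uuid
from typing import Optional

# OpenAI's speech-to-text (transcription) endpoint.
TRANSCRIPTION_URL = "https://api.openai.com/v1/audio/transcriptions"


def build_transcription_request(
    audio_bytes: bytes,
    filename: str,
    api_key: str,
    model: str = "whisper-1",
    language: Optional[str] = None,
) -> urllib.request.Request:
    """Build, but do not send, a multipart/form-data transcription request."""
    boundary = uuid.uuid4().hex  # random separator, very unlikely to occur in the audio
    fields = {"model": model}
    if language is not None:
        fields["language"] = language  # optional ISO-639-1 hint, e.g. "de"

    parts = []
    # Plain text form fields.
    for name, value in fields.items():
        parts.append(
            f"--{boundary}\r\n"
            f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
            f"{value}\r\n".encode()
        )
    # The audio itself goes in the "file" field, together with its filename.
    parts.append(
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n".encode()
        + audio_bytes
        + b"\r\n"
    )
    parts.append(f"--{boundary}--\r\n".encode())  # closing boundary

    return urllib.request.Request(
        TRANSCRIPTION_URL,
        data=b"".join(parts),
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
    )
```

Sending the request with `urllib.request.urlopen(req)` returns a JSON response whose `text` field contains the transcript.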

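The API's simple design and clear documentation make it accessible to developers of all skill levels. The following Python example uses the official `openai` package (version 1.x) to send a local file to the hosted `whisper-1` model. The client reads your key from the `OPENAI_API_KEY` environment variable; alternatively, pass `api_key="YOUR_API_KEY"` when creating the client:

```python
from openai import OpenAI

# Reads the API key from the OPENAI_API_KEY environment variable by default.
client = OpenAI()

# Upload the audio file to the hosted Whisper model and get the transcript back.
with open("audio.wav", "rb") as audio_file:
    transcript = client.audio.transcriptions.create(
        model="whisper-1",
        file=audio_file,
    )

print(transcript.text)
```

If you would rather run Whisper on your own hardware, the open-source `openai-whisper` package loads the model locally (for example, `whisper.load_model("base")`, or a larger model for higher accuracy) and needs no API key.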

Leveraging OpenAI's Language Models for Natural Language Understanding (NLU)

Once the user's voice is transcribed, understanding the intent behind their words is crucial. OpenAI's large language models (LLMs) excel at Natural Language Understanding (NLU), allowing your voice assistant to interpret complex commands and nuanced requests.

Understanding User Intent

OpenAI's LLMs empower your voice assistant to go beyond simple keyword matching. They provide sophisticated capabilities for understanding the context and intent within user requests.

- Intent Recognition: Accurately identifies the user's goal, such as setting an alarm, playing music, or searching for information.

- Entity Extraction: Extracts relevant information from the user's request, like specific times, locations, or names.

- Sentiment Analysis: Determines the emotional tone of the user's request, allowing for more empathetic and nuanced responses.

Together, these capabilities make a voice assistant feel far more natural and intuitive: instead of forcing users to memorize rigid command phrases, it can handle requests phrased the way people actually speak, which is what makes the experience genuinely conversational.
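One common way to get intent, entities, and sentiment out of a general-purpose LLM is to ask for a structured JSON reply and validate it before acting on it. The sketch below assumes a hypothetical `complete(messages) -> str` function standing in for whatever chat-completion call you use, so the prompt construction and validation logic can be run and tested offline. The intent list, the JSON keys, and the helper names (`build_nlu_messages`, `parse_nlu_reply`, `understand`) are illustrative assumptions, not part of any OpenAI API.

```python
import json
from typing import Callable, Dict, List

# Hypothetical set of intents this assistant supports.
ALLOWED_INTENTS = {"set_alarm", "play_music", "search", "unknown"}
ALLOWED_SENTIMENTS = {"positive", "neutral", "negative"}

SYSTEM_PROMPT = (
    "You are the language-understanding module of a voice assistant. "
    "Reply with JSON only, using the keys: "
    '"intent" (one of: ' + ", ".join(sorted(ALLOWED_INTENTS)) + "), "
    '"entities" (an object mapping entity names to string values), and '
    '"sentiment" (one of: positive, neutral, negative).'
)

Message = Dict[str, str]


def build_nlu_messages(transcript: str) -> List[Message]:
    """Wrap the transcribed user utterance in a chat-style message list."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": transcript},
    ]


def parse_nlu_reply(reply: str) -> dict:
    """Validate the model's JSON reply, falling back to 'unknown' on bad output."""
    fallback = {"intent": "unknown", "entities": {}, "sentiment": "neutral"}
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return fallback
    if not isinstance(data, dict) or data.get("intent") not in ALLOWED_INTENTS:
        return fallback
    entities = data.get("entities")
    if not isinstance(entities, dict):
        entities = {}  # ignore malformed entity payloads rather than crash
    sentiment = data.get("sentiment")
    if sentiment not in ALLOWED_SENTIMENTS:
        sentiment = "neutral"
    return {"intent": data["intent"], "entities": entities, "sentiment": sentiment}


def understand(transcript: str, complete: Callable[[List[Message]], str]) -> dict:
    """Run one NLU pass: prompt the model via `complete`, then validate its reply."""
    return parse_nlu_reply(complete(build_nlu_messages(transcript)))
```

With the OpenAI SDK, `complete` could be a small wrapper that passes `messages` to `client.chat.completions.create(...)` and returns `response.choices[0].message.content`. Validating the reply keeps a malformed or unexpected answer from triggering the wrong action.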

Generating Natural-Sounding Responses

OpenAI's models not only understand user intent but also generate human-like responses. This capability is key to creating engaging and user-friendly voice assistants.

- Conversational AI: Enables natural back-and-forth interactions, making the user experience more fluid and enjoyable.

- Context Tracking: Keeps responses coherent and relevant across a conversation. The chat API itself is stateless, so your application must resend the relevant earlier messages with each request.

- Dialogue Management: Handles complex conversations with multiple turns, ensuring a smooth and natural flow of interaction.
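Because the chat-completion API does not remember earlier requests, context tracking is the application's job: each call resends the relevant history. A minimal sketch, again assuming a hypothetical `complete(messages) -> str` callable, keeps a system prompt plus a bounded window of recent turns so requests do not grow without limit. The `Conversation` class and its `max_turns` cutoff are illustrative assumptions; production systems often trim by token count or summarize older turns instead.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class Conversation:
    """Keeps chat history on the client for a stateless chat-completion backend."""

    def __init__(self, system_prompt: str, max_turns: int = 10) -> None:
        if max_turns < 1:
            raise ValueError("max_turns must be at least 1")
        self.system: Message = {"role": "system", "content": system_prompt}
        self.history: List[Message] = []  # alternating user / assistant messages
        self.max_turns = max_turns  # one turn = one user message + one assistant reply

    def messages(self) -> List[Message]:
        """The system prompt followed by the retained history."""
        return [self.system] + self.history

    def ask(self, user_text: str, complete: Callable[[List[Message]], str]) -> str:
        """Send the new utterance along with prior context and record the reply."""
        pending = {"role": "user", "content": user_text}
        reply = complete(self.messages() + [pending])
        # Only commit the turn once the backend has answered, so a failed
        # request never leaves an unanswered user message in the history.
        self.history.append(pending)
        self.history.append({"role": "assistant", "content": reply})
        # Drop the oldest turns (in user/assistant pairs) beyond the window.
        excess = len(self.history) - 2 * self.max_turns
        if excess > 0:
            del self.history[:excess]
        return reply
```

For example, a follow-up such as "make it 7:30 instead" only makes sense to the model if the earlier "set an alarm for seven" turn is still part of `messages()`.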

Tools and Resources for Building Voice Assistants with OpenAI

OpenAI provides a wealth of resources to aid developers in building their voice assistants. The comprehensive documentation and supportive community make the entire process more accessible.

OpenAI's Documentation and Community Support

OpenAI's documentation is exceptionally thorough, providing clear explanations, code examples, and tutorials. The active community forum offers a platform for collaboration, troubleshooting, and knowledge sharing.

- API Documentation: Detailed API reference guides provide comprehensive information on each function and parameter.

- Code Examples: Numerous code examples in various programming languages showcase different aspects of the APIs.

- Community Forums: A vibrant online community provides a space for developers to ask questions, share insights, and collaborate on projects.

Participating in the OpenAI community can help you work through problems and speed up development when building voice assistants.

Third-Party Integrations and Libraries

Many third-party tools and libraries work alongside OpenAI's APIs to extend your voice assistant's functionality.

- Speech Synthesis Libraries: Text-to-speech (TTS) libraries convert the assistant's text replies into spoken audio.

- Natural Language Processing (NLP) Tools: Tools that extend the capabilities of the LLMs for advanced language understanding.

- Cloud Platforms: Cloud services provide scalable infrastructure for deploying your voice assistant.

Leveraging these pre-built components can drastically reduce development time and streamline the entire process.
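Putting the pieces together, a voice assistant is essentially a pipeline: speech-to-text (for example Whisper), language understanding and response generation (an LLM), and speech synthesis (a TTS library or service). The sketch below models each stage as a pluggable component using `typing.Protocol`, so a cloud API, a local model, or a test stub can be swapped in without changing the orchestration code. The interface names (`SpeechToText`, `Responder`, `TextToSpeech`), the `VoiceAssistant` class, and the stub components are hypothetical.

```python
from dataclasses import dataclass
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class Responder(Protocol):
    def respond(self, text: str) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


@dataclass
class VoiceAssistant:
    stt: SpeechToText
    responder: Responder
    tts: TextToSpeech

    def handle(self, audio: bytes) -> bytes:
        """Audio in, audio out: transcribe, generate a reply, synthesize it."""
        text = self.stt.transcribe(audio).strip()
        if not text:
            # Nothing intelligible was heard; ask the user to repeat.
            reply = "Sorry, I didn't catch that."
        else:
            reply = self.responder.respond(text)
        return self.tts.synthesize(reply)


# Stub components for local testing; real ones would call Whisper, an LLM, and a TTS engine.
class EchoSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode()


class UpperResponder:
    def respond(self, text: str) -> str:
        return text.upper()


class BytesTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode()


if __name__ == "__main__":
    assistant = VoiceAssistant(EchoSTT(), UpperResponder(), BytesTTS())
    print(assistant.handle(b"hello"))  # b'HELLO'
```

Keeping the stages behind small interfaces also makes it straightforward to move one stage to a different provider or cloud platform later.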

Conclusion

Building voice assistants has become significantly easier and more efficient thanks to OpenAI's powerful tools and technologies. The combination of Whisper's accurate speech-to-text capabilities and the robust natural language processing prowess of OpenAI's LLMs provides a powerful foundation for developing cutting-edge voice interfaces. The readily available documentation, supportive community, and integration with third-party tools further streamline the development process.

Ready to revolutionize your voice assistant development? Dive into OpenAI's powerful tools today and experience the ease and efficiency of building cutting-edge voice interfaces. Start building your voice assistant now!

Featured Posts

-

Red Sox Vs Blue Jays Lineups Walker Buehlers Start And Outfielders Return

Apr 28, 2025

Red Sox Vs Blue Jays Lineups Walker Buehlers Start And Outfielders Return

Apr 28, 2025 -

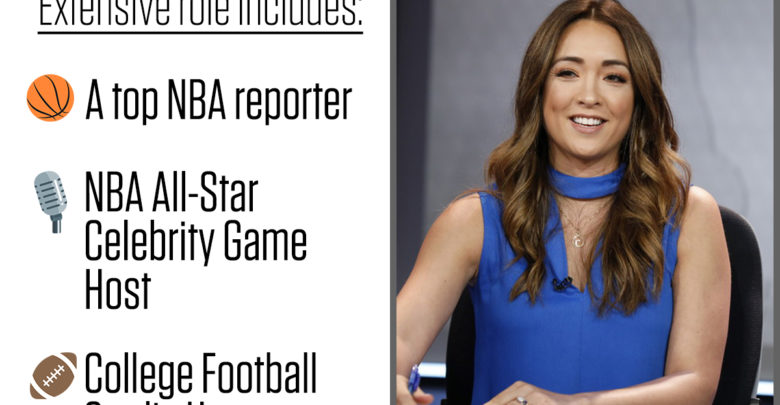

Cassidy Hubbarths Espn Legacy Celebrated In Final Broadcast

Apr 28, 2025

Cassidy Hubbarths Espn Legacy Celebrated In Final Broadcast

Apr 28, 2025 -

Wallace Misses Out On Second At Martinsville Due To Final Restart

Apr 28, 2025

Wallace Misses Out On Second At Martinsville Due To Final Restart

Apr 28, 2025 -

Monstrous Beauty A Feminist Reimagining Of Chinoiserie At The Met

Apr 28, 2025

Monstrous Beauty A Feminist Reimagining Of Chinoiserie At The Met

Apr 28, 2025 -

127 Years Of Brewing History Ends Anchor Brewing Companys Closure

Apr 28, 2025

127 Years Of Brewing History Ends Anchor Brewing Companys Closure

Apr 28, 2025