Building Voice Assistants: OpenAI's New Tools Unveiled

OpenAI's Whisper API: Revolutionizing Speech-to-Text

OpenAI's Whisper API brings accurate, efficient speech-to-text to developers, making it a strong option for integrating voice recognition into a wide range of applications.

- Exceptional Accuracy: Whisper transcribes speech accurately across many languages and handles a wide range of accents and dialects, which makes it suitable for products with a global audience.

- Robust Noise Handling: Whisper copes well with background noise. This is crucial for real-world applications, where clean audio is rarely achievable.

- Cost-Effective Solution: The Whisper API offers a cost-effective way to add high-quality speech-to-text to your projects, with usage-based pricing that scales with demand.

- Wide-Ranging Applications: Whisper can power customer service chatbots and virtual assistants, support medical transcription, and feed speech translation workflows.

Whisper's technical strength lies in its deep learning architecture, trained on a massive dataset of multilingual, multitask audio. This allows it not only to transcribe speech accurately but also to translate speech into English and to attach timestamps to the segments it transcribes. Whisper does not identify individual speakers on its own, so labeling who said what requires a separate diarization step. OpenAI provides extensive documentation to guide developers through the implementation process.
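For orientation, here is a minimal sketch of a transcription call followed by timestamp formatting. It assumes the official `openai` Python package, whose client exposes `audio.transcriptions.create` with the `whisper-1` model; the client is passed in as a parameter so the helper can be exercised without network access. The helper names `transcribe_file` and `format_segments` are illustrative, and the segment fields (`start`, `end`, `text`) assume the `verbose_json` response format.

```python
from typing import Any, Iterable, List, Optional


def transcribe_file(client: Any, path: str, language: Optional[str] = None) -> Any:
    """Send one audio file to the transcription endpoint.

    `client` is assumed to be an `openai.OpenAI()` instance, or any object
    exposing the same `audio.transcriptions.create` method.
    """
    kwargs = {"model": "whisper-1", "response_format": "verbose_json"}
    if language is not None:
        kwargs["language"] = language  # ISO-639-1 code such as "en"
    with open(path, "rb") as audio:
        return client.audio.transcriptions.create(file=audio, **kwargs)


def _clock(seconds: float) -> str:
    """Render a number of seconds as MM:SS.mmm."""
    total_ms = round(seconds * 1000)
    minutes, rest_ms = divmod(total_ms, 60_000)
    secs, ms = divmod(rest_ms, 1000)
    return f"{minutes:02d}:{secs:02d}.{ms:03d}"


def _field(segment: Any, name: str) -> Any:
    """Read a field from a segment given as a dict or as an object."""
    return segment[name] if isinstance(segment, dict) else getattr(segment, name)


def format_segments(segments: Iterable[Any]) -> List[str]:
    """Turn timestamped segments into readable transcript lines."""
    return [
        f"[{_clock(_field(s, 'start'))} -> {_clock(_field(s, 'end'))}] "
        f"{_field(s, 'text').strip()}"
        for s in segments
    ]
```

In a real application the client would be created with `openai.OpenAI()` and an API key, and the returned segments passed to `format_segments` to display or store the transcript.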

Fine-tuning Language Models for Conversational AI

Building engaging, truly conversational AI requires more than accurate speech recognition. This is where OpenAI's fine-tuning capabilities for language models come into play.

- Leveraging Pre-trained Models: Developers can use powerful pre-trained models such as GPT-3 and GPT-4 as a foundation for their voice assistants. These models provide a robust base of language understanding and generation capabilities.

- Customization through Fine-tuning: The real power lies in fine-tuning these pre-trained models with custom data. This allows developers to tailor the conversational style, personality, and knowledge base of their AI to match their needs.

- Contextual Awareness: Natural conversations depend on context. The model can draw on earlier turns in the dialogue when they are sent along with each request, and fine-tuning on multi-turn examples can help it use that history more consistently, leading to more natural and engaging interactions.

- Addressing Conversational Challenges: Challenges such as maintaining context across multiple turns and handling unexpected user inputs are addressed through careful data selection and training techniques.
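Chat-style APIs are generally stateless, so carrying context across turns usually means resending recent messages with every request. The sketch below shows one simple way to do that with a rolling buffer. The class name `ConversationHistory` and the `max_turns` limit are hypothetical choices for illustration; the `role`/`content` message shape follows the convention used by OpenAI's chat models.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


class ConversationHistory:
    """Keep the most recent turns and rebuild the message list per request."""

    def __init__(self, system_prompt: str, max_turns: int = 10) -> None:
        self.system_prompt = system_prompt
        # One turn is a (user text, assistant text) pair; the deque drops
        # the oldest turn automatically once max_turns is exceeded.
        self._turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def add_turn(self, user_text: str, assistant_text: str) -> None:
        """Record a completed exchange."""
        self._turns.append((user_text, assistant_text))

    def build_messages(self, new_user_text: str) -> List[Dict[str, str]]:
        """Return system prompt + remembered turns + the new user message."""
        messages = [{"role": "system", "content": self.system_prompt}]
        for user_text, assistant_text in self._turns:
            messages.append({"role": "user", "content": user_text})
            messages.append({"role": "assistant", "content": assistant_text})
        messages.append({"role": "user", "content": new_user_text})
        return messages
```

Capping the number of turns is a deliberately crude way to stay within a model's context window; a production assistant might summarize older turns instead of dropping them.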

By using OpenAI's tools, developers can craft unique conversational AI personalities for their voice assistants. The process involves selecting a suitable base model, preparing a high-quality dataset reflecting the desired conversational style, and then iteratively fine-tuning the model to achieve optimal performance. The quality and quantity of training data are crucial for achieving natural and fluent dialogue.
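The dataset-preparation step can be sketched as follows. OpenAI's fine-tuning for chat models expects a JSONL file in which each line is an object with a `messages` list; everything else here is an assumption for illustration, including the helper names and the validation rules, which are simplified (for example, only three roles are accepted).

```python
import json
from typing import Dict, Iterable, List

# Simplified set of roles accepted by this sketch.
ALLOWED_ROLES = {"system", "user", "assistant"}


def to_training_example(system_prompt: str, user_text: str, assistant_text: str) -> Dict:
    """Build one single-turn training example in chat format."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_text},
            {"role": "assistant", "content": assistant_text},
        ]
    }


def validate_example(example: Dict) -> List[str]:
    """Return a list of problems found; an empty list means the example passed."""
    messages = example.get("messages")
    if not isinstance(messages, list) or not messages:
        return ["'messages' must be a non-empty list"]
    problems = []
    for i, message in enumerate(messages):
        if message.get("role") not in ALLOWED_ROLES:
            problems.append(f"message {i}: unexpected role {message.get('role')!r}")
        if not str(message.get("content", "")).strip():
            problems.append(f"message {i}: empty content")
    if messages[-1].get("role") != "assistant":
        problems.append("last message should be the assistant reply")
    return problems


def write_jsonl(examples: Iterable[Dict], path: str) -> int:
    """Write validated examples one per line; return how many were written."""
    count = 0
    with open(path, "w", encoding="utf-8") as out:
        for example in examples:
            problems = validate_example(example)
            if problems:
                raise ValueError("; ".join(problems))
            out.write(json.dumps(example, ensure_ascii=False) + "\n")
            count += 1
    return count
```

Validating before writing catches malformed examples locally, which is cheaper than discovering them after uploading the file for a training job.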

Advanced Features for Enhanced User Experience

Building a truly exceptional voice assistant requires more than basic conversation. Advanced features are key to creating a positive user experience.

- Intent Recognition: Accurately understanding user intent is paramount. OpenAI's language models can be used to build robust intent recognition, allowing the voice assistant to interpret user requests correctly even when the phrasing varies.

- Natural Language Generation: The ability to generate natural, human-like responses is crucial for a positive user experience. OpenAI's language models excel at this, producing fluent and engaging conversational output.

- Personalization: Tailoring responses to past interactions and individual preferences can significantly enhance user satisfaction. OpenAI's tools let developers build personalization features that make the voice assistant feel tailored to each user.

- Proactive Assistance: Anticipating user needs and offering help before it is requested is a hallmark of a truly intelligent voice assistant, and it can significantly improve efficiency and user satisfaction.
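One common way to approach intent recognition with a language model is to ask it to pick a label from a fixed set and then validate its reply. The sketch below shows only the prompt construction and the reply parsing, so it runs without any API call. The intent labels and the function names `build_intent_messages` and `parse_intent` are hypothetical.

```python
from typing import Dict, List, Sequence

# Hypothetical intents for an example assistant.
INTENTS = ("set_timer", "play_music", "get_weather")


def build_intent_messages(utterance: str, intents: Sequence[str] = INTENTS) -> List[Dict[str, str]]:
    """Build chat messages asking the model to classify one utterance."""
    labels = ", ".join(intents)
    system = (
        "Classify the user's request into exactly one of these intents: "
        f"{labels}. Reply with the intent label only. "
        "If none fits, reply: unknown."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": utterance},
    ]


def parse_intent(reply: str, intents: Sequence[str] = INTENTS) -> str:
    """Normalize the model's reply and reject anything outside the label set."""
    label = reply.strip().strip(".\"'").lower()
    return label if label in intents else "unknown"
```

Treating any unexpected reply as `unknown` keeps the assistant from acting on a label the model invented, at the cost of occasionally asking the user to rephrase.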

OpenAI's Ecosystem for Voice Assistant Development

OpenAI provides a comprehensive ecosystem to support developers throughout the entire process of building voice assistants.

- Comprehensive Documentation: OpenAI offers detailed documentation and tutorials, making it easier for developers of all skill levels to get started.

- Active Community Support: A vibrant developer community provides a place for collaboration, troubleshooting, and sharing best practices.

- Seamless Integration: OpenAI's services are exposed through a common API, which simplifies the development workflow and makes it easier to combine several OpenAI tools in one application.

- Testing and Deployment: OpenAI provides tools and resources to help developers test and deploy their voice assistant applications.
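To make the integration point concrete, here is a rough sketch of how the stages of a voice assistant might be wired together. It is an assumed architecture, not OpenAI's: the `VoicePipeline` class and its three injected callables (speech-to-text, reply generation, speech synthesis) are hypothetical, and each callable could wrap whichever service is used in practice.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    """Chain three stages: audio in, text reply, audio out."""

    transcribe: Callable[[bytes], str]   # e.g. a wrapper around a speech-to-text call
    respond: Callable[[str], str]        # e.g. a wrapper around a language model call
    synthesize: Callable[[str], bytes]   # e.g. a wrapper around a text-to-speech call

    def handle(self, audio: bytes) -> bytes:
        """Process one user utterance and return the spoken reply."""
        text = self.transcribe(audio)
        if not text.strip():
            # Nothing intelligible was heard, so skip the language model.
            return self.synthesize("Sorry, I didn't catch that.")
        return self.synthesize(self.respond(text))
```

Injecting the stages as plain callables makes the pipeline easy to test with stand-ins before any real service is connected.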

Conclusion

OpenAI's new tools significantly lower the barrier to entry for building sophisticated voice assistants. By combining the Whisper API with advanced language models, developers can create accurate, engaging, and personalized voice experiences, and the surrounding ecosystem further simplifies the development process. Start building your own voice assistant with OpenAI's tools and contribute to the future of voice interaction.

Featured Posts

-

The Shift In Brazils Auto Industry Byds Electric Vehicle Push And Fords Retreat

May 13, 2025

The Shift In Brazils Auto Industry Byds Electric Vehicle Push And Fords Retreat

May 13, 2025 -

Megan Thee Stallion Seeks Contempt Charges Against Tory Lanez

May 13, 2025

Megan Thee Stallion Seeks Contempt Charges Against Tory Lanez

May 13, 2025 -

A Szerelem Moegoett 5 1 Filmes Par Akik Titkolt Ellensegeskedest Folytattak A Forgatason

May 13, 2025

A Szerelem Moegoett 5 1 Filmes Par Akik Titkolt Ellensegeskedest Folytattak A Forgatason

May 13, 2025 -

The Wonder Of Animals From Tiny Insects To Majestic Mammals

May 13, 2025

The Wonder Of Animals From Tiny Insects To Majestic Mammals

May 13, 2025 -

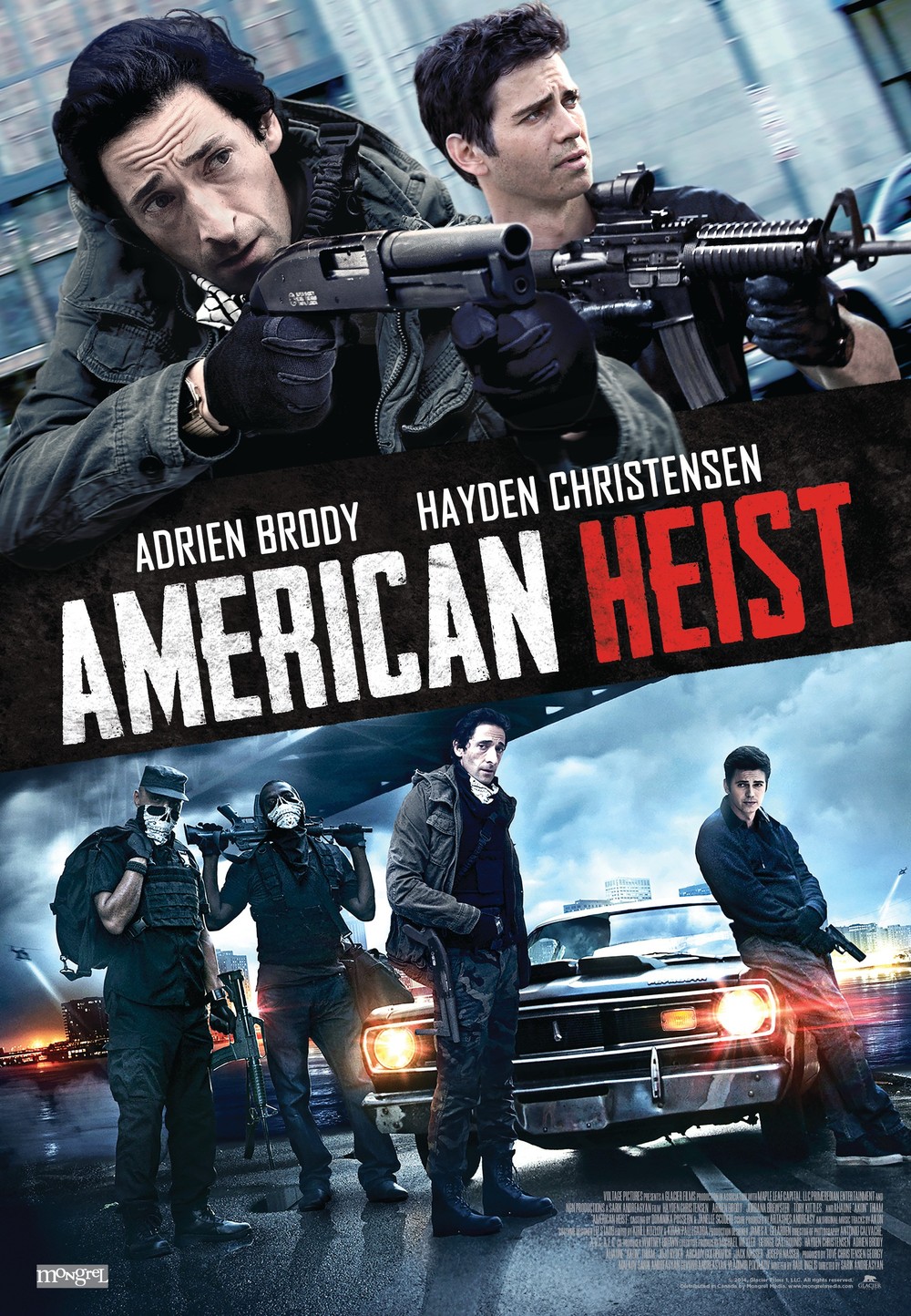

Amazon Primes New Heist Film A Sequel To The Beloved Classic

May 13, 2025

Amazon Primes New Heist Film A Sequel To The Beloved Classic

May 13, 2025

Latest Posts

-

Budapest Tommy Fury Visszavag Jake Paulnak Kepek

May 14, 2025

Budapest Tommy Fury Visszavag Jake Paulnak Kepek

May 14, 2025 -

Tommy Fury Budapest Eles Valasz Jake Paulnak Exkluziv Fotok

May 14, 2025

Tommy Fury Budapest Eles Valasz Jake Paulnak Exkluziv Fotok

May 14, 2025 -

Former Jake Paul Rival Mocks Anthony Joshua Fight Claims Paul Responds

May 14, 2025

Former Jake Paul Rival Mocks Anthony Joshua Fight Claims Paul Responds

May 14, 2025 -

Jake Paul Vs Tommy Fury Budapesten Folytatodik A Rivalizalas

May 14, 2025

Jake Paul Vs Tommy Fury Budapesten Folytatodik A Rivalizalas

May 14, 2025 -

Tommy Fury Budapesten Valasz Jake Paulnak Fotok

May 14, 2025

Tommy Fury Budapesten Valasz Jake Paulnak Fotok

May 14, 2025