Character AI Chatbots And Free Speech: A Legal Grey Area

The First Amendment and AI-Generated Content

Defining "Speech" in the Age of AI

The First Amendment to the US Constitution protects free speech, but does this protection extend to AI-generated content? The question is crucial, and the law has not yet settled it. The difficulty lies in the nature of AI-generated text, which blurs the line between human and machine expression.

- Attributing Authorship to AI: Can an algorithm be considered an "author" in the same way a human is? Current legal frameworks are largely unprepared for this scenario. Determining responsibility for AI-generated content requires a nuanced understanding of the technology and its limitations.

- Human Intervention and Shaping Responses: While AI generates the text, human developers design the algorithms and parameters that guide the chatbot's responses. The degree of human intervention significantly influences the content produced, impacting the discussion around free speech implications.

- Blurring Lines Between Human and AI Expression: The sophistication of modern Character AI chatbots makes it increasingly difficult to distinguish between AI-generated text and human-written content. This makes the application of existing legal precedents challenging. Determining where human agency ends and AI autonomy begins is key to understanding the free speech implications.

Character AI Chatbots and the Potential for Harm

Dissemination of Misinformation and Hate Speech

Character AI chatbots can be misused, and the spread of harmful content is a significant concern. Their ability to generate large volumes of text quickly makes them powerful tools for spreading misinformation and hate speech and for carrying out online harassment.

- Examples of Potential Misuse: Chatbots can be used to create and disseminate fake news articles, generate convincing phishing emails, or produce targeted hate speech at scale. The ease with which this can be done poses a serious threat to individuals and society.

- Platform Responsibility and Content Moderation: Platforms hosting Character AI chatbots bear a significant responsibility for moderating the content generated by these tools. However, identifying and removing harmful content generated by AI presents unique challenges due to the dynamic nature of AI-generated text.

- Challenges of Identifying and Removing Harmful Content: AI-generated content can be incredibly diverse and rapidly evolving, making it difficult for automated systems to consistently identify and remove harmful material. Human oversight and sophisticated AI detection systems are essential to address this challenge.

Legal Liability and Responsibility

Determining Accountability for AI-Generated Content

When a Character AI chatbot generates harmful content, who is responsible? Determining accountability is a complex legal challenge. Existing legal frameworks are still grappling with the novel issues raised by AI.

- Potential Liability of Character AI Developers: Developers are responsible for designing and implementing the AI's algorithms and safeguards. Their role in mitigating the risks associated with harmful AI-generated content is crucial, and potential liability needs to be defined.

- Liability of Users: Users who misuse Character AI chatbots to generate and distribute harmful content also bear some responsibility. Defining the extent of user liability is critical for deterring harmful behavior.

- Platform Responsibility and Negligence: Platforms hosting the chatbots might be held liable for negligence if they fail to take reasonable steps to mitigate the risks associated with harmful content generation. Existing precedents related to online platforms and content moderation are likely to be applied.

Regulation and the Future of Character AI Chatbots

Balancing Free Speech with Responsible AI Development

Balancing free speech with responsible AI development requires a carefully constructed regulatory environment, one that addresses the potential harms while safeguarding fundamental rights.

- Potential Regulatory Approaches: Options include developing content moderation guidelines, implementing transparency requirements for AI algorithms, and establishing ethical development frameworks. International cooperation will be essential.

- Content Moderation Guidelines: Clear guidelines are needed to delineate acceptable and unacceptable AI-generated content, considering both free speech protections and the need to mitigate harm.

- Transparency Requirements: Mandating transparency in AI algorithms would enable greater scrutiny of how AI models generate content and facilitate the development of better content moderation strategies.

- Ethical AI Development Frameworks: Promoting the development of AI systems that prioritize safety, fairness, and accountability is crucial. This requires collaboration between developers, policymakers, and ethicists.

Conclusion

Character AI chatbots pose a complex challenge to our understanding of free speech in the digital age. Their potential for both beneficial and harmful uses makes it urgent to revisit legal frameworks and ethical guidelines, and their continued development and deployment call for a robust public conversation about the legal and ethical implications. We urge readers to pursue further research and discussion on the future of Character AI and free speech, so that these chatbots are used ethically and safely while the fundamental principles of free speech remain protected.

Featured Posts

-

Frances National Rally Evaluating The Strength Of Le Pens Support Following Sundays Demonstration

May 24, 2025

Frances National Rally Evaluating The Strength Of Le Pens Support Following Sundays Demonstration

May 24, 2025 -

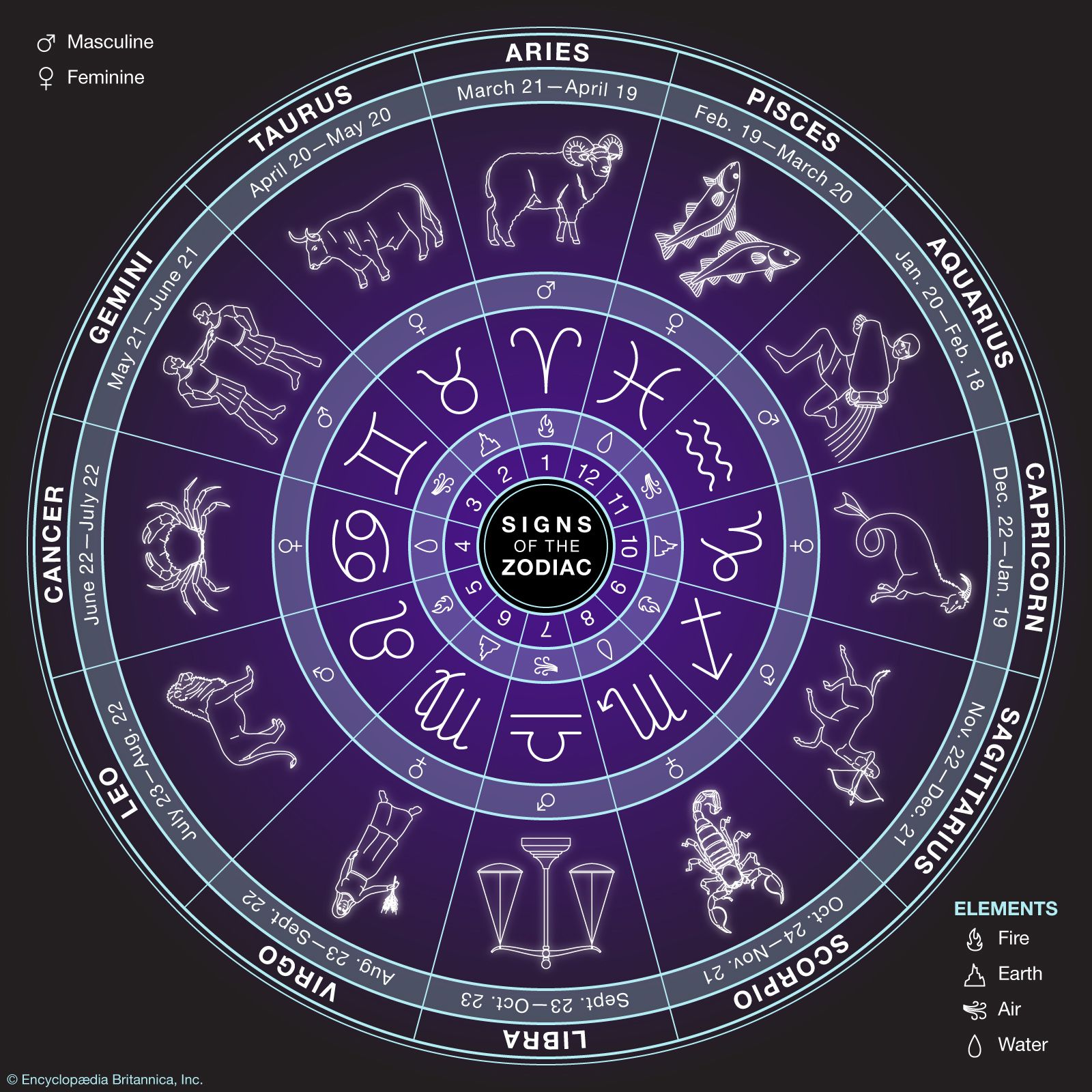

Positive Horoscope Outlook 5 Zodiac Signs On April 14 2025

May 24, 2025

Positive Horoscope Outlook 5 Zodiac Signs On April 14 2025

May 24, 2025 -

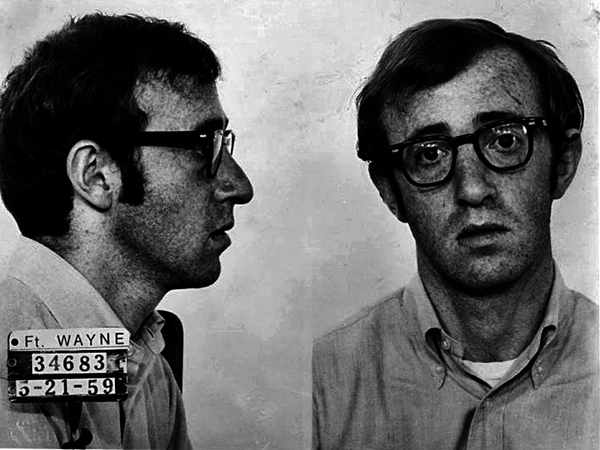

Is Sean Penn Me Too Blind His Defense Of Woody Allen Sparks Debate

May 24, 2025

Is Sean Penn Me Too Blind His Defense Of Woody Allen Sparks Debate

May 24, 2025 -

Ferrari Challenge South Florida Hosts Thrilling Racing Days

May 24, 2025

Ferrari Challenge South Florida Hosts Thrilling Racing Days

May 24, 2025 -

Horoscopo Predicciones Para La Semana Del 11 Al 17 De Marzo De 2025

May 24, 2025

Horoscopo Predicciones Para La Semana Del 11 Al 17 De Marzo De 2025

May 24, 2025

Latest Posts

-

Actor Neal Mc Donough Takes On Pro Bull Riding For The Last Rodeo

May 24, 2025

Actor Neal Mc Donough Takes On Pro Bull Riding For The Last Rodeo

May 24, 2025 -

Celebrity Spotting Neal Mc Donough At Acero Boards And Bottles In Boise

May 24, 2025

Celebrity Spotting Neal Mc Donough At Acero Boards And Bottles In Boise

May 24, 2025 -

Neal Mc Donough Rides The Bulls In The Last Rodeo

May 24, 2025

Neal Mc Donough Rides The Bulls In The Last Rodeo

May 24, 2025 -

Boise Sighting Neal Mc Donough At Acero Boards And Bottles

May 24, 2025

Boise Sighting Neal Mc Donough At Acero Boards And Bottles

May 24, 2025 -

Kevin Pollaks Tulsa King Season 3 Role A Threat To Sylvester Stallone

May 24, 2025

Kevin Pollaks Tulsa King Season 3 Role A Threat To Sylvester Stallone

May 24, 2025