ChatGPT And OpenAI: Facing FTC Investigation For Potential Privacy Violations

The FTC's Investigation: What We Know So Far

The Federal Trade Commission (FTC) is the U.S. government agency responsible for protecting consumers by preventing anticompetitive, deceptive, and unfair business practices. Its authority extends to investigating privacy violations, particularly those involving the collection, use, and storage of personal data. The FTC's investigation into OpenAI and ChatGPT centers on whether the company violated Section 5 of the FTC Act, which prohibits unfair or deceptive acts or practices in or affecting commerce.

Specific allegations against OpenAI and ChatGPT include:

- Potentially unlawful data collection: Concerns center on the breadth and nature of the data ChatGPT collects, and on whether users are fully informed about what is gathered and how it is used. These include the collection of IP addresses, browsing history, and sensitive personal information that users may inadvertently reveal in their prompts.

- Insufficient data security measures: Questions have been raised about the security protocols in place to protect the vast amounts of user data handled by OpenAI. The potential for data breaches and unauthorized access poses significant risks to user privacy.

- Possible COPPA violations: The Children's Online Privacy Protection Act (COPPA) sets specific requirements, including verifiable parental consent, for collecting personal information online from children under 13. If children under 13 have used ChatGPT, questions may arise about OpenAI's compliance with these rules.

- Lack of transparency regarding data usage: Critics argue that OpenAI is not sufficiently transparent about how it uses user data, whether for training models, targeted advertising, or other purposes that may not be fully disclosed to users.

The FTC has yet to release a comprehensive public statement detailing the full scope of its investigation. OpenAI has acknowledged the investigation but has not publicly detailed its response.

ChatGPT's Data Handling Practices and Potential Risks

ChatGPT processes large volumes of user data, including conversation history, prompts, and responses. OpenAI may use this data to train and improve its models so that they generate more relevant and coherent outputs. However, this practice presents several potential risks:

- Data breaches and unauthorized access: A successful breach of OpenAI's systems could expose a massive amount of sensitive user data, leading to identity theft, financial fraud, or other harmful consequences.

- Potential for misuse of personal information: The data ChatGPT collects could be used for purposes that users neither anticipate nor consent to.

- Profiling and discriminatory outcomes: The data used to train the model could reflect existing societal biases, leading to discriminatory outcomes or unfair treatment of certain user groups.

- The impact on user anonymity: The nature of the data collected and its use in model training raise concerns about the ability of users to maintain their anonymity.

Data minimization and purpose limitation are crucial principles in data protection. OpenAI should only collect the minimum necessary data and use it solely for the specified purpose. Robust data security best practices, including encryption, access controls, and regular security audits, are essential to mitigate the risks associated with the handling of sensitive user data in AI chatbots.
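To make data minimization and purpose limitation concrete, here is a minimal Python sketch. It assumes a hypothetical record format and a hypothetical table of declared purposes (`ALLOWED_FIELDS` and `minimize` are illustrative names, not a description of OpenAI's systems). Each purpose lists the only fields it may read, every other field is dropped, and requests for an undeclared purpose are refused.

```python
# Hypothetical purposes and field names, chosen for illustration only;
# they are not OpenAI's actual data schema.
ALLOWED_FIELDS: dict[str, set[str]] = {
    "answer_prompt": {"conversation_id", "prompt"},
    "billing": {"account_id", "plan"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Return only the fields the declared purpose needs."""
    if purpose not in ALLOWED_FIELDS:
        # Purpose limitation: refuse processing for any purpose not declared up front.
        raise PermissionError(f"undeclared purpose: {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    # Data minimization: keep only the fields this purpose requires.
    return {key: value for key, value in record.items() if key in allowed}


if __name__ == "__main__":
    raw = {
        "conversation_id": "c-123",
        "prompt": "Summarize this paragraph",
        "ip_address": "203.0.113.7",
        "account_id": "a-9",
        "plan": "free",
    }
    print(minimize(raw, "answer_prompt"))
    # {'conversation_id': 'c-123', 'prompt': 'Summarize this paragraph'}
    try:
        minimize(raw, "ad_targeting")
    except PermissionError as err:
        print(err)  # undeclared purpose: 'ad_targeting'
```

Keeping the allow-list explicit makes it easy to audit which data each purpose can touch. Encryption and access controls would sit alongside a filter like this rather than replace it.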

The Broader Implications for AI and Data Privacy

The FTC investigation carries significant implications for the AI industry as a whole. It underscores the need for greater regulatory scrutiny of AI companies and their data handling practices.

- Increased regulatory scrutiny of AI companies: Stricter rules and closer oversight from regulators worldwide are likely to follow.

- Potential for new data privacy laws and regulations: This investigation could catalyze the development of more comprehensive data privacy laws specifically tailored to the unique challenges posed by AI systems.

- The need for greater transparency and accountability in AI systems: The investigation highlights the urgent need for AI companies to be more transparent about their data practices and accountable for potential harms caused by their technologies.

- The impact on consumer trust in AI technology: If privacy concerns are not adequately addressed, it could erode consumer trust and hinder the widespread adoption of AI technologies.

The ethical considerations surrounding AI and data privacy are paramount. Developing and deploying AI systems responsibly requires careful consideration of potential societal impacts, including fairness, accountability, and transparency.

What Users Can Do to Protect Their Privacy When Using ChatGPT

While the responsibility for data protection rests primarily with OpenAI, users can also take steps to mitigate their own privacy risks:

- Avoid sharing sensitive personal information: Refrain from entering highly personal or confidential data into ChatGPT prompts.

- Review and adjust privacy settings: Read OpenAI's privacy policy, then review ChatGPT's data controls, such as whether your conversations may be used to improve OpenAI's models, and adjust them to reflect your preferences.

- Be mindful of the data you input: Think carefully about what information you are sharing, as even seemingly innocuous data could be used to infer sensitive details.

- Consider using privacy-enhancing technologies (PETs): Explore technologies that can help enhance your privacy while using online services, such as VPNs or privacy-focused browsers.
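Simple pattern matching can back up the first tip in the list above by catching a few obvious identifiers before text is pasted into a prompt. The sketch below uses a hypothetical helper (`redact` and its patterns are illustrative assumptions, not an OpenAI feature). It recognizes only simple email addresses, card-like numbers of 13 to 16 digits, and US-style 10-digit phone numbers, so it is a safety net, not a substitute for leaving sensitive data out.

```python
import re

# Illustrative patterns only (an assumption, not a complete PII detector):
# they miss names, street addresses, non-US phone formats, and much more.
REDACTION_PATTERNS = [
    # Simple email addresses such as jane.doe@example.com.
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    # Card-like numbers: 13 to 16 digits, optionally separated by spaces or hyphens.
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),
    # US-style 10-digit phone numbers such as 555-123-4567 or 555.123.4567.
    ("PHONE", re.compile(r"\b\d{3}[ .-]?\d{3}[ .-]?\d{4}\b")),
]


def redact(text: str) -> str:
    """Replace each match with a bracketed label, applying patterns in list order."""
    for label, pattern in REDACTION_PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Email jane.doe@example.com or call 555-123-4567 about card 4111 1111 1111 1111."
    print(redact(prompt))
    # Email [EMAIL] or call [PHONE] about card [CARD].
```

CARD runs before PHONE so that a spaced 13-to-16-digit run is labeled as a whole rather than partly matched as a phone number. Anything the patterns miss, such as names or addresses, still reaches the chatbot.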

Conclusion

The FTC investigation into ChatGPT and OpenAI highlights the critical need for robust data privacy protections in the rapidly evolving field of artificial intelligence. Its implications are far-reaching, affecting not only OpenAI but the entire AI industry. By understanding the privacy risks ChatGPT poses and taking proactive steps to protect their personal information, users can contribute to a safer and more responsible AI ecosystem. Stay informed as the investigation unfolds, and advocate for strong data protection regulations so that AI technologies are developed and used responsibly.

Featured Posts

-

Sulm Vdekjeprures Me Thike Ne Ceki Detajet E Ngjarjes

May 03, 2025

Sulm Vdekjeprures Me Thike Ne Ceki Detajet E Ngjarjes

May 03, 2025 -

3 Arena Concert Loyle Carner Announces Dublin Show

May 03, 2025

3 Arena Concert Loyle Carner Announces Dublin Show

May 03, 2025 -

End Of An Era The Justice Department And School Desegregation

May 03, 2025

End Of An Era The Justice Department And School Desegregation

May 03, 2025 -

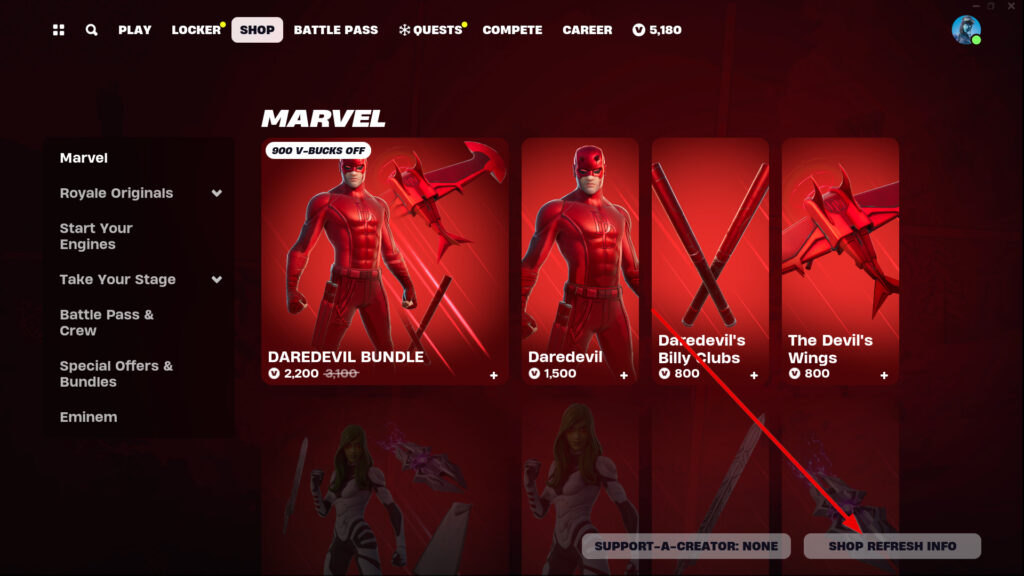

Fortnite Item Shop Gets A Useful New Feature

May 03, 2025

Fortnite Item Shop Gets A Useful New Feature

May 03, 2025 -

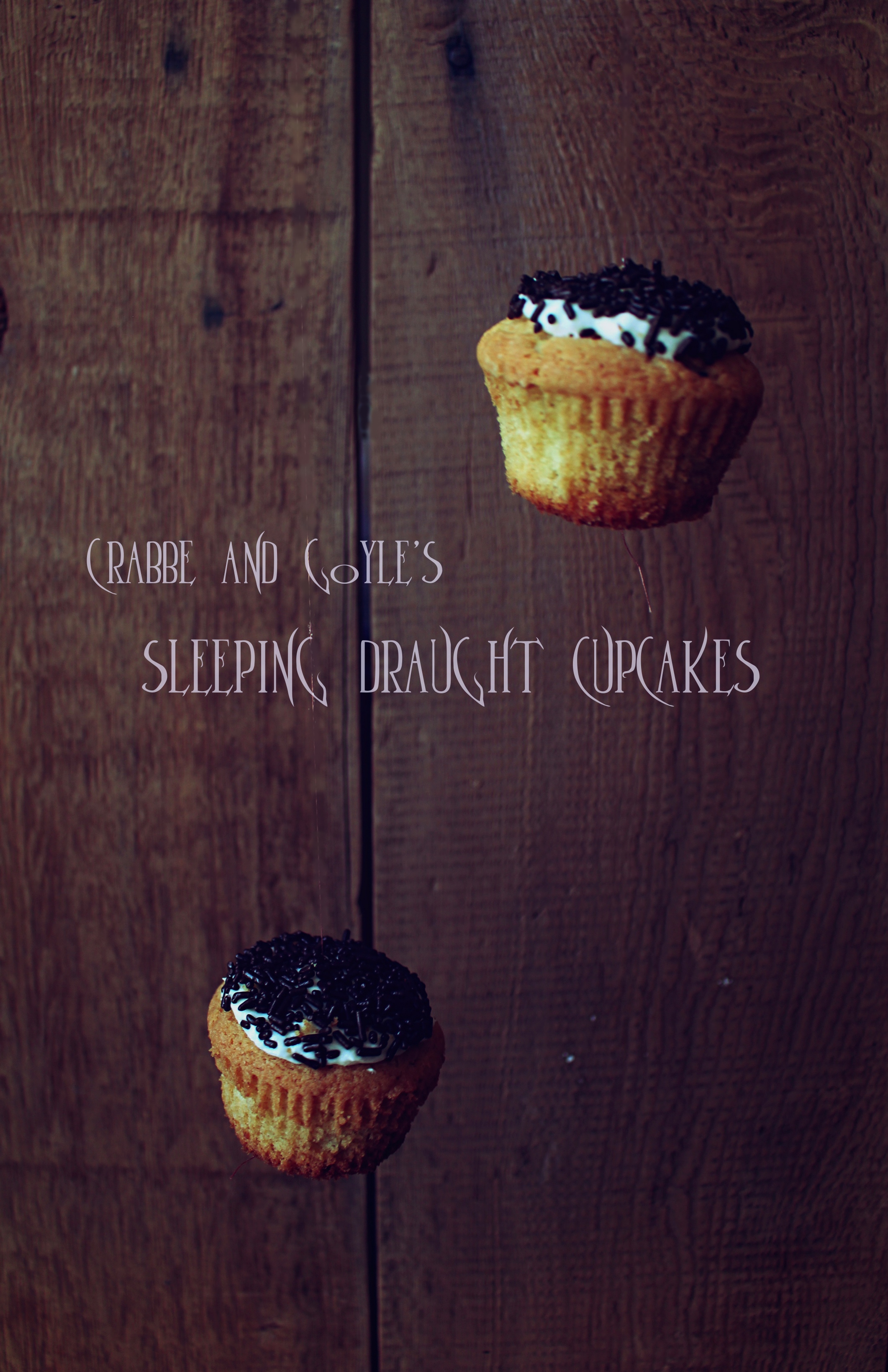

Harry Potters Crabbe A Shocking Transformation

May 03, 2025

Harry Potters Crabbe A Shocking Transformation

May 03, 2025

Latest Posts

-

The Monkeys Legacy Pressure Mounts On The 666 M Horror Franchise Reboot

May 04, 2025

The Monkeys Legacy Pressure Mounts On The 666 M Horror Franchise Reboot

May 04, 2025 -

The Return Of Final Destinations Most Iconic Death

May 04, 2025

The Return Of Final Destinations Most Iconic Death

May 04, 2025 -

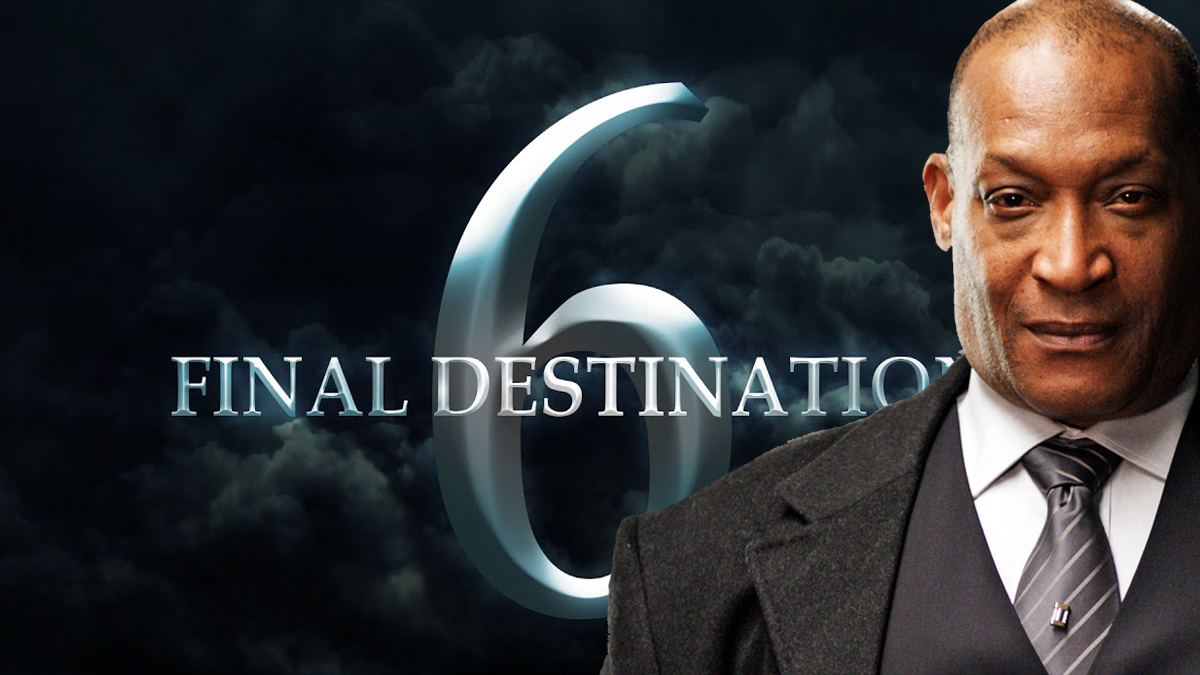

Tony Todds Final Destination Bloodlines Return A Look Back

May 04, 2025

Tony Todds Final Destination Bloodlines Return A Look Back

May 04, 2025 -

The Monkey Sets A High Bar Expectations For The 666 M Horror Franchise Reboot

May 04, 2025

The Monkey Sets A High Bar Expectations For The 666 M Horror Franchise Reboot

May 04, 2025 -

The Final Destination Franchise A Look At The Worldwide Box Office Performance And The Bloodline Trailer

May 04, 2025

The Final Destination Franchise A Look At The Worldwide Box Office Performance And The Bloodline Trailer

May 04, 2025