Develop Voice Assistants With OpenAI's New Tools (2024)


Understanding OpenAI's Relevant Tools for Voice Assistant Development

A voice assistant is a pipeline of three stages: converting speech to text, understanding the request and generating a reply, and converting that reply back into speech. OpenAI provides an API for each stage. Let's explore these building blocks.

Leveraging OpenAI's Whisper API for Speech-to-Text Conversion

Accurate and efficient speech-to-text conversion is the foundation of any voice assistant. OpenAI's Whisper API excels in this area, offering several key advantages:

- High accuracy: Whisper was trained on roughly 680,000 hours of multilingual audio, which makes it robust to background noise and diverse accents. This is crucial for a reliable, user-friendly experience.

- Multilingual support: Whisper transcribes speech in many languages and can also translate non-English speech into English text, making your voice assistant accessible to a global audience.

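- Near-real-time transcription: The hosted Whisper API transcribes uploaded audio files (up to 25 MB each) rather than a live stream, so conversational applications typically record a short utterance and send it as soon as the speaker stops. With short clips, the round trip is usually fast enough for interactive use.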

- Cost-effectiveness: Whisper API usage is billed per minute of audio at a rate that compares favorably with many commercial speech-to-text services, which keeps it within reach of developers with varying budgets.

Use cases for Whisper's capabilities include handling diverse accents in customer service applications, transcribing audio from meetings in noisy conference rooms, and creating live captioning tools.
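A minimal sketch of the transcription step, assuming the official openai Python library (version 1.0 or later) and an audio file on disk; transcribe_file is a hypothetical helper name. The optional language argument passes an ISO-639-1 hint such as "en" or "de", which can help with short or accented clips; when it is omitted, Whisper detects the language itself.

```python
from pathlib import Path
from typing import Any, Optional


def transcribe_file(client: Any, path: str, language: Optional[str] = None) -> str:
    """Transcribe an audio file with the hosted Whisper model.

    `client` is assumed to be an `openai.OpenAI()` instance (openai>=1.0).
    `language` is an optional ISO-639-1 hint; Whisper auto-detects otherwise.
    """
    kwargs = {"model": "whisper-1"}
    if language is not None:
        kwargs["language"] = language
    # The SDK uploads the open binary file as multipart form data.
    with Path(path).open("rb") as audio_file:
        transcript = client.audio.transcriptions.create(file=audio_file, **kwargs)
    return transcript.text.strip()
```

For example, transcribe_file(OpenAI(), "meeting.wav", language="en") returns the transcript as a plain string, with OpenAI imported from the openai package. Passing the client in as a parameter keeps the helper easy to test without network access.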

Utilizing OpenAI's GPT Models for Natural Language Understanding (NLU)

Once the voice input is transcribed, you need to understand its meaning. This is where OpenAI's GPT models shine. They power the conversational intelligence of your voice assistant by providing:

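- Contextual understanding: When the earlier turns of a conversation are included in the request, GPT models can interpret follow-up questions in that context and adapt their responses accordingly. The API itself is stateless, so your application must store and resend that history.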

- Intent recognition: They can accurately identify the user's intentions, even with ambiguous phrasing or complex queries.

- Entity extraction: GPT models can extract key information from the user's input, such as names, dates, and locations, crucial for task completion.

- Generating human-like responses: They produce natural and engaging responses, making the interaction feel more human.

- Handling complex queries: GPT models can handle multi-turn conversations and complex queries that require multiple steps to resolve.
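Intent recognition and entity extraction can be combined in a single request by asking the model to answer in JSON. A minimal sketch, assuming the openai>=1.0 client; the prompt wording, the intent labels, and the helper names extract_intent and parse_intent are hypothetical. Because models do not always return valid JSON, the parser falls back to an "other" intent instead of crashing.

```python
import json
from typing import Any, Dict

# Hypothetical instruction; the intent labels are examples, not an OpenAI feature.
EXTRACTION_PROMPT = (
    "Classify the user's request. Reply with JSON only, using the keys "
    '"intent" (one of: set_reminder, get_weather, play_music, other) and '
    '"entities" (an object mapping entity types such as date, time, '
    "location or person to the exact text from the request)."
)


def parse_intent(raw: str) -> Dict[str, Any]:
    """Parse the model's reply, falling back to 'other' on malformed output."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        data = None
    if not isinstance(data, dict):
        return {"intent": "other", "entities": {}}
    intent = data.get("intent")
    entities = data.get("entities")
    return {
        "intent": intent if isinstance(intent, str) else "other",
        "entities": entities if isinstance(entities, dict) else {},
    }


def extract_intent(client: Any, text: str, model: str = "gpt-3.5-turbo") -> Dict[str, Any]:
    """Ask a chat model for the intent and entities of one transcribed utterance."""
    response = client.chat.completions.create(
        model=model,
        temperature=0,  # less randomness suits classification tasks
        messages=[
            {"role": "system", "content": EXTRACTION_PROMPT},
            {"role": "user", "content": text},
        ],
    )
    return parse_intent(response.choices[0].message.content or "")
```

The returned dictionary can then drive ordinary application code, for example routing "set_reminder" to your calendar integration with the extracted date and time.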

Different GPT models (such as GPT-3.5 Turbo and GPT-4) trade capability against latency and cost: GPT-4 handles complex reasoning better but is slower and considerably more expensive per token. Choosing the right model is crucial for balancing performance and budget.
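To make the budget side of that trade-off concrete, you can estimate costs from token counts: each Chat Completions response reports usage.prompt_tokens and usage.completion_tokens. A minimal sketch with hypothetical helper names; prices are deliberately left as inputs because they change over time, so copy the current per-token rates from OpenAI's pricing page.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass(frozen=True)
class ModelPrice:
    """USD per 1,000 tokens; fill in current values from OpenAI's pricing page."""
    input_per_1k: float
    output_per_1k: float


def cost_of(prompt_tokens: int, completion_tokens: int, price: ModelPrice) -> float:
    """Cost of one request with the given token counts."""
    return (prompt_tokens * price.input_per_1k + completion_tokens * price.output_per_1k) / 1000


def cost_of_response(response: Any, price: ModelPrice) -> float:
    """Cost of a finished chat completion, read from its `usage` field."""
    usage = response.usage
    return cost_of(usage.prompt_tokens, usage.completion_tokens, price)


def project_costs(
    prompt_tokens: int,
    completion_tokens: int,
    turns_per_day: int,
    prices: Dict[str, ModelPrice],
) -> Dict[str, float]:
    """Projected daily spend per model, assuming a typical turn size."""
    return {
        name: cost_of(prompt_tokens, completion_tokens, price) * turns_per_day
        for name, price in prices.items()
    }
```

Feeding a few real responses through cost_of_response gives you a typical turn size, which project_costs then turns into a side-by-side daily estimate for each candidate model.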

Integrating OpenAI's Text-to-Speech (TTS) APIs for Realistic Voice Output

The final piece of the puzzle is converting the text response back into speech. OpenAI's TTS API (or a third-party speech service) provides:

- Different voice options: OpenAI's TTS API offers six preset voices (alloy, echo, fable, onyx, nova, and shimmer), each with its own character, so you can match the voice to your product.

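- Customization capabilities: OpenAI's TTS API lets you adjust the speaking speed (from 0.25× to 4×) and choose the output audio format. Finer control over intonation and pronunciation depends on the provider; some third-party services support it through markup such as SSML.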

- Emotional expression in speech synthesis: Some speech services can render speech in different emotional styles, which can make the interaction more engaging.

- Straightforward integration with other OpenAI tools: The TTS endpoint uses the same client library and API key as Whisper and the GPT models, so the whole pipeline runs through a single SDK.

Partner integrations may offer additional voice options and customization features, expanding your creative options.
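A minimal sketch of the speech-output step with OpenAI's TTS endpoint, assuming the openai>=1.0 client; synthesize_speech is a hypothetical helper name. It exposes the voice and speed settings discussed above and writes the returned MP3 bytes to disk, leaving playback to whatever audio library your platform uses.

```python
from pathlib import Path
from typing import Any


def synthesize_speech(
    client: Any,
    text: str,
    out_path: str,
    voice: str = "alloy",
    speed: float = 1.0,
    model: str = "tts-1",
) -> Path:
    """Convert a reply to speech with OpenAI's TTS endpoint and save it as MP3.

    `client` is assumed to be an `openai.OpenAI()` instance (openai>=1.0).
    """
    # The API accepts speeds from 0.25 (slowest) to 4.0 (fastest); fail early.
    if not 0.25 <= speed <= 4.0:
        raise ValueError("speed must be between 0.25 and 4.0")
    response = client.audio.speech.create(
        model=model,  # "tts-1" favors low latency, "tts-1-hd" favors quality
        voice=voice,  # e.g. "alloy", "nova", "onyx"
        input=text,
        speed=speed,
        response_format="mp3",
    )
    path = Path(out_path)
    path.write_bytes(response.content)  # raw audio bytes from the response
    return path
```

Voice names are passed through unchanged so that the API, rather than a hard-coded list, decides which voices are valid.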

Step-by-Step Guide to Building a Simple Voice Assistant with OpenAI

Let's build a basic voice assistant to demonstrate the process.

Setting up Your Development Environment

Before you start coding, you'll need:

- Python installation: Ensure you have Python installed on your system.

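- Required libraries: Install the openai library, plus SpeechRecognition and PyAudio for the microphone capture used in the example below (pip install openai SpeechRecognition pyaudio).

- Obtaining API keys: Sign up for an OpenAI account, create an API key, and store it in the OPENAI_API_KEY environment variable, which the openai library reads automatically.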
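Rather than hard-coding the key in source files, read it from the environment and fail early with a readable message if it is missing. A minimal sketch; require_api_key is a hypothetical helper, and OPENAI_API_KEY is the variable the openai library checks by default.

```python
import os


def require_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment, failing early if it is absent."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"{env_var} is not set; export it in your shell before starting the assistant"
        )
    return key
```

You can then create the client explicitly with OpenAI(api_key=require_api_key()), which surfaces a missing key at startup instead of on the first API call.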

Coding the Core Functionality (Speech-to-Text, NLU, Text-to-Speech)

This simplified example demonstrates the core functionality:

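```python
import speech_recognition as sr
from openai import OpenAI  # openai>=1.0 client interface

client = OpenAI()  # reads the API key from the OPENAI_API_KEY environment variable

# 1. Capture one utterance from the default microphone (needs PyAudio)
recognizer = sr.Recognizer()
with sr.Microphone() as source:
    recognizer.adjust_for_ambient_noise(source)
    audio = recognizer.listen(source)

# 2. Speech-to-text with Whisper; the recording is uploaded as an in-memory WAV file
transcript = client.audio.transcriptions.create(
    model="whisper-1",
    file=("speech.wav", audio.get_wav_data()),
)
text = transcript.text

# 3. Understanding and response generation with a chat model
response = client.chat.completions.create(
    model="gpt-3.5-turbo",  # or "gpt-4" for stronger reasoning at a higher price
    messages=[
        {"role": "system", "content": "You are a concise, helpful voice assistant."},
        {"role": "user", "content": text},
    ],
    max_tokens=150,
    temperature=0.7,
)
generated_text = response.choices[0].message.content.strip()

# 4. Text-to-speech: save the spoken reply as an MP3 file for playback
speech = client.audio.speech.create(model="tts-1", voice="alloy", input=generated_text)
with open("reply.mp3", "wb") as f:
    f.write(speech.content)
```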

This code snippet provides a basic framework. You'll need to adapt it based on your chosen TTS API and the specifics of the Whisper API integration.
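The snippet above handles a single exchange. The Chat Completions endpoint keeps no state between calls, so the contextual understanding described earlier only works if your code resends the previous turns. A minimal sketch of that bookkeeping, assuming the openai>=1.0 client; the Conversation class and its max_turns cap are hypothetical names, and the cap simply keeps prompt size (and cost) bounded.

```python
from typing import Any, Dict, List


class Conversation:
    """Stores recent turns so each request carries the conversation's context."""

    def __init__(self, system_prompt: str, max_turns: int = 6) -> None:
        if max_turns < 1:
            raise ValueError("max_turns must be at least 1")
        self.system = {"role": "system", "content": system_prompt}
        self.history: List[Dict[str, str]] = []
        self.max_turns = max_turns

    def messages(self) -> List[Dict[str, str]]:
        """System prompt followed by the retained history, in API message format."""
        return [self.system] + self.history

    def ask(self, client: Any, user_text: str, model: str = "gpt-3.5-turbo") -> str:
        """Send the new utterance together with prior turns and record the reply."""
        self.history.append({"role": "user", "content": user_text})
        response = client.chat.completions.create(model=model, messages=self.messages())
        reply = (response.choices[0].message.content or "").strip()
        self.history.append({"role": "assistant", "content": reply})
        # Keep only the most recent max_turns user/assistant pairs.
        del self.history[: -2 * self.max_turns]
        return reply
```

In a listening loop you would create one Conversation("You are a concise voice assistant.") per session and call conversation.ask(client, text) with each new transcript, so a follow-up such as "And tomorrow?" is sent alongside the question it refers to.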

Testing and Iterative Improvement

Thorough testing is critical:

- Unit testing: Test individual components (speech-to-text, NLU, TTS) separately.

- User acceptance testing: Gather feedback from real users to identify areas for improvement.

- Iterative refinement: Continuously refine your voice assistant based on user feedback and performance data.

- Monitoring API usage and costs: Keep track of API usage to optimize costs and avoid unexpected expenses.
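Because each component talks to the API through a client object, you can unit-test your own logic without network calls or API charges by substituting a mock. A minimal sketch using Python's built-in unittest and unittest.mock; generate_reply is a hypothetical helper standing in for your NLU step.

```python
import unittest
from types import SimpleNamespace
from unittest.mock import MagicMock


def generate_reply(client, text: str, model: str = "gpt-3.5-turbo") -> str:
    """Hypothetical NLU step under test: skip empty input, otherwise ask the model."""
    if not text.strip():
        return "Sorry, I didn't catch that."
    response = client.chat.completions.create(
        model=model, messages=[{"role": "user", "content": text}]
    )
    return (response.choices[0].message.content or "").strip()


class GenerateReplyTest(unittest.TestCase):
    def setUp(self):
        # A fake client whose completion mimics the shape of a real response.
        self.client = MagicMock()
        message = SimpleNamespace(content="  It is sunny.  ")
        self.client.chat.completions.create.return_value = SimpleNamespace(
            choices=[SimpleNamespace(message=message)]
        )

    def test_strips_model_output(self):
        self.assertEqual(generate_reply(self.client, "Weather?"), "It is sunny.")

    def test_empty_transcript_skips_api_call(self):
        self.assertEqual(generate_reply(self.client, "   "), "Sorry, I didn't catch that.")
        self.client.chat.completions.create.assert_not_called()
```

Saved as, for example, test_assistant.py, the tests run with python -m unittest test_assistant. The same pattern applies to the transcription and TTS steps.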

Advanced Features and Considerations for Voice Assistant Development with OpenAI

Building upon the basics, let's explore more advanced capabilities.

Personalized Experiences

Tailor the assistant to individual users:

- User profiling: Collect user data (with appropriate consent) to understand their preferences.

- Personalized recommendations: Offer tailored recommendations based on user profiles.

- Adaptive learning: Continuously improve the assistant's performance based on user interactions.

- Storing user data securely: Implement robust security measures to protect user privacy.
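One lightweight way to personalize responses is to fold stored preferences into the system prompt, but only for users who have opted in. A minimal sketch; the UserProfile fields and the personalized_system_prompt helper are hypothetical, and how you collect and store consent is up to your application.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class UserProfile:
    """Hypothetical profile record; store only what the user agreed to share."""
    name: str
    consented: bool = False
    preferences: Dict[str, str] = field(default_factory=dict)


def personalized_system_prompt(base: str, profile: UserProfile) -> str:
    """Append stored preferences to the system prompt, but only with consent."""
    if not profile.consented or not profile.preferences:
        return base
    # Sort keys so the prompt text is stable across runs.
    prefs = "; ".join(f"{k}: {v}" for k, v in sorted(profile.preferences.items()))
    return f"{base}\nThe user is {profile.name}. Known preferences: {prefs}."
```

Use the returned string as the content of the system message; users without consent simply get the base prompt, so nothing about them reaches the model.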

Integrating with External Services

Extend your voice assistant's capabilities:

- Smart home device control: Integrate with smart home platforms for voice-controlled automation.

- Calendar integration: Allow users to manage their schedules through voice commands.

- Weather updates: Provide real-time weather information.

- Online searches: Integrate with search engines for quick information retrieval.
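Most of these integrations follow the same pattern: describe a local function to the model through the Chat Completions tools parameter, let the model decide when to call it, run the function yourself, and send the result back in a message with the "tool" role. The sketch below assumes the openai>=1.0 response shape (message.tool_calls, each with an id, function.name, and a JSON string in function.arguments); get_weather, the schema, and the registry are hypothetical, and the actual lookup would call a weather service of your choice.

```python
import json
from typing import Any, Callable, Dict, List

# JSON Schema description of a hypothetical local function the model may request.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string", "description": "City name"}},
            "required": ["city"],
        },
    },
}


def dispatch_tool_calls(message: Any, registry: Dict[str, Callable[..., str]]) -> List[Dict[str, str]]:
    """Run each tool call the model requested and build the matching 'tool' messages."""
    results: List[Dict[str, str]] = []
    for call in message.tool_calls or []:
        name = call.function.name
        func = registry.get(name)
        if func is None:
            output = f"Unknown tool: {name}"
        else:
            try:
                args = json.loads(call.function.arguments or "{}")
                output = str(func(**args))
            except (json.JSONDecodeError, TypeError) as exc:
                # Malformed or unexpected arguments are reported back to the model.
                output = f"Invalid arguments for {name}: {exc}"
        results.append({"role": "tool", "tool_call_id": call.id, "content": output})
    return results
```

In a full turn you would pass tools=[WEATHER_TOOL] to client.chat.completions.create, append the assistant message that contains the tool calls to your messages list, extend that list with dispatch_tool_calls(message, {"get_weather": get_weather}), and call the API again so the model can phrase the spoken answer.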

Addressing Ethical Considerations and Bias Mitigation

Responsible AI development is paramount:

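- Addressing potential biases: You cannot change the data OpenAI's models were trained on, but you can test transcription and response quality across accents, dialects, and user groups, and carefully curate any fine-tuning or evaluation data you supply.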

- Ensuring fairness and equity: Design your voice assistant to be fair and equitable to all users.

- User privacy considerations: Prioritize user privacy and data security.

- Responsible data handling: Adhere to data privacy regulations and ethical guidelines.
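One concrete privacy measure is to redact obvious personal data from transcripts before they are written to logs or analytics. The sketch below relies on two simple regular expressions, an assumption rather than a complete PII detector: they cover common e-mail addresses and phone-number-like digit runs, will miss many cases, and can over-match other numbers such as dates. Treat it as a starting point; redact is a hypothetical helper name.

```python
import re

# Illustrative patterns only; production PII detection needs far broader coverage.
_PATTERNS = [
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "[EMAIL]"),
    (re.compile(r"\+?\d[\d\s().-]{7,}\d"), "[PHONE]"),
]


def redact(transcript: str) -> str:
    """Mask e-mail addresses and phone-number-like sequences before storage."""
    for pattern, label in _PATTERNS:
        transcript = pattern.sub(label, transcript)
    return transcript
```

Applying redact at the single point where transcripts are persisted keeps raw personal data out of logs while leaving the live conversation unchanged.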

Conclusion

OpenAI's tools make voice assistant development accessible to developers at many skill levels. By combining the Whisper API for speech recognition, GPT models for natural language understanding and response generation, and text-to-speech for voice output, you can build voice assistants that are both capable and user-friendly. Start with the simple pipeline described above, then add personalization and external integrations as your use case demands.

Featured Posts

-

Tornado Outbreak 25 Dead Widespread Destruction Across Central Us

May 19, 2025

Tornado Outbreak 25 Dead Widespread Destruction Across Central Us

May 19, 2025 -

Eurovision Song Contest 2025 Date And Location Announced

May 19, 2025

Eurovision Song Contest 2025 Date And Location Announced

May 19, 2025 -

Eurosong 10 Najlosijih Rezultata Hrvatske U Povijesti

May 19, 2025

Eurosong 10 Najlosijih Rezultata Hrvatske U Povijesti

May 19, 2025 -

Marko Bosnjak Put Do Eurosonga

May 19, 2025

Marko Bosnjak Put Do Eurosonga

May 19, 2025 -

Parcay Sur Vienne Succes De La Fete De La Marche Avec Une Centaine De Participants

May 19, 2025

Parcay Sur Vienne Succes De La Fete De La Marche Avec Une Centaine De Participants

May 19, 2025

Latest Posts

-

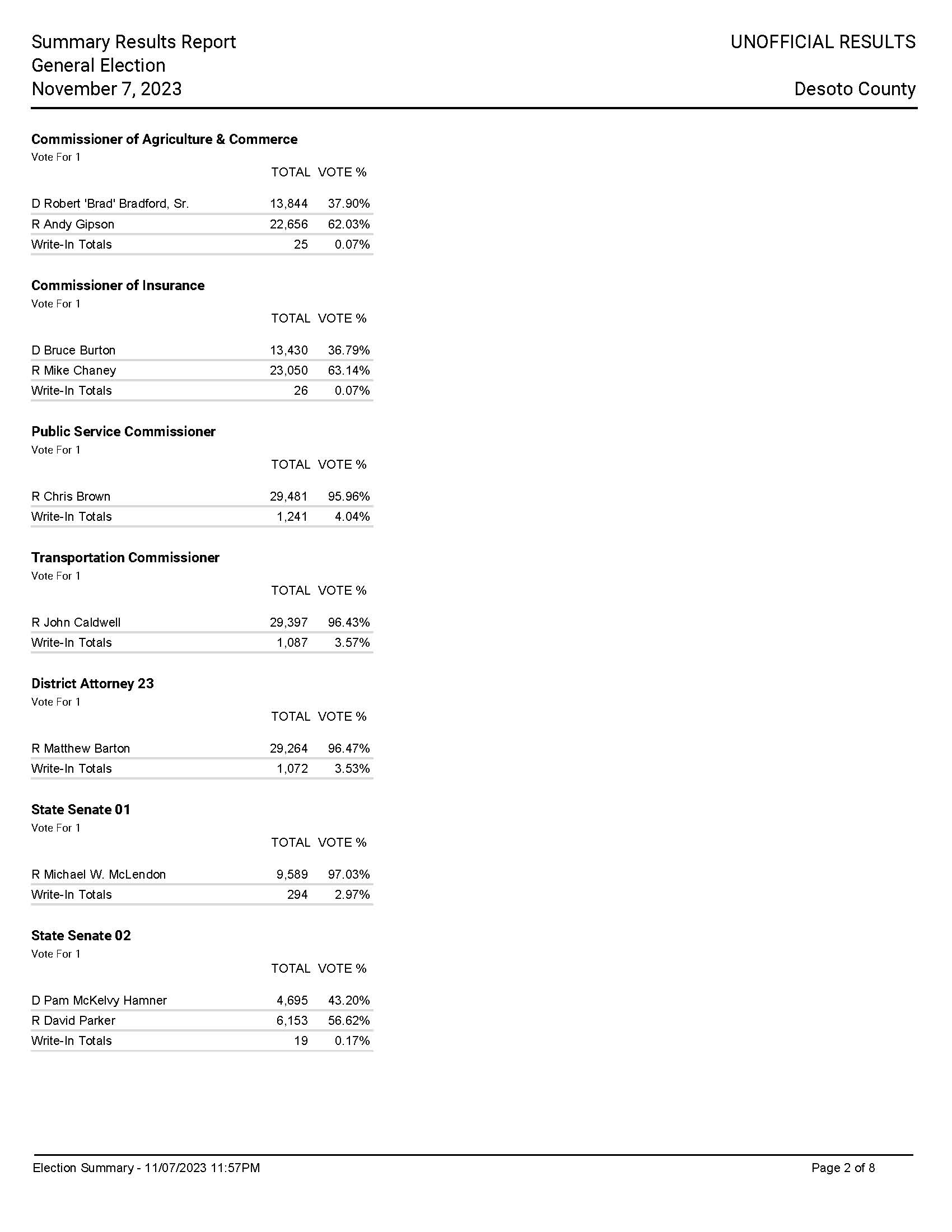

Understanding The Southaven Mayoral Election A Voters Guide For De Soto County

May 19, 2025

Understanding The Southaven Mayoral Election A Voters Guide For De Soto County

May 19, 2025 -

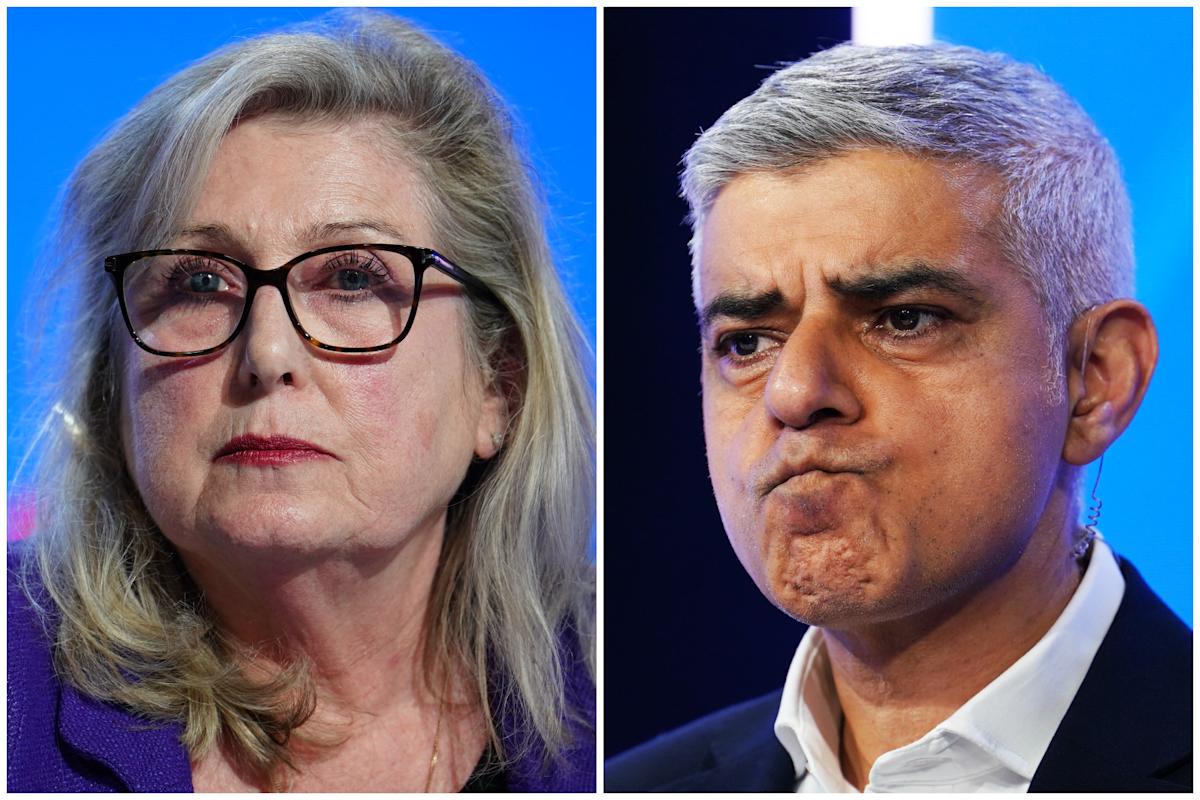

Who Will Lead Southaven De Soto Countys Mayoral Election

May 19, 2025

Who Will Lead Southaven De Soto Countys Mayoral Election

May 19, 2025 -

Southaven Mayoral Election 2024 Candidates And Key Issues

May 19, 2025

Southaven Mayoral Election 2024 Candidates And Key Issues

May 19, 2025 -

De Soto County Votes Key Issues In The Southaven Mayoral Election

May 19, 2025

De Soto County Votes Key Issues In The Southaven Mayoral Election

May 19, 2025 -

Southavens Mayoral Race A Guide For De Soto County Voters

May 19, 2025

Southavens Mayoral Race A Guide For De Soto County Voters

May 19, 2025