Mass Shooter Radicalization: Examining The Influence Of Algorithms

The Role of Algorithmic Filtering and Echo Chambers

Many social media platforms are built to maximize engagement: their ranking algorithms prioritize content that keeps users scrolling, often at the expense of safety and accuracy. This can lead to the formation of echo chambers, digital spaces where users are exposed mainly to information that confirms their pre-existing beliefs.

Personalized Content Feeds

- Algorithms prioritizing engagement over safety: Ranking systems often reward sensational and emotionally charged content regardless of its accuracy or potential for harm, which lets extremist viewpoints gain traction easily.

- The filter bubble effect: Personalized feeds narrow the range of perspectives users encounter, shielding them from opposing viewpoints and reinforcing existing biases. This isolation can accelerate radicalization.

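- Reinforcement of radical beliefs through curated content: Algorithms select content similar to what a user has engaged with before. Because engaging with more extreme material tends to produce more recommendations of the same kind, this creates a feedback loop in which increasingly extreme views are presented as the norm.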

For example, YouTube's recommendation algorithm has been criticized for leading users down "rabbit holes" of extremist content, steering them toward increasingly radical videos and channels. Similarly, critics have argued that Facebook's targeted advertising tools allow extremist groups to reach specific demographics with tailored propaganda.

Targeted Advertising and Propaganda

Algorithms are not simply passive tools; they can be actively exploited to push extremist ideologies to specific individuals. Targeted advertising delivers propaganda and recruitment materials directly to susceptible users, often bypassing traditional media gatekeepers.

- Techniques used in targeted advertising: Profiling based on users' demographics, interests, and online behavior allows extremist groups to identify individuals with specific beliefs and vulnerabilities.

- Ease of spreading extremist propaganda online: The anonymity and reach of the internet make it exceptionally easy to spread extremist propaganda and recruitment materials.

- Difficulty in detecting and removing such content: The sheer volume of online content makes it challenging for platforms to effectively monitor and remove extremist materials.

Through this kind of micro-targeting, extremist groups can reach potential recruits based on their online activity, using personalized messages designed to exploit vulnerabilities and draw people into their ideologies.

The Spread of Misinformation and Conspiracy Theories

Algorithms play a significant role in amplifying false narratives and conspiracy theories, creating a climate of distrust that fuels extremist ideologies. Misinformation, including false claims about mass shootings themselves, can contribute to radicalization by fostering anger, resentment, and a sense of injustice.

The Amplification of False Narratives

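- Examples of misinformation campaigns related to mass shootings: In the aftermath of shootings, false claims about the perpetrators' identities, motives, and backgrounds often spread online, as do conspiracy theories alleging that attacks were staged, such as the "crisis actor" claims about Sandy Hook. These narratives fuel existing biases and prejudices.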

- The role of bots and automated accounts: Bots are used to artificially amplify extremist content and manipulate public opinion, creating a false impression of widespread support.

- The difficulty in fact-checking and debunking misinformation: Misinformation spreads rapidly online, often outpacing fact-checking efforts, making it difficult to counter harmful narratives.

The spread of misinformation often creates a sense of disillusionment and alienation, which can make individuals more susceptible to extremist ideologies.

Online Grooming and Radicalization

Recommendation algorithms can facilitate online grooming by creating pathways through which individuals are gradually exposed to increasingly extreme content. The grooming process often involves building trust and a sense of community before introducing more violent or hateful ideologies.

- The stages of online grooming: The process can be gradual, starting with seemingly innocuous content and progressively moving towards more extremist viewpoints.

- The use of online communities to build trust and recruit members: Online communities provide a space for extremist groups to build relationships, recruit members, and share their ideologies.

- The role of anonymity in online spaces: Anonymity online allows individuals to express hateful views without fear of immediate consequences, fostering a climate of intolerance and hate speech.

Examples include online forums and chat groups dedicated to extremist ideologies, where individuals are gradually radicalized through exposure to increasingly violent and hateful content.

The Challenges of Content Moderation and Regulation

Moderating and regulating online content is a monumental task. Social media companies face immense challenges in identifying and removing extremist material due to the sheer volume of data and the sophisticated tactics employed by extremist groups.

The Scale and Complexity of the Problem

- The limitations of automated content moderation: Automated systems can struggle to identify nuanced forms of hate speech and extremist content, such as coded language, in-group slang, or irony.

- The human cost of content moderation: Human moderators face immense psychological distress from exposure to violent and graphic content.

- The challenges of cross-platform coordination: The decentralized nature of the internet makes it difficult to coordinate efforts across platforms, so content removed from one site can resurface on another.

The sheer scale of the problem necessitates a multi-pronged approach.

The Need for Policy Changes and Collaboration

Addressing mass shooter radicalization requires collaboration among governments, social media companies, and researchers. This includes policy changes aimed at improving content moderation, increasing transparency, and fostering a more responsible online environment.

- The role of government regulation: Governments need to develop clear and effective regulations to address online extremism.

- The need for increased transparency from social media companies: Social media companies should be more transparent about their algorithms and content moderation policies.

- The importance of cross-disciplinary research: Further research is needed to understand the complex dynamics of online radicalization and to develop effective countermeasures.

Potential solutions include improved algorithms that prioritize safety over engagement, increased investment in content moderation, and collaborative efforts to identify and address harmful online narratives.

Conclusion

Algorithms can contribute significantly to mass shooter radicalization by creating echo chambers, amplifying misinformation, and facilitating online grooming. The scale and complexity of the problem require a multi-faceted approach involving collaboration among governments, tech companies, and researchers, along with continued study of the relationship between algorithms and extremist ideologies.

To address this threat, we must support research into algorithmic bias and demand greater transparency and accountability from social media companies. Contact your elected officials and advocate for policies that promote a safer online environment. Ignoring the role of algorithms in mass shooter radicalization is no longer an option; proactive action is crucial to mitigate this dangerous phenomenon and protect our communities.

Featured Posts

-

Acquisition De Dren Bio Par Sanofi Un Nouvel Anticorps Bispecifique Pour Le Traitement Des Maladies Immunitaires

May 31, 2025

Acquisition De Dren Bio Par Sanofi Un Nouvel Anticorps Bispecifique Pour Le Traitement Des Maladies Immunitaires

May 31, 2025 -

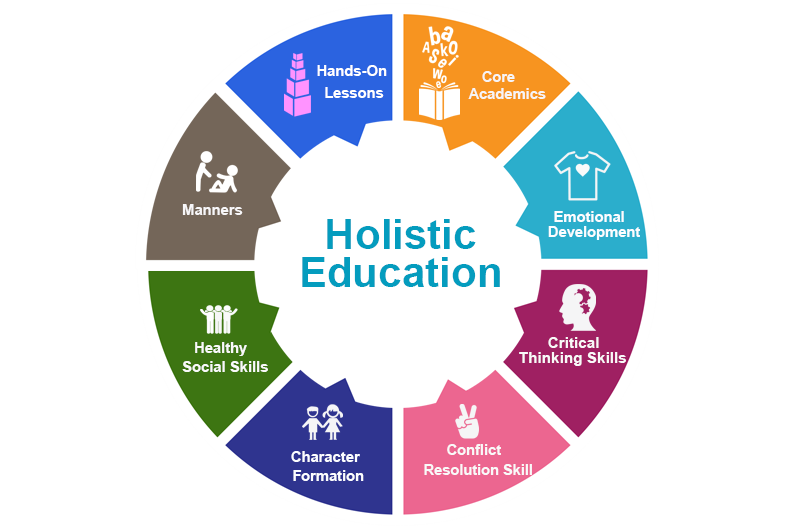

Understanding The Good Life A Holistic Perspective

May 31, 2025

Understanding The Good Life A Holistic Perspective

May 31, 2025 -

Alcaraz Joins Ruud In Barcelona Open Round Of 16

May 31, 2025

Alcaraz Joins Ruud In Barcelona Open Round Of 16

May 31, 2025 -

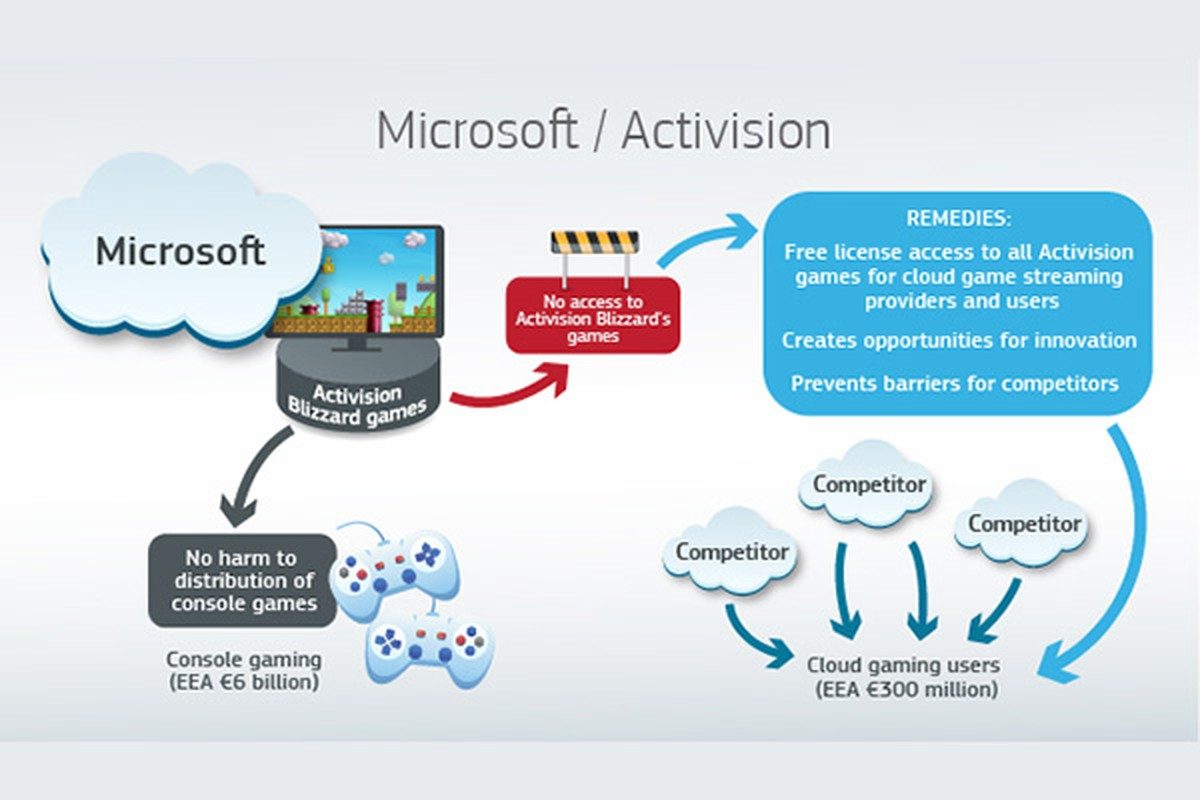

Future Of Gaming Uncertain Ftcs Appeal Against Microsoft Activision Merger

May 31, 2025

Future Of Gaming Uncertain Ftcs Appeal Against Microsoft Activision Merger

May 31, 2025 -

Minimalist Living A 30 Day Approach To Intentional Living

May 31, 2025

Minimalist Living A 30 Day Approach To Intentional Living

May 31, 2025