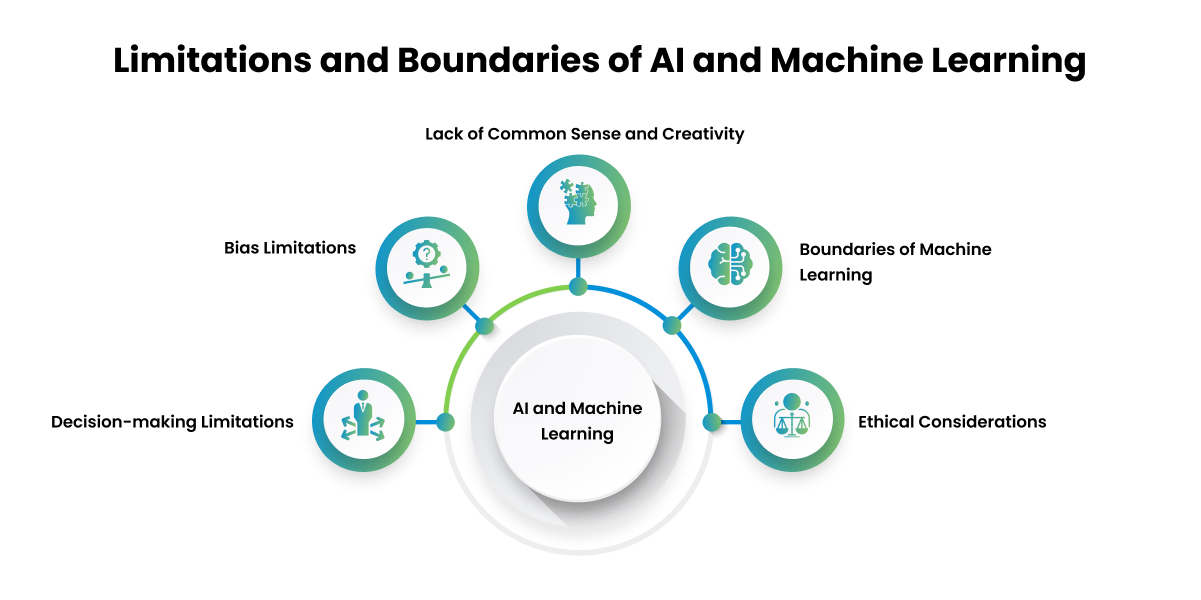

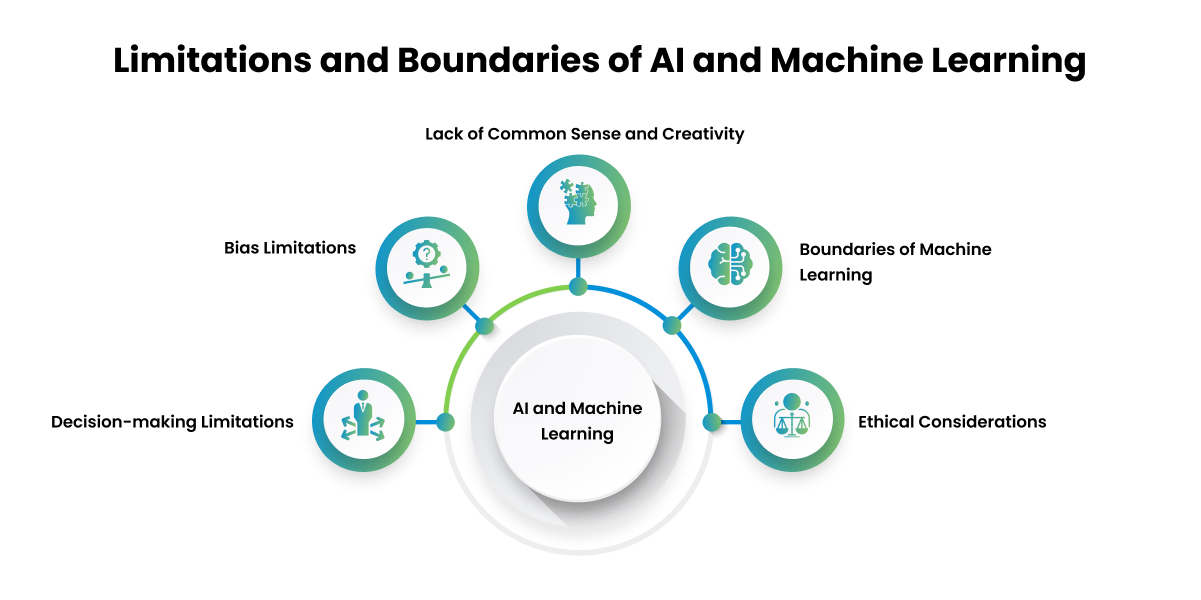

Responsible AI: Acknowledging The Limitations Of AI Learning

Data Bias and its Impact on AI Outcomes

AI models are only as good as the data they are trained on. Biased training data tends to produce biased models, which can lead to unfair or discriminatory outcomes, and this is a central challenge in responsible AI. For example, facial recognition systems trained primarily on images of light-skinned individuals often perform worse on people with darker skin tones, leading to misidentification and potential injustices. Similarly, loan application algorithms trained on historical data that reflects existing societal biases can perpetuate discriminatory lending practices.

- Insufficient or unrepresentative datasets: A lack of diversity in the data used to train AI models can lead to skewed results that disproportionately affect certain groups.

- Historical biases embedded in data sources: Data often reflects existing societal biases, perpetuating and even amplifying them within AI systems.

- Lack of diversity in data collection teams: The teams responsible for collecting and curating data also influence its representativeness. A lack of diversity in these teams can result in biases being overlooked.

- Techniques to mitigate data bias: Strategies such as data augmentation and resampling (adding transformed or synthetic examples to balance representation) and bias detection algorithms are important steps toward building more equitable AI systems. Careful data curation and rigorous testing across groups are also vital.
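One simple form of bias detection is to compare how often a model gives a favorable decision to each group. The sketch below is illustrative only: the group labels, the decisions, and the 0.8 review threshold are made-up assumptions, and a real audit would use several metrics rather than this one.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of favorable decisions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}

def parity_gap(rates):
    """Difference between the highest and lowest selection rate."""
    return max(rates.values()) - min(rates.values())

def impact_ratio(rates):
    """Lowest selection rate divided by the highest one."""
    return min(rates.values()) / max(rates.values())

# Hypothetical loan decisions: (group label, approved?)
decisions = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 5 + [("group_b", False)] * 5
)

rates = selection_rates(decisions)
gap = parity_gap(rates)
ratio = impact_ratio(rates)

REVIEW_THRESHOLD = 0.8  # assumed cutoff for flagging, not a universal rule
needs_review = ratio < REVIEW_THRESHOLD

print(rates, round(gap, 3), round(ratio, 3), needs_review)
```

A large gap does not prove discrimination on its own, but it is a cheap signal that the data or the model deserves a closer look.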

The Limits of Generalization in AI Systems

AI models are trained on specific datasets and may struggle to generalize to new, unseen data or to different contexts. Poor generalization typically shows up as overfitting or underfitting. Overfitting occurs when a model performs very well on the training data but poorly on new data because it has memorized the training set rather than learned the underlying patterns. Underfitting occurs when the model is too simple to capture the structure in the data, so it performs poorly on both training and unseen data.

Consider an AI trained to identify cats in photographs taken under specific lighting conditions. This AI might fail to recognize cats in images with different lighting, backgrounds, or angles, illustrating the limitations of generalization.

- Overfitting: The model performs well on training data but poorly on unseen data. It is a frequent issue in machine learning and calls for careful control of model complexity, for example through regularization.

- Underfitting: The model is too simple to capture the complexities of the data, leading to poor performance on both training and unseen data. Feature engineering and model selection play a critical role in avoiding this issue.

- Transfer learning: This technique leverages knowledge gained from training on one task to improve performance on a related task, helping to improve generalization capabilities.

- Importance of robust testing and validation procedures: Rigorous testing with diverse datasets is crucial to identify and mitigate the limitations of generalization.
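The gap between training and validation error is the usual way to spot these failure modes. The following is a minimal sketch on synthetic data, assuming a noisy sine curve as the "true" pattern. The nearest-neighbor and mean-only predictors are stand-ins chosen for brevity, not models discussed in this article.

```python
import math
import random

def make_data(n=60, noise=0.3, seed=0):
    """Noisy samples of sin(x) on an evenly spaced grid (synthetic data)."""
    rng = random.Random(seed)
    return [(i * 0.1, math.sin(i * 0.1) + rng.gauss(0, noise)) for i in range(n)]

def knn_predict(train, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def mean_predict(train, x):
    """Ignore x entirely and always predict the training mean."""
    return sum(y for _, y in train) / len(train)

def mse(predict, train, data):
    """Mean squared error of a predictor on a dataset."""
    return sum((predict(train, x) - y) ** 2 for x, y in data) / len(data)

data = make_data()
train, valid = data[0::2], data[1::2]  # alternate points: train / validation

models = {
    "1-NN (memorizes)": lambda tr, x: knn_predict(tr, x, k=1),
    "5-NN": lambda tr, x: knn_predict(tr, x, k=5),
    "mean only (too simple)": mean_predict,
}

results = {
    name: (mse(predict, train, train), mse(predict, train, valid))
    for name, predict in models.items()
}

for name, (train_err, valid_err) in results.items():
    print(f"{name:24s} train MSE={train_err:.3f}  validation MSE={valid_err:.3f}")
```

The 1-nearest-neighbor model reproduces every training point exactly, so its training error is zero while its validation error is not, which is the signature of overfitting. The mean-only model has a non-zero error even on the data it was fit to, which is the signature of underfitting.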

Explainability and Transparency in AI Decision-Making

Many AI models, particularly deep learning models, function as "black boxes": it is difficult to understand the reasoning behind their decisions. This lack of transparency raises serious concerns, especially in high-stakes applications like healthcare and criminal justice. Explainable AI (XAI) aims to address the problem by making AI decision-making processes more understandable and interpretable, which is crucial for building trust and accountability in AI systems.

- Challenges in interpreting complex AI models: The intricate architecture of some AI models makes it challenging to trace the logic behind their conclusions.

- The need for transparency in AI algorithms and decision-making processes: Understanding how AI systems arrive at their decisions is essential for identifying and correcting errors and biases.

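- Techniques for improving AI explainability: Methods like LIME (Local Interpretable Model-agnostic Explanations), which approximates a model around a single input with a simpler interpretable model, and SHAP (SHapley Additive exPlanations), which attributes a prediction to individual features using Shapley values, help to interpret individual predictions.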

- Ethical considerations surrounding the lack of transparency: The inability to understand AI decisions can have serious ethical implications, particularly when those decisions impact individuals' lives.
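To make the idea behind Shapley-value attribution concrete, here is a small sketch that computes exact Shapley values for a toy scoring function by averaging each feature's marginal contribution over every feature ordering. It does not use the SHAP library, which relies on approximations to scale to real models. The scoring function, the feature names, and the all-zero baseline are hypothetical.

```python
from itertools import permutations

def shapley_values(model, x, baseline):
    """Exact Shapley values: average marginal contribution of each feature
    over all orderings in which features switch from baseline to x."""
    n = len(x)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous_output = model(current)
        for i in order:
            current[i] = x[i]  # reveal feature i
            output = model(current)
            totals[i] += output - previous_output
            previous_output = output
    return [total / len(orderings) for total in totals]

def toy_score(features):
    """Hypothetical scoring model with an income * years interaction."""
    income, debt, years = features
    return 2 * income - 3 * debt + income * years

feature_names = ["income", "debt", "years"]
x = [3, 1, 2]
baseline = [0, 0, 0]

attributions = shapley_values(toy_score, x, baseline)
for name, value in zip(feature_names, attributions):
    print(f"{name}: {value:+.2f}")
```

The attributions add up to the difference between the model's output for `x` and for the baseline, and the interaction term is split evenly between the two features involved. Enumerating every ordering grows factorially with the number of features, so this exact approach is only practical for very small examples.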

Addressing the Ethical Implications of AI Limitations

Acknowledging the limitations of AI learning necessitates a commitment to responsible AI practices. Developers, users, and policymakers all share the responsibility of mitigating the risks associated with these limitations. This involves promoting transparency, accountability, and fairness in AI systems.

- Accountability for AI-driven decisions: Establishing clear lines of accountability for the decisions made by AI systems is crucial.

- The role of ethical guidelines and frameworks in AI development: Robust ethical guidelines and regulatory frameworks are needed to guide the development and deployment of responsible AI.

- The importance of ongoing monitoring and evaluation of AI systems: Continuous monitoring is necessary to identify and address potential biases and limitations.

- The societal impact of unchecked AI development: Failing to address the limitations of AI can have significant societal consequences, exacerbating existing inequalities and undermining trust in technology.

Conclusion

The limitations of AI learning, namely data bias, generalization challenges, lack of explainability, and the ethical implications that follow from them, are significant hurdles in the pursuit of beneficial AI. Acknowledging and understanding these limitations is the foundation for responsible AI development and for building more robust, fair, and trustworthy AI systems. Learn more about ethical AI development and contribute to the conversation today!
