The Ethics Of AI Therapy: Surveillance And The Erosion Of Privacy

H2: Data Collection and Algorithmic Bias in AI Therapy

The promise of AI in therapy hinges on the collection and analysis of vast amounts of personal data. Understanding the extent of this data collection is crucial to evaluating the ethical implications.

H3: The Extent of Data Collection

AI therapy apps can collect a wide array of sensitive information, including:

- Voice recordings: Capturing the nuances of tone and inflection in a patient's speech.

- Text messages: Analyzing the content and style of written communication.

- Behavioral patterns: Tracking engagement, response times, and other usage metrics.

- Biometric data: Some apps may even collect physiological data like heart rate variability.

The sheer volume of this personal data raises significant concerns about potential misuse, especially given how little transparency there often is about how the information is used, stored, and protected. The risk of data breaches and unauthorized access is a serious ethical and legal concern.

H3: Algorithmic Bias and its Impact

Algorithms used in AI therapy learn from training datasets. If those datasets reflect existing societal biases (e.g., racial, gender, socioeconomic), the resulting models can perpetuate and even amplify these biases. This can lead to:

- Inaccurate diagnoses: Algorithms may misinterpret symptoms or behaviors based on pre-existing biases in the training data.

- Inequitable treatment recommendations: Patients from marginalized groups may receive less effective or less appropriate care.

- Reinforcement of harmful stereotypes: The algorithm’s output may reinforce negative perceptions or assumptions about certain patient populations.

Mitigating algorithmic bias requires diverse and representative training datasets, coupled with rigorous testing and validation of fairness and accuracy across patient groups.
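One concrete form of such testing is to compare error rates across patient groups. The Python sketch below computes accuracy and false-negative rate (the share of true cases the model missed) per group and flags a large gap. The `Prediction` record, the group labels, the toy data, and the 0.1 tolerance are all hypothetical; a real audit would use clinically validated labels and several fairness metrics, which can conflict with one another.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Prediction:
    group: str       # demographic group label (hypothetical field)
    actual: bool     # condition confirmed by a clinician
    predicted: bool  # condition flagged by the model


def per_group_rates(preds):
    """Return {group: (accuracy, false_negative_rate)} for each group."""
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "pos": 0, "missed": 0})
    for p in preds:
        c = counts[p.group]
        c["n"] += 1
        c["correct"] += int(p.actual == p.predicted)
        if p.actual:
            c["pos"] += 1
            c["missed"] += int(not p.predicted)
    rates = {}
    for group, c in counts.items():
        accuracy = c["correct"] / c["n"]
        # Share of true cases the model missed; 0.0 if the group has no positives.
        fnr = c["missed"] / c["pos"] if c["pos"] else 0.0
        rates[group] = (accuracy, fnr)
    return rates


def largest_gap(rates, metric_index):
    """Spread between the best- and worst-served group for one metric."""
    values = [r[metric_index] for r in rates.values()]
    return max(values) - min(values)


# Toy data, invented for illustration only.
preds = [
    Prediction("A", True, True), Prediction("A", True, True),
    Prediction("A", False, False), Prediction("A", False, False),
    Prediction("B", True, False), Prediction("B", True, True),
    Prediction("B", False, False), Prediction("B", False, True),
]
rates = per_group_rates(preds)
FNR_TOLERANCE = 0.1  # hypothetical audit threshold
if largest_gap(rates, 1) > FNR_TOLERANCE:
    print("Missed-diagnosis rate differs across groups:", rates)
```

Here group B's true cases are missed half the time while group A's never are, which is exactly the kind of disparity that "inaccurate diagnoses" and "inequitable treatment" describe.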

H2: Surveillance and the Loss of Confidentiality

The constant monitoring inherent in AI therapy raises profound concerns about surveillance and the erosion of the therapeutic relationship, which fundamentally relies on trust and confidentiality.

H3: The Nature of AI Surveillance

AI therapy apps, by their very nature, engage in a form of constant surveillance. This raises several crucial questions:

- Where is the data stored, and who has access to it?

- What safeguards are in place to prevent unauthorized access or breaches?

- How does this constant monitoring affect a patient's willingness to be vulnerable and self-disclose?

The potential for misuse and the psychological impact of feeling constantly monitored can significantly undermine the therapeutic process and damage the patient-therapist relationship.

H3: Legal and Ethical Frameworks

Existing legal and ethical frameworks, such as HIPAA in the US and the GDPR in the European Union, aim to protect patient privacy and confidentiality. However, these regulations may not adequately address the unique challenges posed by AI therapy:

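- HIPAA applies only to covered entities (such as healthcare providers and health plans) and their business associates, so many direct-to-consumer AI therapy apps fall outside its scope, and its application to AI-generated data remains unclear.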

- GDPR, while robust, faces challenges in enforcing data protection across international borders, especially when data is processed by multiple entities.

- Technology is evolving faster than legislators can respond.

These gaps in existing legislation highlight the urgent need for updated and more comprehensive regulatory frameworks.

H2: Mitigating the Risks: Towards Ethical AI Therapy

Addressing the ethical concerns surrounding AI therapy requires a multi-pronged approach.

H3: Data Minimization and Anonymization

Reducing the amount of data collected and protecting patient identities are paramount. Techniques include:

- Differential privacy: Adding calibrated random noise to data or query results so that aggregate trends remain visible while no individual's contribution can be reliably inferred.

- Federated learning: Training models on data that stays on users' devices or local servers and sharing only model updates, so sensitive raw data need not be centralized.

- Data encryption and secure storage: Implementing robust security measures to protect data from unauthorized access.
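To make differential privacy less abstract, here is a minimal Python sketch of the Laplace mechanism, one standard way to achieve it, applied to a single counting query. It assumes a trusted operator holds the raw data, each patient contributes exactly one yes/no flag, and the "poor sleep" statistic is hypothetical. Python's `random` module is used only for illustration; production systems should rely on a vetted differential privacy library, because naive floating-point noise sampling can leak information, and every released query consumes part of the privacy budget.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(flags, epsilon: float, rng: random.Random) -> float:
    """Release a count of True values with epsilon-differential privacy.

    Assumes one flag per patient, so adding or removing a patient changes
    the count by at most 1 (sensitivity 1); Laplace scale 1/epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for flag in flags if flag)
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical example: 40 of 100 users reported poor sleep this week.
reports = [True] * 40 + [False] * 60
rng = random.Random(7)
print(round(private_count(reports, epsilon=0.5, rng=rng), 2))
```

A smaller `epsilon` means more noise and stronger privacy; the released number hovers around the true count of 40 without revealing whether any particular user is among them.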
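Federated learning can be illustrated with federated averaging (FedAvg), one common algorithm in this family. In the Python sketch below, each hypothetical client fits a one-parameter model `y = w * x` on data that never leaves it, and the server only sees and averages the returned weights, weighted by each client's sample count. The clients, data, learning rate, and round counts are invented for illustration. Note that model updates can still leak information about the underlying data, so FedAvg is often combined with secure aggregation or differential privacy.

```python
def local_update(w: float, data, lr: float = 0.01, epochs: int = 5) -> float:
    """Gradient descent on one client's (x, y) pairs for the model y = w * x.

    Runs on the client; only the updated weight is sent back to the server.
    """
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_averaging(w: float, clients, rounds: int = 20) -> float:
    """Server loop: broadcast w, collect local weights, average by sample count."""
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        local_weights = [local_update(w, data) for data in clients]
        w = sum(lw * len(data) for lw, data in zip(local_weights, clients)) / total
    return w


# Two hypothetical clients whose private data both follow y = 3x.
client_a = [(1.0, 3.0), (2.0, 6.0)]
client_b = [(3.0, 9.0), (4.0, 12.0), (5.0, 15.0)]
print(round(federated_averaging(0.0, [client_a, client_b]), 4))
```

The shared model converges to the pattern both clients hold (a slope near 3) even though neither client's records were pooled on a central server.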

H3: Transparency and Informed Consent

Transparency about data usage and meaningful informed consent from patients are essential. This includes:

- Clear and easily understandable privacy policies.

- Opportunities for patients to review and understand what data is being collected and how it’s used.

- Mechanisms for patients to access and control their own data.
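The consent and control mechanisms listed above can be enforced in software rather than left to policy text. The Python sketch below is a hypothetical `ConsentStore`, not any real product's API: every piece of data is tagged with the purpose the patient consented to, storage for an unconsented purpose is refused, withdrawing consent deletes the data gathered under it, and patients can export or erase everything held about them, mirroring the access and erasure rights the GDPR grants. Purpose names and data categories are invented for illustration, and a real system would also need authentication, durable storage, and deletion from backups.

```python
from dataclasses import dataclass, field


@dataclass
class PatientRecord:
    consents: set = field(default_factory=set)   # purposes the patient opted into
    entries: list = field(default_factory=list)  # (purpose, category, value) tuples


class ConsentStore:
    """Hypothetical store that ties every piece of data to a consented purpose."""

    def __init__(self):
        self._records = {}

    def grant(self, patient_id, purpose):
        self._records.setdefault(patient_id, PatientRecord()).consents.add(purpose)

    def revoke(self, patient_id, purpose):
        """Withdraw consent and delete data collected under that purpose."""
        record = self._records.get(patient_id)
        if record is not None:
            record.consents.discard(purpose)
            record.entries = [e for e in record.entries if e[0] != purpose]

    def store(self, patient_id, category, value, purpose):
        """Keep data only if the patient consented to this purpose."""
        record = self._records.get(patient_id)
        if record is None or purpose not in record.consents:
            raise PermissionError(f"no consent for purpose {purpose!r}")
        record.entries.append((purpose, category, value))

    def export(self, patient_id):
        """Return everything held about the patient, for review or download."""
        record = self._records.get(patient_id, PatientRecord())
        return {"consents": sorted(record.consents), "entries": list(record.entries)}

    def erase(self, patient_id):
        """Delete the patient's consents and data entirely."""
        self._records.pop(patient_id, None)


store = ConsentStore()
store.grant("patient-1", "treatment")
store.store("patient-1", "mood_log", "anxious", purpose="treatment")
try:
    # Refused: the patient never agreed to model training.
    store.store("patient-1", "voice_clip", "clip-001", purpose="model_training")
except PermissionError as err:
    print(err)
print(store.export("patient-1"))
```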

H3: Regulatory Oversight and Accountability

Stronger regulations and industry standards are needed to ensure responsible AI development and implementation. This requires:

- Independent audits and assessments of AI therapy systems for bias and security vulnerabilities.

- Clear mechanisms for redress and accountability in cases of privacy violations.

- International collaborations to establish shared ethical guidelines.
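Audits and accountability depend on a trustworthy record of who accessed which patient's data. One common technique is a hash-chained, append-only access log, sketched below in Python using only the standard library. Each entry commits to the SHA-256 hash of the previous one, so editing or deleting an earlier entry breaks verification. The `AccessLog` class and the accessor and action names are hypothetical. Truncating the newest entries is not detected unless the latest hash is also handed to an independent auditor.

```python
import hashlib
import json
import time

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of an entry's fields."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AccessLog:
    """Append-only log where each entry commits to the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def record(self, accessor, patient_id, action, timestamp=None):
        body = {
            "accessor": accessor,
            "patient_id": patient_id,
            "action": action,
            "timestamp": time.time() if timestamp is None else timestamp,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """True if every entry is unaltered and still linked to its predecessor."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AccessLog()
log.record("dr_smith", "patient-1", "read_transcript", timestamp=1.0)
log.record("analytics_job", "patient-1", "bulk_export", timestamp=2.0)
print(log.verify())                  # True
log.entries[0]["action"] = "none"    # simulate after-the-fact tampering
print(log.verify())                  # False
```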

H2: Conclusion

The ethics of AI therapy, particularly its potential for surveillance and privacy erosion, demand careful consideration. Data collection practices, algorithmic bias, and the lack of robust regulation pose significant risks to patient well-being and to the integrity of the therapeutic relationship. However, by prioritizing data minimization, ensuring transparency and informed consent, and implementing strong regulatory oversight, we can move toward a future in which AI therapy enhances, rather than undermines, the therapeutic process. Let's engage in an open dialogue to make ethical AI healthcare a reality, one that places patient privacy and well-being above all else. The future of responsible AI therapy depends on our collective commitment to these principles.

Featured Posts

-

Q2

May 16, 2025

Q2

May 16, 2025 -

Proyek Tembok Laut Raksasa Analisis Peran Ahy Dalam Keterlibatan China

May 16, 2025

Proyek Tembok Laut Raksasa Analisis Peran Ahy Dalam Keterlibatan China

May 16, 2025 -

Ge Force Now 21 Nouveaux Jeux Integrent Le Cloud Ce Mois

May 16, 2025

Ge Force Now 21 Nouveaux Jeux Integrent Le Cloud Ce Mois

May 16, 2025 -

Cassie Venturas Testimony Diddy Sex Trafficking Trial Details

May 16, 2025

Cassie Venturas Testimony Diddy Sex Trafficking Trial Details

May 16, 2025 -

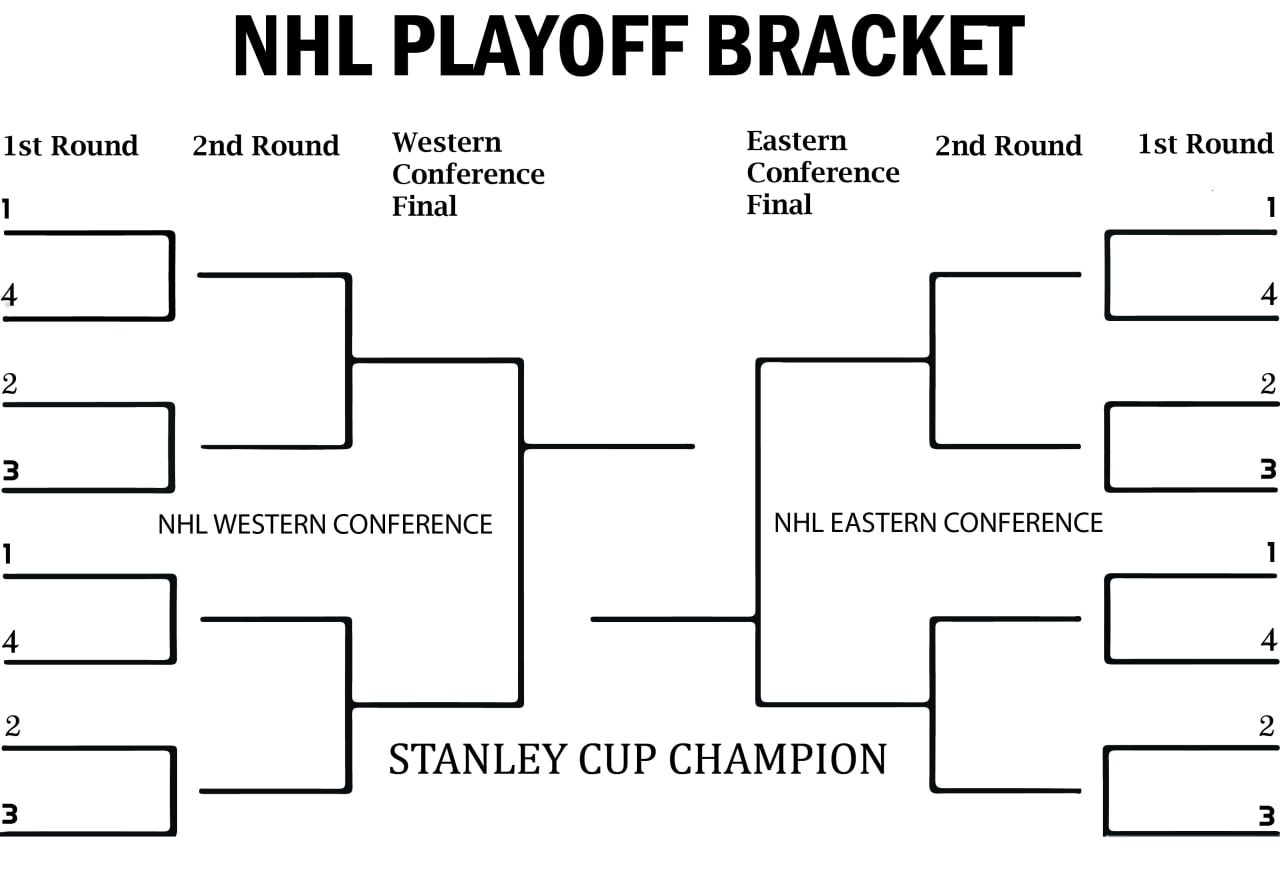

Nba And Nhl Playoffs Best Bets For Round 2

May 16, 2025

Nba And Nhl Playoffs Best Bets For Round 2

May 16, 2025