The First Amendment And AI Chatbots: Character AI Faces Legal Scrutiny

Character AI's Functionality and Potential for First Amendment Conflicts

Character AI generates text that mimics human conversation with remarkable fluency. This seemingly innocuous function, however, opens the door to a wide range of potential First Amendment conflicts. The platform's capacity for generating vast quantities of text, tailored to individual user prompts, makes it susceptible to the creation of offensive, harmful, or even illegal content.

- Examples of potentially problematic content: Users could generate hate speech targeting specific groups, incite violence through persuasive narratives, or create material that infringes copyright. The sheer volume of interactions makes exhaustive monitoring almost impossible.

- User input and platform responsibility: While Character AI itself doesn't "think" or hold beliefs, its output is driven by user prompts. This raises critical questions about the platform's responsibility for the content its users elicit. Is Character AI merely a tool, similar to a printing press, or does it bear some responsibility for the content it facilitates?

- Real-time content moderation challenges: The dynamic nature of conversations within Character AI makes real-time moderation extremely difficult. The speed at which content is generated necessitates sophisticated AI-powered moderation systems, which themselves are prone to error and bias.

Section 230 and its Relevance to AI Chatbots like Character AI

Section 230 of the Communications Decency Act gives online platforms significant legal protection: a provider of an interactive computer service is not treated as the publisher or speaker of information provided by another information content provider. Whether that protection extends to AI-generated content, particularly content produced by sophisticated platforms like Character AI, is hotly debated.

- Arguments for applying Section 230: Proponents argue that Character AI acts as a neutral technology provider, similar to other online platforms. They contend that holding the platform liable for AI-generated content would stifle innovation and unduly burden developers.

- Arguments against applying Section 230: Critics argue that the AI's role in shaping and amplifying user input goes beyond that of a passive platform. The AI's algorithms actively contribute to the creation of the content, blurring the lines between user-generated content and platform-generated content. This calls into question the extent to which Section 230 protection applies.

- Potential legal challenges: The ambiguous legal landscape surrounding Section 230 and AI-generated content creates significant legal risks for Character AI. Lawsuits alleging negligence or complicity in the dissemination of harmful content are plausible.

The First Amendment and the Issue of Algorithmic Bias

Algorithmic bias in AI chatbots poses a significant threat to freedom of speech. If the algorithms used by Character AI reflect existing societal biases, the resulting outputs could be discriminatory or unfair, disproportionately affecting certain groups.

- Examples of potential bias: Character AI's responses might perpetuate harmful stereotypes based on race, gender, religion, or other demographic factors, thereby limiting or distorting the free expression of those groups.

- Developer responsibility for bias mitigation: Developers have a critical responsibility to actively mitigate algorithmic bias. This requires careful curation of training data, rigorous testing for bias, and ongoing monitoring of the platform's output.

- Legal implications of biased outputs: Biased AI outputs could lead to legal challenges and lawsuits alleging discrimination or violation of civil rights.

Future Legal Landscape and Implications for AI Development

The legal landscape surrounding AI chatbots like Character AI is still evolving. The future will likely see increased legal challenges testing the boundaries of freedom of speech in the context of AI-generated content.

- Need for clearer legal frameworks: A more precise legal framework is needed to address the unique challenges posed by AI-generated content. Clear guidelines are required to determine platform responsibility and establish standards for content moderation.

- The role of self-regulation and industry best practices: Self-regulation within the AI industry, coupled with the development of robust industry best practices, will be crucial in addressing the ethical and legal implications of AI chatbots.

- Potential for government regulation: Government regulation of AI chatbots and content moderation remains a possibility, especially if self-regulation proves inadequate.

Conclusion: The First Amendment, AI Chatbots, and the Path Forward for Character AI

Character AI faces significant challenges in balancing freedom of speech with the imperative to prevent harm. The application of Section 230, the mitigation of algorithmic bias, and the evolving legal interpretations of freedom of speech in the digital age all contribute to a complex and uncertain legal landscape. The interplay between the First Amendment and AI chatbots calls for ongoing discussion, careful consideration, and a proactive approach to responsible AI development. Further research into the ongoing legal debates and the development of effective content moderation strategies is crucial for navigating this evolving terrain.

Featured Posts

-

Intikamci Burclar Ihanet Edildiginde Hemen Tepki Verenler

May 23, 2025

Intikamci Burclar Ihanet Edildiginde Hemen Tepki Verenler

May 23, 2025 -

This Mornings Cat Deeley Suffers Wardrobe Malfunction Minutes Before Live Show

May 23, 2025

This Mornings Cat Deeley Suffers Wardrobe Malfunction Minutes Before Live Show

May 23, 2025 -

Todays Update Dylan Dreyers Son Post Operation

May 23, 2025

Todays Update Dylan Dreyers Son Post Operation

May 23, 2025 -

Pryamaya Translyatsiya Rybakina Protiv Eks Tretey Raketki Mira V Turnire Na 4 Milliarda

May 23, 2025

Pryamaya Translyatsiya Rybakina Protiv Eks Tretey Raketki Mira V Turnire Na 4 Milliarda

May 23, 2025 -

Andrew Tate Dubai O Retorno E A Promessa De Mais Videos De Direcao Imprudente

May 23, 2025

Andrew Tate Dubai O Retorno E A Promessa De Mais Videos De Direcao Imprudente

May 23, 2025

Latest Posts

-

2025 Memorial Day Sales Expert Selected Deals And Discounts

May 23, 2025

2025 Memorial Day Sales Expert Selected Deals And Discounts

May 23, 2025 -

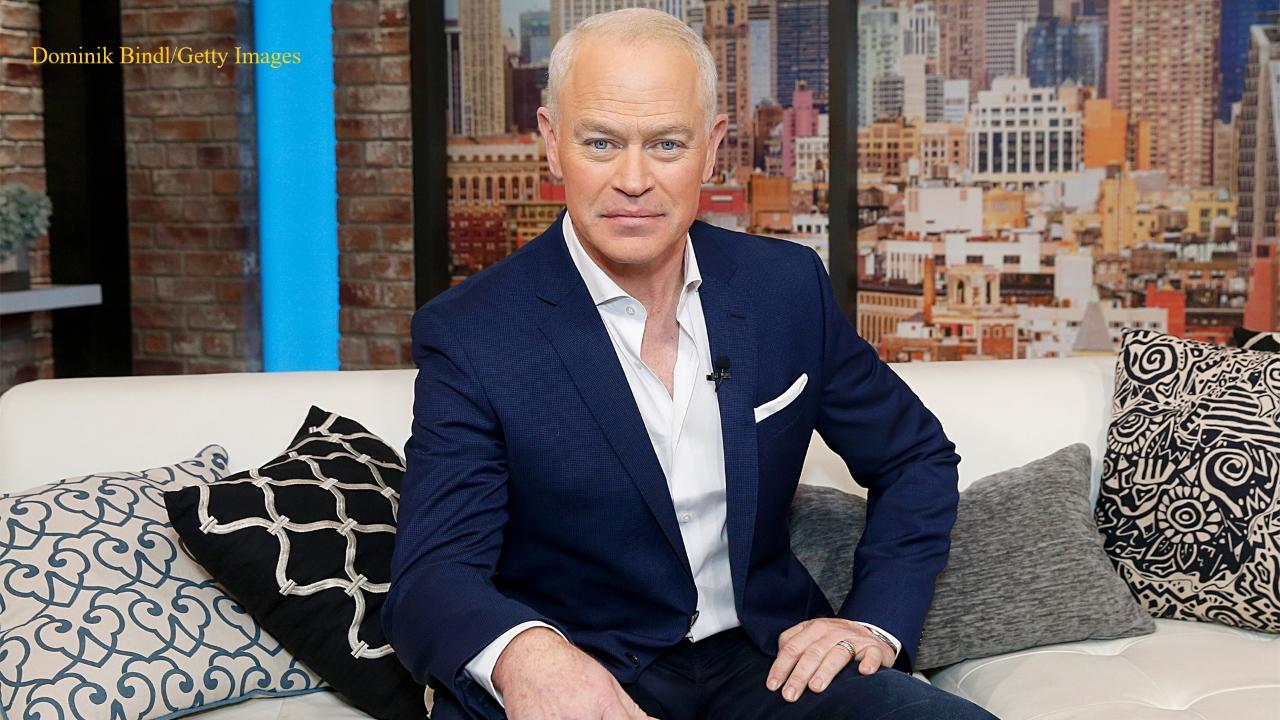

Behind The Scenes Neal Mc Donoughs Preparation For Bull Riding Video

May 23, 2025

Behind The Scenes Neal Mc Donoughs Preparation For Bull Riding Video

May 23, 2025 -

The Last Rodeo An Interview With Neal Mc Donough On Faith Film And Facing Challenges

May 23, 2025

The Last Rodeo An Interview With Neal Mc Donough On Faith Film And Facing Challenges

May 23, 2025 -

Tulsa King Season 3 Will Neal Mc Donough Be Back Sylvester Stallones New Look And Latest News

May 23, 2025

Tulsa King Season 3 Will Neal Mc Donough Be Back Sylvester Stallones New Look And Latest News

May 23, 2025 -

Florida Store Hours Memorial Day 2025 Publix Winn Dixie And More

May 23, 2025

Florida Store Hours Memorial Day 2025 Publix Winn Dixie And More

May 23, 2025