The Reality Of AI Learning: Navigating The Challenges Of Responsible AI

Data Bias and Fairness in AI Learning

The promise of AI hinges on its ability to make unbiased decisions. However, the reality is that AI systems are only as good as the data they are trained on. The problem of AI bias arises when biased training data leads to discriminatory outcomes. This is not simply a theoretical concern; it has real-world implications.

The Problem of Biased Datasets

Consider facial recognition systems that struggle to identify individuals with darker skin tones, or loan-approval algorithms that systematically disadvantage certain demographic groups. These are not isolated incidents but rather symptoms of a deeper problem: biased datasets.
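To make the loan example concrete, one common way to detect this kind of disparity is to compare selection rates (the share of applicants approved) across groups. The sketch below assumes binary decisions (1 = approved) and one group label per applicant. The function names and toy data are hypothetical, and demographic parity is only one of several fairness metrics, which can conflict with one another.

```python
from collections import defaultdict

def selection_rates(decisions, groups):
    """Fraction of positive decisions (e.g. loan approvals) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += int(decision)
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_gap(decisions, groups):
    """Largest difference in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(decisions, groups).values()
    return max(rates) - min(rates)

def disparate_impact_ratio(decisions, groups):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions, groups).values()
    highest = max(rates)
    return min(rates) / highest if highest > 0 else 1.0

# Hypothetical toy data: 1 = approved, 0 = declined.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(selection_rates(decisions, groups))         # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(decisions, groups))  # 0.5
```

Here group A is approved 75% of the time and group B only 25%, giving a disparate impact ratio of about one third. A ratio below 0.8 (the "four-fifths rule" from US employment-selection guidelines) is a common, if rough, warning sign that warrants closer investigation.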

- Sources of bias in data collection and annotation: Bias can creep in at various stages, from the initial data collection methods to the way data is labeled and annotated. For example, a system trained on images that mostly feature light-skinned individuals is likely to perform poorly on people with darker skin tones. Similarly, annotators' assumptions and biased labeling guidelines can encode existing societal biases directly into the labels.

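- Techniques for detecting and mitigating bias: Addressing algorithmic bias requires proactive measures. Detection usually starts with disaggregated evaluation: measuring error rates and outcomes separately for each demographic group instead of relying on a single overall accuracy figure. For mitigation, techniques like data augmentation and re-sampling (increasing the representation of underrepresented groups) and fairness-aware learning (training algorithms under explicit fairness constraints) are crucial.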

- The importance of diverse and representative datasets: The key to mitigating bias is to ensure that training data is diverse and representative of the population the AI system will interact with. This requires careful planning, deliberate data collection strategies, and ongoing monitoring for bias after deployment.
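Alongside augmentation, a simple pre-processing mitigation is to re-weight existing training examples rather than collect new ones. The sketch below implements the reweighing scheme commonly attributed to Kamiran and Calders: each (group, label) combination gets the weight it would need for group membership and outcome to look statistically independent. The function names and toy data are hypothetical, and reweighting only corrects the imbalance it measures; it cannot add information the dataset never captured.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Per-example training weights w(g, y) = P(g) * P(y) / P(g, y).

    Combinations rarer than independence would predict get weight > 1;
    over-represented combinations get weight < 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    weights = []
    for g, y in zip(groups, labels):
        expected = group_counts[g] * label_counts[y] / n  # count expected under independence
        weights.append(expected / joint_counts[(g, y)])
    return weights

def weighted_positive_rate(groups, labels, weights, group):
    """Share of positive labels within one group, after weighting."""
    pairs = [(y, w) for g, y, w in zip(groups, labels, weights) if g == group]
    return sum(w for y, w in pairs if y == 1) / sum(w for _, w in pairs)

# Hypothetical toy data: group A has mostly positive labels, group B mostly negative.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
print(weights)
print(weighted_positive_rate(groups, labels, weights, "A"))
print(weighted_positive_rate(groups, labels, weights, "B"))
```

After weighting, both groups have a positive rate of 0.5 (up to floating-point rounding), down from 0.75 and 0.25. The weights can then be passed to any learner that supports per-sample weights, for example through the `sample_weight` argument that many scikit-learn estimators accept in `fit`.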

Transparency and Explainability in AI Models

Many modern AI models, particularly deep learning models, are often referred to as "black boxes." This lack of transparency presents significant challenges for Responsible AI.

The "Black Box" Problem

The complexity of these models makes it difficult, if not impossible, to understand how they arrive at their decisions. This opacity is problematic, particularly in high-stakes applications.

- The limitations of opaque AI systems in high-stakes applications: In healthcare, for instance, an opaque AI system recommending a treatment without providing a clear rationale could be unacceptable. Similarly, in the criminal justice system, AI-driven sentencing should not operate without transparent and justifiable decision-making processes.

- Techniques for improving model explainability: Fortunately, techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) have been developed to improve model explainability. Rather than exposing a model's internal workings directly, these post-hoc methods estimate how much each input feature contributed to a particular prediction, which makes the model's behavior more interpretable.

- The importance of interpretable AI for building trust and accountability: Explainable AI (XAI) is not just a technical challenge; it is essential for building trust and accountability. Users need to understand how an AI system arrives at its decisions before they can reasonably accept its recommendations, and before they can hold developers accountable for biases or errors.
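SHAP is grounded in Shapley values from cooperative game theory: a feature's attribution is its average marginal contribution to the prediction over all orders in which features could be "added." The sketch below computes these values exactly by brute force for a tiny hypothetical scoring model, under the assumption that a "missing" feature is set to a fixed baseline value. The SHAP library itself uses faster approximations and typically averages over a background dataset, and LIME works differently (it fits a simple surrogate model around one prediction), so treat this as an illustration of the idea only.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley value of each feature for the prediction model(x).

    A feature absent from a coalition is replaced by its baseline value.
    Cost grows as 2**n model calls, so this only suits a handful of features.
    """
    n = len(x)

    def value(coalition):
        point = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(point)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for a coalition of this size that excludes feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

def toy_credit_model(features):
    """Hypothetical scoring model with an interaction between income and savings."""
    income, savings, debt = features
    return 2.0 * income + 3.0 * savings - debt + income * savings

x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_credit_model, x, baseline)
print(phi)
print(sum(phi), toy_credit_model(x) - toy_credit_model(baseline))
```

The attributions come out at approximately [3, 7, -3]: the interaction term's contribution of 2 is split equally between income and savings, and the attributions sum to f(x) - f(baseline) = 7, the "efficiency" property that makes Shapley-based explanations additive.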

Privacy and Security Concerns in AI Systems

The increasing use of AI systems raises significant concerns about privacy and data security. AI systems often rely on vast amounts of data, much of it personal and sensitive.

Protecting Sensitive Data

This data is vulnerable to breaches, misuse, and unauthorized access. The potential consequences can be severe, ranging from identity theft to discrimination.

- Data privacy regulations: Regulations like GDPR (General Data Protection Regulation) and CCPA (California Consumer Privacy Act) aim to protect individual privacy. Adherence to these regulations is crucial for Responsible AI.

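- Techniques for data anonymization and differential privacy: Anonymization removes or generalizes identifying details in a dataset, but it can sometimes be undone by linking the records with other datasets. Differential privacy offers a stronger guarantee by adding carefully calibrated random noise to query results or to training, so that the output reveals little about whether any single individual's data was included. Both help protect sensitive information while still allowing data to be used in AI systems.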

- The importance of robust security measures to prevent unauthorized access: Robust security protocols are paramount to prevent data breaches and protect user privacy. These include secure storage, encryption, and access controls; together, they amount to responsible data handling.
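The core idea of differential privacy can be shown with the Laplace mechanism applied to a counting query. Because adding or removing one person changes a count by at most 1, adding Laplace noise with scale 1/epsilon makes the released count epsilon-differentially private. The sketch below is for illustration only and its function names and data are hypothetical; naive floating-point noise generation like this is known to leak information in edge cases, so real systems should rely on a vetted differential privacy library.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng=None):
    """Release how many records satisfy `predicate` with epsilon-differential privacy.

    A counting query has sensitivity 1 (one person changes it by at most 1),
    so Laplace noise with scale 1/epsilon suffices. Smaller epsilon means more noise.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical records: ages of 100 patients.
ages = [20 + (i % 60) for i in range(100)]
rng = random.Random(42)
print(private_count(ages, lambda age: age >= 65, epsilon=0.5, rng=rng))
```

Each call returns the true count (15 in this data) plus fresh noise. Averaging many releases would recover the true value, which is why every additional query spends part of a finite privacy budget.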

Accountability and Ethical Frameworks for AI

The development and deployment of AI systems require clear guidelines and regulations. Without them, the potential for harm is significant.

Establishing Clear Responsibilities

This requires defining who is responsible for an AI system's behavior and putting mechanisms for accountability in place.

- The role of ethical guidelines and principles in AI development: Ethical guidelines and principles, such as those proposed by the AI Now Institute and the Partnership on AI, offer valuable frameworks for responsible AI development.

- The importance of human oversight and control in AI systems: Human oversight is crucial to ensure that AI systems are used responsibly and ethically. AI should augment human capabilities, not replace human judgment entirely.

- Mechanisms for accountability in case of AI-related harm: Clear mechanisms for accountability are necessary to address any harm caused by AI systems. These include processes for investigating incidents, assigning responsibility, and providing redress.
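Human oversight and accountability both become more practical when decisions are routed and recorded systematically. The sketch below shows one hypothetical pattern, not a standard: scores inside an uncertainty band are deferred to a human reviewer, and every decision is appended to an audit log with the model version and a fingerprint of its inputs so that incidents can be traced later. The thresholds, field names, and the `decide` function are assumptions for illustration.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

@dataclass
class DecisionRecord:
    model_version: str
    input_fingerprint: str
    score: float
    outcome: str  # "approved", "declined" or "needs_human_review"
    timestamp: str

def decide(features, score, model_version, audit_log, review_band=(0.4, 0.6)):
    """Turn a model score into an outcome, deferring uncertain cases to a human,
    and append a JSON audit record for every call."""
    low, high = review_band
    if low <= score <= high:
        outcome = "needs_human_review"
    elif score > high:
        outcome = "approved"
    else:
        outcome = "declined"
    # SHA-256 of the canonicalized inputs lets investigators match a log entry to a
    # case. It is not anonymization: low-entropy inputs can be guessed and re-hashed.
    fingerprint = hashlib.sha256(
        json.dumps(features, sort_keys=True).encode("utf-8")
    ).hexdigest()
    record = DecisionRecord(model_version, fingerprint, score, outcome,
                            datetime.now(timezone.utc).isoformat())
    audit_log.append(json.dumps(asdict(record)))
    return outcome

audit_log = []
print(decide({"income": 52000, "debt": 8000}, 0.83, "credit-model-v3", audit_log))  # approved
print(decide({"income": 31000, "debt": 9000}, 0.52, "credit-model-v3", audit_log))  # needs_human_review
print(len(audit_log))  # 2
```

Keeping the log as append-only records makes the decision history reviewable after the fact; in practice it would be written to tamper-resistant storage rather than an in-memory list.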

Conclusion

The challenges of developing Responsible AI are multifaceted and interconnected. Data bias, lack of transparency, privacy concerns, and the absence of robust ethical frameworks all pose significant hurdles. The future of AI hinges on our commitment to Responsible AI: by acknowledging and addressing these challenges, we can harness the transformative power of AI while mitigating its risks and building a more equitable and just society.

Featured Posts

-

Company Liability For Algorithmic Radicalization Leading To Mass Shootings

May 31, 2025

Company Liability For Algorithmic Radicalization Leading To Mass Shootings

May 31, 2025 -

Remembering Bernard Kerik Nycs Post 9 11 Leadership

May 31, 2025

Remembering Bernard Kerik Nycs Post 9 11 Leadership

May 31, 2025 -

Dragon Den Star Sues Competitor Over Stolen Puppy Toilet Invention

May 31, 2025

Dragon Den Star Sues Competitor Over Stolen Puppy Toilet Invention

May 31, 2025 -

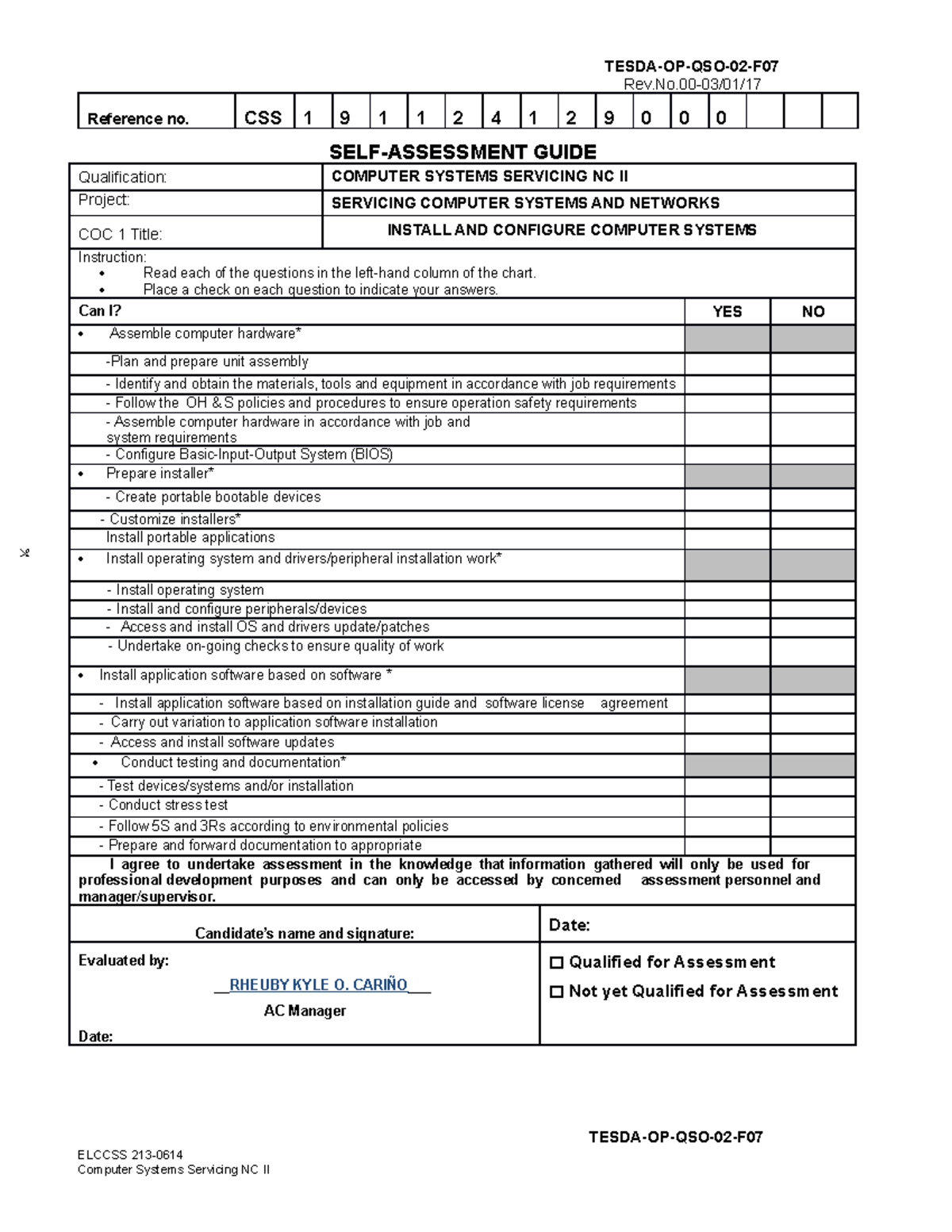

Is This The Good Life For You A Self Assessment Guide

May 31, 2025

Is This The Good Life For You A Self Assessment Guide

May 31, 2025 -

Donate And Bid 2025 Love Moto Stop Cancer Online Auction

May 31, 2025

Donate And Bid 2025 Love Moto Stop Cancer Online Auction

May 31, 2025