Understanding AI's Limitations: Moving Towards Responsible AI Development

Bias and Fairness in AI Systems

AI systems, powerful as they are, inherit the biases present in the data they are trained on. Algorithmic bias, a significant concern in responsible AI development, arises when an AI system produces unfair or discriminatory outcomes, most often because its training data is skewed or unrepresentative. Such bias can reflect and even amplify existing societal inequalities.

For example, facial recognition systems have demonstrated higher error rates for individuals with darker skin tones, reflecting the underrepresentation of these groups in the training datasets. Similarly, AI-powered loan approval systems may disproportionately deny loans to certain demographic groups due to biases embedded in historical loan data.
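Disparities like these can be made measurable by comparing error rates across groups. The following is a minimal sketch, not a complete fairness audit: the function name `error_rate_by_group` and the toy labels, predictions, and group tags are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict

def error_rate_by_group(y_true, y_pred, groups):
    """Return {group: fraction of misclassified examples in that group}."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        if truth != pred:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}

# Hypothetical toy data: true labels, model predictions, and a group tag.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = error_rate_by_group(y_true, y_pred, groups)
# Gap between the worst-served and best-served group.
gap = max(rates.values()) - min(rates.values())

print(rates)  # {'a': 0.0, 'b': 0.5}
print(gap)    # 0.5
```

A large gap does not by itself prove unfairness, but it flags where a closer look at the training data and the model is warranted.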

Mitigating AI bias requires a multi-pronged approach:

- Diverse and Representative Data: Increasing the diversity and representativeness of training datasets is paramount. This involves actively seeking out and incorporating data from underrepresented groups.

- Algorithmic Fairness Techniques: Employing algorithms specifically designed to mitigate bias, such as fairness-aware machine learning models, is crucial.

- Diverse Development Teams: Building AI systems requires diverse teams that can identify and address potential biases throughout the development lifecycle. A lack of diverse perspectives can lead to overlooked biases.
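As one illustration of a fairness technique, reweighing is a pre-processing approach from the fairness literature: each training example gets a weight so that group membership and label look statistically independent in the weighted data. The sketch below assumes binary labels and a single group attribute. The function names and toy data are hypothetical, and this is one option among many rather than a recommended default.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels (label == 1) within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(
        w for g, y, w in zip(groups, labels, weights) if g == group and y == 1
    )
    return positive / total

# Hypothetical toy data: group "a" has mostly positive labels, "b" mostly negative.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "a")
rate_b = weighted_positive_rate(groups, labels, weights, "b")
print(rate_a, rate_b)  # both are approximately 0.5 after reweighing
```

The weights would then be passed to a learner that supports per-sample weights. Rare combinations, such as positive labels in group "b", are weighted up, and common ones are weighted down.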

Addressing AI bias is not merely a technical challenge; it's a fundamental aspect of ethical AI and responsible AI development. Transparent and explainable AI models further support efforts to identify and correct biases.

Data Dependency and Limitations

AI's power depends on access to vast amounts of high-quality data. However, such data is often scarce, costly, and difficult to obtain. This data dependency presents significant limitations:

- Data Scarcity: Many real-world problems lack sufficient data for training effective AI models.

- Data Quality: Inaccurate, incomplete, or inconsistent data can lead to unreliable and biased AI systems.

- Data Accessibility: Accessing relevant data can be restricted by privacy regulations, data ownership issues, or simply the cost of acquisition and cleaning.

- Data Privacy and Security: The use of personal data in AI raises significant privacy and security concerns. Data breaches can have severe consequences.
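Some data quality problems can be caught with simple checks before training. The sketch below counts missing values and exact duplicate rows in a list of records. The function name `audit_records`, the field names, and the toy rows are hypothetical, and a real audit would cover far more (value ranges, label noise, consistency across sources).

```python
def audit_records(records, required_fields):
    """Count missing values per field and exact duplicate rows."""
    missing = {field: 0 for field in required_fields}
    seen = set()
    duplicates = 0
    for record in records:
        for field in required_fields:
            # Treat absent keys, None, and empty strings as missing.
            if record.get(field) in (None, ""):
                missing[field] += 1
        key = tuple(record.get(field) for field in required_fields)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return {"rows": len(records), "missing": missing, "duplicates": duplicates}

# Hypothetical toy records.
records = [
    {"age": 34, "income": 52000},
    {"age": None, "income": 48000},
    {"age": 34, "income": 52000},
    {"age": 29, "income": ""},
]

report = audit_records(records, ["age", "income"])
print(report)
# {'rows': 4, 'missing': {'age': 1, 'income': 1}, 'duplicates': 1}
```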

Supervised learning, a dominant approach in AI, relies heavily on labeled datasets. Because labeling is expensive and real-world data is often noisy, imbalanced, or only partially labeled, this approach is hard to apply in many settings. Addressing these data limitations requires exploring alternative approaches, including:

- Data Augmentation Techniques: Creating synthetic data to supplement real-world datasets.

- Unsupervised and Semi-supervised Learning: Developing models that can learn from unlabeled or partially labeled data.
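To make the semi-supervised idea concrete, here is a minimal sketch of self-training (also called pseudo-labeling): a model trained on the few labeled points labels the unlabeled points it is confident about, then retrains on the enlarged set. The nearest-centroid classifier, the one-dimensional data, and the `margin` threshold are simplifying assumptions for illustration, not a production recipe.

```python
def centroid(points):
    """Mean of a list of numbers."""
    return sum(points) / len(points)

def self_train(labeled, unlabeled, margin=1.0):
    """labeled: list of (x, label) with labels 0 or 1; unlabeled: list of x.

    Repeatedly pseudo-labels points that are at least `margin` closer to one
    class centroid than to the other, until no confident points remain.
    """
    labeled = list(labeled)
    remaining = list(unlabeled)
    while remaining:
        c0 = centroid([x for x, y in labeled if y == 0])
        c1 = centroid([x for x, y in labeled if y == 1])
        confident, uncertain = [], []
        for x in remaining:
            d0, d1 = abs(x - c0), abs(x - c1)
            if abs(d0 - d1) >= margin:
                confident.append((x, 0 if d0 < d1 else 1))
            else:
                uncertain.append(x)
        if not confident:
            break  # nothing left that the model is sure about
        labeled.extend(confident)
        remaining = uncertain
    return labeled, remaining

# Hypothetical toy data: two labeled points and four unlabeled ones.
labeled, remaining = self_train(
    [(0.0, 0), (10.0, 1)], [1.0, 2.0, 9.0, 5.2]
)
print(labeled)    # the original two points plus three pseudo-labeled ones
print(remaining)  # [5.2] stays unlabeled: it sits between the two classes
```

Leaving ambiguous points unlabeled is a deliberate choice here: confidently wrong pseudo-labels would reinforce the model's own mistakes.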

Explainability and Interpretability Challenges

Many sophisticated AI models, particularly deep learning models, operate as "black boxes." Understanding how these complex models arrive at their decisions can be incredibly difficult, raising significant concerns regarding accountability and trust. This lack of transparency is a major obstacle in responsible AI development.

Explainable AI (XAI) focuses on developing more transparent and interpretable AI systems. Techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) aim to provide insights into model decision-making. However, these techniques typically explain individual predictions through approximations rather than exposing a model's full reasoning, so they are not a panacea, and further research is needed.
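SHAP builds on Shapley values from cooperative game theory, which split a prediction among the input features. The sketch below computes exact Shapley values by enumerating every feature subset, which is only feasible for a handful of features. It does not use the SHAP library itself, and the toy model, the instance, and the all-zero baseline are hypothetical. "Removing" a feature is modeled here by replacing it with its baseline value, which is one common convention among several.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, instance, baseline):
    """Exact Shapley values of each feature for model(instance).

    Features outside a coalition are set to their baseline value.
    """
    n = len(instance)

    def value(subset):
        x = [instance[i] if i in subset else baseline[i] for i in range(n)]
        return model(x)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis

def toy_model(x):
    # Hypothetical model: two linear terms plus one interaction term.
    return 2.0 * x[0] + 1.0 * x[1] + x[0] * x[2]

instance = [1.0, 3.0, 2.0]
baseline = [0.0, 0.0, 0.0]

phis = shapley_values(toy_model, instance, baseline)
print(phis)  # approximately [3.0, 3.0, 1.0]
```

The attributions sum to the difference between the prediction for the instance (7.0) and for the baseline (0.0), and the interaction term is split equally between the two features that take part in it.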

The importance of XAI is multifaceted:

- Debugging and Error Identification: Understanding model decisions is critical for identifying and correcting errors.

- Building Trust and Acceptance: Transparency fosters trust among users and stakeholders.

- Regulatory Compliance: Increasingly, regulations require explainability in high-stakes applications of AI.

The Limits of Current AI Capabilities

While AI has achieved remarkable feats, current AI systems are still far from possessing human-level intelligence. They lack crucial capabilities:

- Common Sense Reasoning: AI struggles with tasks requiring common sense understanding of the world.

- Creativity and Innovation: While AI can generate creative outputs, it often lacks genuine originality and innovative thinking.

- Emotional Intelligence: AI systems typically lack the ability to understand and respond to human emotions.

The distinction between narrow AI (designed for specific tasks) and general AI (hypothetical AI with human-level intelligence) highlights the significant gap between current capabilities and the aspirational goal of artificial general intelligence (AGI).

Embracing Responsible AI Development

The limitations discussed above underscore the need for a responsible approach to AI development. Addressing bias, data limitations, and explainability challenges is not merely desirable; it is essential for ensuring that AI benefits humanity. Let's work together towards responsible AI, building systems that are ethical, fair, and beneficial for all.

To learn more about responsible AI development, explore resources from organizations such as the AI Now Institute, the Partnership on AI, and OpenAI. Join the movement for responsible AI development and help shape a future where AI serves humanity ethically and effectively.

Featured Posts

-

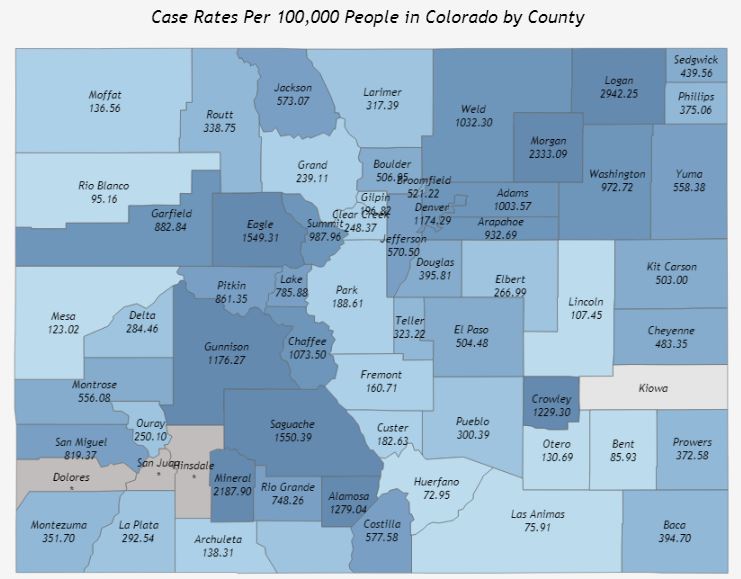

Covid 19 Variant Surge Increased Cases Reported Nationally

May 31, 2025

Covid 19 Variant Surge Increased Cases Reported Nationally

May 31, 2025 -

Selena Gomez Dan Miley Cyrus Perseteruan Berakhir Siap Untuk Kencan Ganda

May 31, 2025

Selena Gomez Dan Miley Cyrus Perseteruan Berakhir Siap Untuk Kencan Ganda

May 31, 2025 -

Assessing The Economic Vulnerability Of Us Colleges To Changes In Chinese Student Numbers

May 31, 2025

Assessing The Economic Vulnerability Of Us Colleges To Changes In Chinese Student Numbers

May 31, 2025 -

Princes Death High Fentanyl Levels Revealed On March 26th

May 31, 2025

Princes Death High Fentanyl Levels Revealed On March 26th

May 31, 2025 -

Alberta Wildfires A Looming Threat To Oil Production

May 31, 2025

Alberta Wildfires A Looming Threat To Oil Production

May 31, 2025