Building Voice Assistants Made Easy: OpenAI's 2024 Developer Tools

Simplified Natural Language Understanding (NLU) with OpenAI's APIs

OpenAI's language model APIs simplify the most challenging aspect of voice assistant development: interpreting user commands. Traditional Natural Language Understanding (NLU) often requires extensive linguistic expertise and complex rule-based systems. OpenAI's approach relies on large pre-trained models instead, which can significantly reduce development time and effort. This means you can focus on the user experience rather than getting bogged down in intricate grammar rules and parsing algorithms.

- Pre-trained models for rapid prototyping: Jumpstart your project with readily available models capable of understanding a wide range of voice commands and intents. These models provide a solid foundation that you can customize further.

- Customizable models for tailored voice interactions: Fine-tune a supported pre-trained model, or steer it with prompts and examples, to match the specific vocabulary and phrasing used in your application. This improves the interpretation of user requests within your voice assistant's domain.

- Reduced reliance on complex grammar rules and parsing: OpenAI's APIs handle the complexities of natural language processing, abstracting away the need for in-depth grammatical knowledge. This makes voice assistant development more accessible to a broader range of developers.

- Examples of APIs used for NLU in voice assistants (e.g., GPT-4, Whisper): OpenAI's GPT-4 and Whisper APIs are particularly well-suited for NLU tasks. GPT-4 excels at understanding context and intent, while Whisper provides robust speech-to-text capabilities, feeding the NLU system with clean, accurate transcriptions.

- Cost-effectiveness compared to traditional NLU solutions: OpenAI's pay-as-you-go pricing model is often significantly more cost-effective than maintaining and scaling traditional NLU infrastructure. This makes voice assistant development financially viable for smaller projects and startups.
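
As a rough illustration of the NLU step described above, the sketch below builds a chat prompt that asks a model to classify a transcribed command and then validates the reply. The intent names, the JSON reply shape, and the helper names `build_intent_messages` and `parse_intent` are assumptions made for this example, not part of any OpenAI API; the model call itself is left out so the code runs offline.

```python
import json

# Hypothetical intent names for a small demo assistant.
INTENTS = ["set_timer", "play_music", "get_weather", "unknown"]


def build_intent_messages(transcript, intents=INTENTS):
    """Return chat messages asking the model to classify one utterance."""
    system = (
        "You classify voice commands. Reply with JSON only, in the form "
        '{"intent": "<name>", "slots": {}} where <name> is one of: '
        + ", ".join(intents)
        + "."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": transcript},
    ]


def parse_intent(reply, intents=INTENTS):
    """Parse the model's reply, falling back to 'unknown' on bad output."""
    fallback = {"intent": "unknown", "slots": {}}
    try:
        data = json.loads(reply)
    except (json.JSONDecodeError, TypeError):
        return fallback
    if not isinstance(data, dict) or data.get("intent") not in intents:
        return fallback
    slots = data.get("slots")
    return {
        "intent": data["intent"],
        "slots": slots if isinstance(slots, dict) else {},
    }
```

The messages from `build_intent_messages` would be sent to a chat model, and the text of its reply passed to `parse_intent`. Validating the reply against a fixed intent list keeps a malformed or unexpected answer from reaching the rest of the assistant.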

Effortless Speech-to-Text and Text-to-Speech Conversion

Converting spoken words into text and vice versa is crucial for any voice assistant. OpenAI offers powerful and accurate speech-to-text and text-to-speech (TTS) capabilities, making this process seamless and efficient. The high accuracy and natural-sounding voices significantly enhance the user experience.

- Whisper API for accurate and efficient speech-to-text conversion: The Whisper API is a game-changer, providing high-quality speech-to-text transcriptions across multiple languages and accents. Its robustness minimizes errors, ensuring your voice assistant understands user input correctly.

- Integration with various TTS engines for a smooth user experience: OpenAI offers its own text-to-speech endpoint with several preset voices, and its text output can also be passed to third-party TTS engines. This lets you choose the voice that best suits your application's branding and target audience.

- Support for multiple languages and accents: OpenAI's APIs support a wide array of languages, making it possible to build voice assistants for diverse global audiences. The support for accents further enhances accessibility.

- Customization options for voice tone and personality: Tailor the voice of your assistant to match your brand identity. Choose from various voices and adjust parameters to fine-tune the tone, creating a unique and engaging user experience.

- Examples of using Whisper and TTS APIs in a voice assistant workflow: The workflow is simple: the Whisper API converts speech to text, the NLU processes this text to determine the user's intent, and then the appropriate response is generated, which is finally converted to speech using a selected TTS engine.
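
The workflow in the last bullet can be sketched as one function that chains three steps. This is a minimal sketch, not a definitive implementation: `run_turn` and `openai_steps` are hypothetical names, and the model and voice names (`whisper-1`, `gpt-4`, `tts-1`, `alloy`) as well as an installed `openai` Python package with an API key in `OPENAI_API_KEY` are assumptions to check against the current documentation. The steps are passed in as callables so the flow can be exercised with stand-ins and no network access.

```python
from typing import Callable


def run_turn(audio_path: str,
             transcribe: Callable[[str], str],
             respond: Callable[[str], str],
             speak: Callable[[str], bytes]) -> dict:
    """Run one voice turn: audio file -> text -> reply text -> reply audio."""
    text = transcribe(audio_path).strip()
    if not text:
        # Nothing was recognised; skip the model call and ask for a repeat.
        reply = "Sorry, I didn't catch that."
    else:
        reply = respond(text)
    return {"heard": text, "reply": reply, "audio": speak(reply)}


def openai_steps(chat_model: str = "gpt-4"):
    """Build the three steps from the OpenAI Python client (assumed installed).

    Model and voice names below are assumptions, not guaranteed defaults.
    """
    from openai import OpenAI  # imported lazily so run_turn works without it

    client = OpenAI()  # reads OPENAI_API_KEY from the environment

    def transcribe(path: str) -> str:
        with open(path, "rb") as f:
            return client.audio.transcriptions.create(
                model="whisper-1", file=f
            ).text

    def respond(text: str) -> str:
        result = client.chat.completions.create(
            model=chat_model,
            messages=[{"role": "user", "content": text}],
        )
        return result.choices[0].message.content

    def speak(text: str) -> bytes:
        audio = client.audio.speech.create(
            model="tts-1", voice="alloy", input=text
        )
        return audio.read()

    return transcribe, respond, speak


# Offline check with stand-in steps (no network needed).
result = run_turn(
    "hello.wav",
    transcribe=lambda path: " what time is it ",
    respond=lambda text: f"You asked: {text}",
    speak=lambda text: text.encode("utf-8"),
)
```

With real credentials, `run_turn("hello.wav", *openai_steps())` would run the same flow against the API. Keeping the steps injectable also makes it easy to swap in a different TTS engine later.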

Streamlined Dialogue Management and Contextual Awareness

Creating a natural and engaging conversation is a key challenge in voice assistant development. OpenAI’s tools provide sophisticated mechanisms for managing dialogues and maintaining context, allowing for fluid and responsive interactions.

- Techniques for maintaining conversation context: OpenAI's models can track the flow of conversation, remembering previous turns and relevant information. This ensures the voice assistant understands the context of user requests, providing more relevant and helpful responses.

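- Handling user interruptions and corrections gracefully: When earlier turns are included in the prompt, the model can interpret corrections such as "no, I meant tomorrow" in context, which makes the conversation feel more natural and less robotic. Detecting a spoken interruption while a response is playing is typically something your application's audio layer has to handle.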

- Managing multiple conversation turns effectively: OpenAI's tools allow the handling of multiple turns in a conversation, building a coherent and engaging dialogue experience.

- Integration with external data sources for enriched responses: Connect your voice assistant to external knowledge bases and APIs for accessing up-to-date information and providing more comprehensive answers.

- Examples of implementing dialogue management using OpenAI's tools: In practice, each request carries the conversation history (or a trimmed or summarized version of it) in the prompt, so the model can maintain context across turns.
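
One way to keep context across turns, as described above, is to store the dialogue as a list of chat messages and resend the most recent ones with every request. The `Conversation` class below is a hypothetical helper written for this article, and the sliding-window trimming is one possible policy among several (summarizing older turns is another).

```python
class Conversation:
    """Keeps a system prompt plus the most recent turns of a dialogue."""

    def __init__(self, system_prompt: str, max_turns: int = 10):
        if max_turns < 1:
            raise ValueError("max_turns must be at least 1")
        self.system = {"role": "system", "content": system_prompt}
        self.max_turns = max_turns  # one turn = user message + assistant reply
        self.history = []

    def add_user(self, text: str) -> None:
        self.history.append({"role": "user", "content": text})

    def add_assistant(self, text: str) -> None:
        self.history.append({"role": "assistant", "content": text})
        # Drop the oldest messages once the window is exceeded.
        self.history = self.history[-2 * self.max_turns:]

    def messages(self) -> list:
        """Message list to send with the next request."""
        return [self.system] + self.history
```

Before each model call, append the new user text with `add_user`, send `messages()`, and record the reply with `add_assistant`. The system prompt is always kept, while older turns fall out of the window to bound prompt size and cost.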

Building Your First Voice Assistant: A Step-by-Step Guide

Building a basic voice assistant using OpenAI's tools is surprisingly straightforward. This high-level guide focuses on ease of use and provides a starting point for your project.

- Setting up the development environment: Choose your preferred programming language (Python is commonly used) and set up the necessary libraries and dependencies. OpenAI provides comprehensive documentation to guide you through this process.

- Integrating the necessary OpenAI APIs: Use OpenAI's client libraries to easily access and interact with the Whisper, GPT-4, and other relevant APIs.

- Coding a simple voice command handler: Write a simple script that uses the APIs to process speech input, understand user intent, and generate responses. Start with a few basic commands and gradually expand functionality.

- Testing and iterating the voice assistant: Test your assistant thoroughly, and iterate on the design based on your findings. OpenAI's APIs facilitate rapid experimentation and prototyping.

- Links to relevant documentation and tutorials: OpenAI provides extensive documentation and tutorials to guide you through the development process. You'll find plenty of resources to help you every step of the way.
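
For the "simple voice command handler" step, a dispatch table is a common starting point. The sketch below assumes the NLU step has already produced an intent name and a dictionary of slots; the intent names, handler functions, and reply wording are all made up for illustration.

```python
from datetime import datetime
from typing import Optional


def handle_time(slots: dict) -> str:
    return "It is " + datetime.now().strftime("%H:%M") + "."


def handle_timer(slots: dict) -> str:
    minutes = slots.get("minutes", 1)
    return f"Timer set for {minutes} minute(s)."


# Hypothetical mapping from intent name to handler; add entries as you grow.
HANDLERS = {
    "get_time": handle_time,
    "set_timer": handle_timer,
}


def handle_command(intent: str, slots: Optional[dict] = None) -> str:
    """Route an intent to its handler, with a fallback for unknown intents."""
    handler = HANDLERS.get(intent)
    if handler is None:
        return "Sorry, I can't help with that yet."
    return handler(slots or {})
```

Starting with two or three handlers and a clear fallback makes it easy to test each command in isolation before expanding the assistant's functionality.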

Conclusion

OpenAI's 2024 developer tools are democratizing voice assistant development. By simplifying complex processes like NLU, speech-to-text, and dialogue management, OpenAI empowers developers of all skill levels to build innovative and user-friendly voice assistants. This reduces development time and cost and opens the door to a new generation of voice-driven applications. Ready to create your own voice assistant? Start exploring OpenAI's tools today.

Featured Posts

-

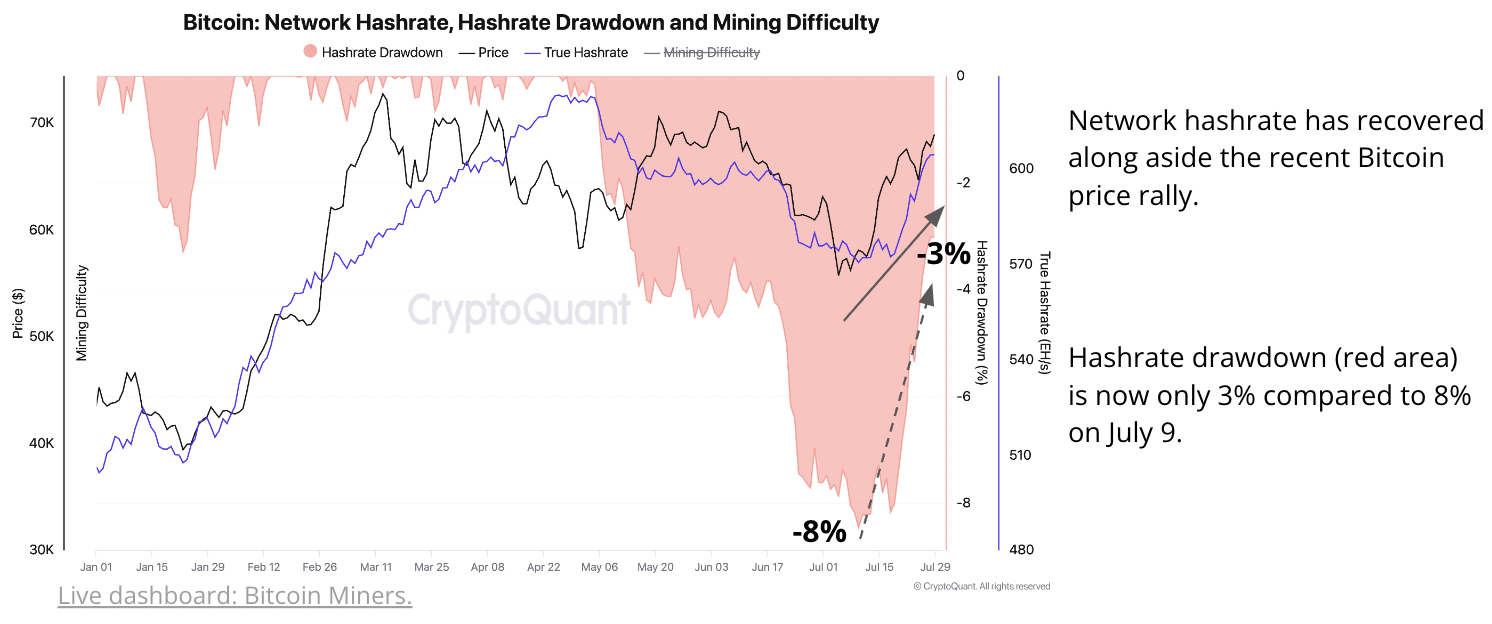

Bitcoin Mining Hashrate Soars A Detailed Analysis Of The Recent Spike

May 09, 2025

Bitcoin Mining Hashrate Soars A Detailed Analysis Of The Recent Spike

May 09, 2025 -

Cybercriminal Nets Millions Through Executive Office365 Account Breaches

May 09, 2025

Cybercriminal Nets Millions Through Executive Office365 Account Breaches

May 09, 2025 -

Investigation Launched After Child Rapist Found Near Massachusetts Daycare

May 09, 2025

Investigation Launched After Child Rapist Found Near Massachusetts Daycare

May 09, 2025 -

Nc Daycare Suspended State Action And Investigation Details

May 09, 2025

Nc Daycare Suspended State Action And Investigation Details

May 09, 2025 -

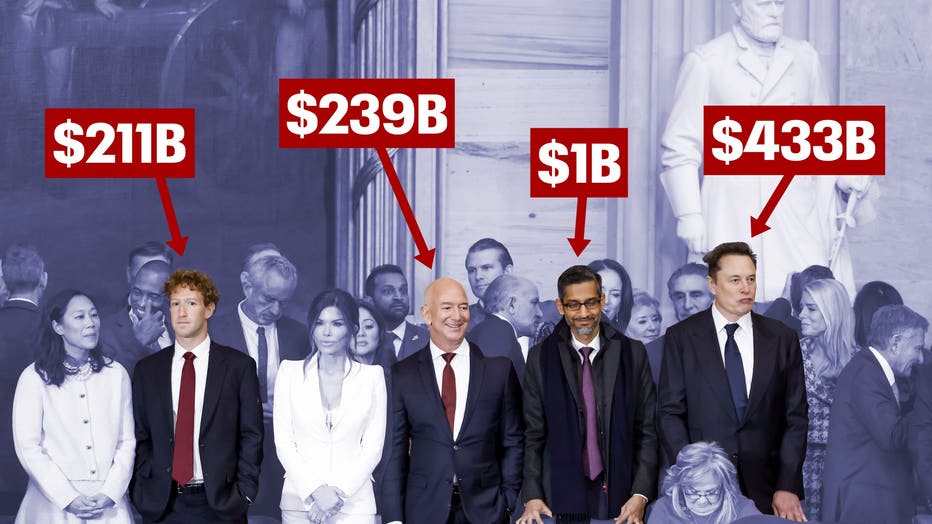

100 Days Of Losses How Trump Inauguration Donations Cost Tech Billionaires 194 Billion

May 09, 2025

100 Days Of Losses How Trump Inauguration Donations Cost Tech Billionaires 194 Billion

May 09, 2025