Mining Meaning From Messy Data: An AI's "Poop" Podcast Project

This article will explore how AI tackles the challenges of extracting valuable insights from messy data, using the "Poop" podcast project as a compelling case study. We'll delve into the unique data source, the AI techniques employed for data cleaning and preprocessing, the insightful analysis performed, and the challenges overcome along the way.

The "Poop" Podcast Project: A Unique Data Source

The "Poop" podcast project, while bearing a humorous title, is a serious exploration of listener engagement and podcast effectiveness. The project's core involves analyzing a wide array of data generated around a fictional podcast focusing on (yes, you guessed it) a surprisingly popular topic: the world of… well, you get the picture. The data collected is far from pristine, presenting a fascinating challenge in messy data analysis.

- Types of data collected: The dataset is richly diverse and inherently messy. We collected three kinds of data: audio transcripts, which required extensive natural language processing; social media interactions (tweets, Facebook posts, comments), which are unstructured text; and listener demographic information from surveys, which is structured but potentially incomplete.

- Challenges in data cleaning and preprocessing: Cleaning this data was no easy feat. The audio transcripts contained noise, inconsistencies in transcription, and numerous instances of missing data. Social media data presented additional complexities including slang, misspellings, and emotional outbursts.

- Why this data source is valuable despite its messiness: Despite its challenges, this diverse dataset provides a uniquely rich understanding of listener engagement. Analyzing this "messy" data allows for a more holistic and nuanced picture of audience response than simpler, cleaner datasets might offer. The project demonstrates the value of analyzing less-than-perfect data, which is actually the norm in the real world.

AI Techniques for Data Cleaning and Preprocessing

To tackle the messiness of our data, we employed a range of sophisticated AI techniques. Our approach focused on turning noisy, raw data into a structured, analyzable format – a crucial step in mining meaning from messy data.

- Natural Language Processing (NLP): NLP was crucial for analyzing the textual data. We used sentiment analysis to gauge listener emotional responses to specific podcast episodes, identifying positive, negative, or neutral opinions.

- Machine Learning Algorithms: Machine learning played a vital role in outlier detection and data imputation. This involved identifying and handling unusual data points (outliers) that might skew results, and filling in missing values (imputation) using predictive models. For the text data itself, we used TF-IDF (term frequency-inverse document frequency) to weight terms and word2vec to create word embeddings, which improved the accuracy of our text analysis.

- Step-by-step data cleaning process:

  - Noise reduction in the podcast audio using signal processing techniques, so that the resulting transcripts are cleaner.

  - Standardization of social media data using lemmatization and stemming.

  - Handling missing data points using k-Nearest Neighbors imputation.

  - Outlier detection using the Isolation Forest algorithm.

- Evaluation Metrics: We used precision, recall, and F1-score to evaluate the effectiveness of our data cleaning and preprocessing. These metrics helped us gauge the accuracy and reliability of the cleaned data before proceeding to the analysis phase.
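Two of the steps listed above, k-Nearest Neighbors imputation and the precision/recall/F1 check, can be illustrated with a minimal pure-Python sketch. This is not the project's actual code: the function names `knn_impute` and `precision_recall_f1`, the survey columns (age, weekly listening hours), and all sample values are assumptions made for illustration. The metrics are shown on an assumed scenario in which automated flags are compared against a small hand-labeled sample.

```python
import math


def knn_impute(rows, k=2):
    """Fill None entries with the mean of that column in the k nearest rows.

    Distance is Euclidean over the columns both rows have observed,
    averaged over the number of shared columns.
    """
    def distance(a, b):
        shared = [(x, y) for x, y in zip(a, b) if x is not None and y is not None]
        if not shared:
            return math.inf
        return math.sqrt(sum((x - y) ** 2 for x, y in shared) / len(shared))

    filled = [list(row) for row in rows]
    for i, row in enumerate(rows):
        for col, value in enumerate(row):
            if value is not None:
                continue
            # Only rows that actually observed this column can donate a value.
            candidates = [
                (distance(row, other), other[col])
                for j, other in enumerate(rows)
                if j != i and other[col] is not None
            ]
            candidates = [c for c in candidates if math.isfinite(c[0])]
            candidates.sort(key=lambda c: c[0])
            nearest = [v for _, v in candidates[:k]]
            if nearest:  # leave the gap if no usable neighbour exists
                filled[i][col] = sum(nearest) / len(nearest)
    return filled


def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical survey rows: [age, weekly listening hours]; None marks a missing answer.
survey = [[25, 3.0], [27, 4.0], [60, 1.0], [26, None]]
filled = knn_impute(survey, k=2)
print(filled[3])  # [26, 3.5]: the two listeners closest in age average 3.5 hours

# Hypothetical hand labels vs. automated flags (1 = flagged as problematic).
hand_labels = [1, 0, 1, 1, 0]
auto_flags = [1, 1, 1, 0, 0]
print(precision_recall_f1(hand_labels, auto_flags))
```

In practice a library implementation would usually be preferable to hand-rolled code; the sketch only makes the mechanics visible. Note that distances are computed from the original rows, so one imputed value never influences another.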

Extracting Meaningful Insights from Cleaned Data

Once the data was cleaned and preprocessed, we moved to the exciting phase of extracting meaningful insights. This stage involved applying various analytical techniques to uncover patterns and relationships within the data. This is where the true value of mining meaning from messy data becomes apparent.

- Topic Modeling: We used Latent Dirichlet Allocation (LDA) for topic modeling to identify recurring themes and discussions in the podcast transcripts and social media interactions. This helped us understand the core topics resonating with the listeners.

- Sentiment Analysis: Sentiment analysis provided a quantitative measure of listener opinions and emotional responses towards different aspects of the podcast, identifying trends and pinpointing areas for improvement.

- Correlation Analysis: Correlation analysis helped us identify relationships between different data points, for example, correlating listener demographics with sentiment towards specific topics.

- Specific Insights: Our analysis revealed that humorous content tied to the podcast's theme generated the highest positive sentiment.

- Visualizations: We presented our findings using various visualizations including bar charts, word clouds, and network graphs, making complex data easily interpretable.

- Impact of Findings: These insights are already informing podcast strategy, helping to tailor content to resonate more effectively with the target audience.
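To make the sentiment and correlation steps above concrete, here is a small sketch that scores comments with a toy word list and then correlates the scores with listener age. Everything in it is an assumption for illustration: the `LEXICON` contents, the function names `sentiment_score` and `pearson`, and the sample listeners are hypothetical, and a word list this simple stands in for whatever sentiment model the project actually used.

```python
import math
import re

# Hypothetical toy lexicon; a real analysis would rely on a trained model
# or an established sentiment lexicon.
LEXICON = {
    "love": 1, "great": 1, "funny": 1, "hilarious": 1,
    "boring": -1, "gross": -1, "hate": -1,
}


def sentiment_score(text):
    """Return the mean polarity of known words, in [-1, 1]; 0.0 if none match."""
    tokens = re.findall(r"[a-z']+", text.lower())
    hits = [LEXICON[t] for t in tokens if t in LEXICON]
    return sum(hits) / len(hits) if hits else 0.0


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical (age, comment) pairs joined from survey and social media data.
listeners = [
    (22, "Hilarious episode, love it"),
    (35, "Funny but a bit gross"),
    (48, "Boring and gross"),
    (61, "I hate this, so boring"),
]
ages = [age for age, _ in listeners]
scores = [sentiment_score(comment) for _, comment in listeners]
print(scores)  # [1.0, 0.0, -1.0, -1.0]
print(pearson(ages, scores))  # strongly negative for this made-up sample
```

A coefficient near -1 or 1 on four invented rows says nothing about real listeners; with real data the sample size and possible confounders would need to be considered before drawing conclusions.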

Overcoming Challenges and Future Directions

The "Poop" podcast project wasn't without its challenges. Overcoming these hurdles is an integral part of mining meaning from messy data effectively.

- Challenges Encountered: Computational limitations posed a significant hurdle while processing the large dataset. Ethical considerations regarding data privacy and potential biases in the data also needed careful attention. Limitations of our AI techniques, such as imperfect sentiment analysis of informal language, required careful interpretation of the results.

- Future Research Directions: Future work will involve exploring advanced AI techniques like deep learning and transformer models for more accurate sentiment analysis and topic modeling. We also plan to integrate other data sources, such as additional listener feedback surveys, for a more comprehensive understanding. Addressing ethical considerations, such as anonymization and bias mitigation, will remain a priority.

- Specific Challenges and Solutions: We addressed computational limitations by employing cloud computing resources and optimizing our algorithms. We mitigated bias through careful data selection and validation processes.

- Suggestions for Future Improvement: Incorporating contextual information from the podcast itself into our analysis might improve the accuracy of our sentiment analysis. Exploring alternative imputation techniques for missing data is another area for potential refinement.

Conclusion: Unlocking the Power of Messy Data

The "Poop" podcast project successfully demonstrated the power of AI in mining meaning from messy data. By employing robust data cleaning and preprocessing techniques, coupled with insightful analytical methods, we extracted valuable insights into listener engagement and preferences. This project highlights the importance of meticulous data preparation for accurate analysis. These techniques are not limited to podcast analysis; they have broad applications across various domains, from market research to healthcare, and beyond.

The ability to effectively mine meaning from messy data is crucial in today's data-rich world. We encourage you to explore similar projects and utilize AI techniques to unlock the hidden potential within your own datasets. Numerous resources are available online, and further reading into NLP, machine learning, and data cleaning practices will empower you to extract meaningful insights from even the most chaotic data.

Featured Posts

-

Investigation Reveals Millions Stolen Through Compromised Office365 Accounts

Apr 24, 2025

Investigation Reveals Millions Stolen Through Compromised Office365 Accounts

Apr 24, 2025 -

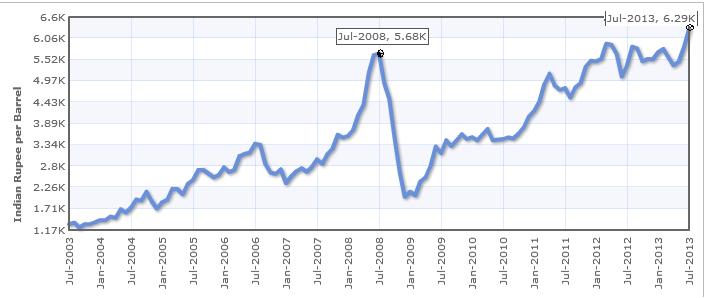

Crude Oil Market Report Prices And Analysis April 23 2024

Apr 24, 2025

Crude Oil Market Report Prices And Analysis April 23 2024

Apr 24, 2025 -

Us Tariffs Drive Chinas Lpg Imports Towards The Middle East

Apr 24, 2025

Us Tariffs Drive Chinas Lpg Imports Towards The Middle East

Apr 24, 2025 -

Facebooks Trajectory Zuckerbergs Leadership In A Changing Political Landscape

Apr 24, 2025

Facebooks Trajectory Zuckerbergs Leadership In A Changing Political Landscape

Apr 24, 2025 -

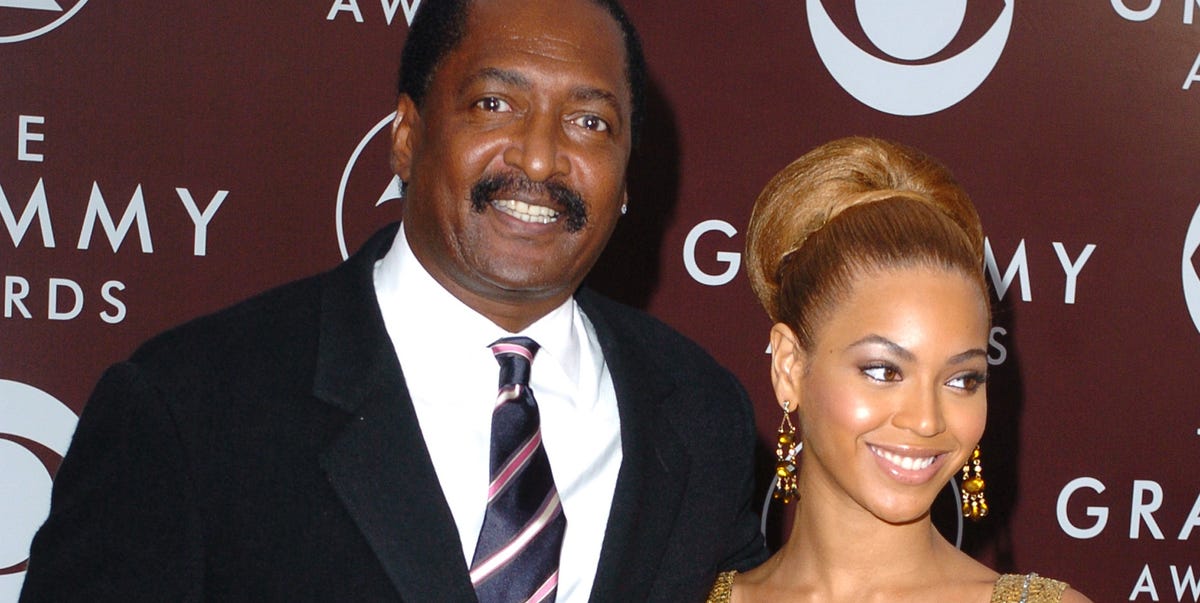

Tina Knowles Breast Cancer Diagnosis The Importance Of Mammograms

Apr 24, 2025

Tina Knowles Breast Cancer Diagnosis The Importance Of Mammograms

Apr 24, 2025