OpenAI Streamlines Voice Assistant Creation At 2024 Developer Conference

The 2024 OpenAI Developer Conference unveiled tools and technologies designed to simplify the creation of voice assistants. This article covers the key announcements and shows how OpenAI is making advanced voice technology more accessible to developers of all skill levels, regardless of their prior experience with AI or natural language processing (NLP). The aim is to replace complex, time-consuming voice assistant development with a faster path to new and accessible voice experiences.

Simplified Development with Pre-trained Models

The cornerstone of OpenAI's streamlined approach is the introduction of powerful, pre-trained models for voice assistant development. These models drastically reduce the time, effort, and cost associated with building sophisticated voice interfaces.

Reduced Development Time and Costs

Pre-trained models eliminate the need for extensive training from scratch. This translates to significant reductions in development time and resources.

- Examples of pre-trained models: OpenAI showcased several pre-trained models at the conference, including models optimized for different tasks like speech-to-text, intent recognition, and dialogue management.

- Time Savings: Developers reported an average 80% reduction in development time with these pre-trained models compared to traditional methods, so projects that previously took months can now be completed in weeks.

- Cost Savings: The reduced need for large, manually annotated datasets drastically cuts down on data annotation costs, a major expense in traditional voice assistant development.

Improved Accuracy and Performance

Built on OpenAI's cutting-edge NLP research, these pre-trained models deliver significantly improved accuracy and performance compared to previous generations of voice assistant technology.

- Speech Recognition: Enhanced accuracy in transcribing speech, even in noisy environments or with diverse accents.

- Natural Language Understanding: Improved ability to understand the nuances of human language, leading to more natural and intuitive interactions.

- Intent Recognition: More reliable identification of user intent, allowing for more accurate and appropriate responses.

- Benchmarks: OpenAI shared impressive benchmarks demonstrating significant improvements in accuracy across various metrics compared to previous state-of-the-art models.
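The article does not say which metrics the benchmarks used. A common measure of speech recognition accuracy is word error rate (WER): the number of word-level substitutions, deletions, and insertions needed to turn the recognized text into the reference transcript, divided by the number of reference words. The sketch below is a minimal illustration of that metric, not OpenAI's evaluation code; the function name and the sample transcripts are assumptions made for this example.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words.

    Illustrative helper (hypothetical name); lower values mean better transcription.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # prev[j] is the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# Made-up transcripts: one dropped word and one wrong word out of five.
print(word_error_rate("turn on the kitchen lights", "turn on kitchen light"))
```

Comparing two models on the same set of reference transcripts with a metric like this is one way to check an accuracy claim on your own data.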

Enhanced Customization and Personalization Options

While pre-trained models offer a significant head start, OpenAI also provides robust tools for customization and personalization, allowing developers to tailor voice assistants to specific needs and user preferences.

Fine-tuning for Specific Use Cases

Developers can easily fine-tune the pre-trained models to adapt them to specific applications and industries.

- Custom Vocabularies: Easily add industry-specific terminology or jargon to enhance the accuracy of speech recognition.

- Training on Specific Datasets: Fine-tune models using custom datasets to optimize performance for specific tasks and contexts.

- Easy-to-Use Interfaces: OpenAI provides intuitive tools and interfaces to streamline the fine-tuning process, making it accessible to developers with varying levels of expertise.
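The article does not describe how custom vocabularies are configured, so the following is only a local illustration of the idea, not OpenAI's fine-tuning mechanism. It post-corrects a transcript by snapping near-miss words to a list of domain terms, using fuzzy matching from Python's standard `difflib` module. The function name, the cutoff value, and the sample terms are assumptions.

```python
import difflib


def apply_custom_vocabulary(transcript, vocabulary, cutoff=0.8):
    """Replace words that closely resemble a domain term with that term.

    Hypothetical post-processing step; it splits on whitespace only,
    so punctuation attached to a word is not handled.
    """
    # Map lowercase forms back to the preferred spelling of each term.
    lookup = {term.lower(): term for term in vocabulary}
    corrected = []
    for word in transcript.split():
        matches = difflib.get_close_matches(
            word.lower(), list(lookup), n=1, cutoff=cutoff
        )
        corrected.append(lookup[matches[0]] if matches else word)
    return " ".join(corrected)


# Made-up example: a misrecognized drug name is corrected.
print(apply_custom_vocabulary(
    "patient needs metformn refill", ["metformin", "lisinopril"]
))
```

A high cutoff keeps ordinary words from being rewritten by accident; lowering it catches more misrecognitions at the cost of more false corrections.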

Personalized User Experiences

The new tools empower developers to create highly personalized voice assistant experiences.

- Adaptive Preferences: Voice assistants can learn and adapt to user preferences over time, providing a more tailored and intuitive experience.

- Habit Learning: By analyzing user interactions, voice assistants can anticipate user needs and proactively offer assistance.

- Proactive Assistance: Anticipating needs in this way makes interactions more helpful, which increases user satisfaction and engagement.
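One simple way to picture preference adaptation is a counter that records what a user chooses and offers a suggestion only after a choice has been seen often enough. The sketch below is a toy model of that idea; the class, its methods, and the threshold are hypothetical and do not come from any OpenAI tool.

```python
from collections import Counter, defaultdict
from typing import Optional


class PreferenceTracker:
    """Toy model of learning user preferences from repeated choices."""

    def __init__(self):
        # One counter of observed values per preference slot, e.g. "coffee".
        self._counts = defaultdict(Counter)

    def record(self, slot: str, value: str) -> None:
        """Store one observed choice for the given slot."""
        self._counts[slot][value] += 1

    def suggest(self, slot: str, min_observations: int = 2) -> Optional[str]:
        """Return the most frequent value once it has been seen often enough."""
        counts = self._counts.get(slot)
        if not counts:
            return None
        value, seen = counts.most_common(1)[0]
        return value if seen >= min_observations else None


tracker = PreferenceTracker()
tracker.record("coffee", "espresso")
tracker.record("coffee", "espresso")
tracker.record("coffee", "latte")
print(tracker.suggest("coffee"))
```

The minimum-observation threshold is the design choice that matters here: it stops the assistant from acting proactively on a single, possibly accidental, choice.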

Improved Accessibility through OpenAI's APIs

OpenAI's commitment to accessibility extends to its APIs, making voice assistant development easier than ever before.

Seamless Integration with Existing Platforms

OpenAI's APIs ensure seamless integration with a wide range of platforms and devices.

- Supported Platforms and Devices: The APIs support integration with popular platforms like iOS, Android, and various smart speaker ecosystems.

- Ease of API Usage: OpenAI provides comprehensive documentation and support to simplify the API integration process.

- Modular Design: The API's modular design allows developers to select only the components needed for their specific application, streamlining the development process further.
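The modular idea can be sketched as a pipeline whose stages (speech-to-text, intent recognition, and dialogue management, the three tasks named earlier) are interchangeable functions. Everything below is a hypothetical stand-in written for illustration. None of the names correspond to real OpenAI API calls, and the stub stages would be replaced by calls to whichever services an application actually uses.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    """Three pluggable stages; swap any one without touching the others."""
    transcribe: Callable[[bytes], str]
    recognize_intent: Callable[[str], str]
    respond: Callable[[str, str], str]

    def handle(self, audio: bytes) -> str:
        text = self.transcribe(audio)
        intent = self.recognize_intent(text)
        return self.respond(intent, text)


def fake_transcribe(audio: bytes) -> str:
    # Stand-in for a speech-to-text service: treats the bytes as UTF-8 text.
    return audio.decode("utf-8")


def keyword_intent(text: str) -> str:
    # Stand-in for an intent model: simple keyword matching.
    lowered = text.lower()
    if "weather" in lowered:
        return "get_weather"
    if "timer" in lowered:
        return "set_timer"
    return "unknown"


def template_respond(intent: str, text: str) -> str:
    # Stand-in for dialogue management: one canned reply per intent.
    replies = {
        "get_weather": "Looking up the forecast.",
        "set_timer": "Starting a timer.",
    }
    return replies.get(intent, "Sorry, I did not understand that.")


pipeline = VoicePipeline(fake_transcribe, keyword_intent, template_respond)
print(pipeline.handle(b"What is the weather today?"))
```

Because each stage is just a callable with a fixed signature, an application that only needs transcription and intent detection can supply a trivial `respond` function and ignore the rest.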

Support for Multiple Languages and Dialects

OpenAI's commitment to global accessibility is reflected in the support for multiple languages and dialects.

- Supported Languages: The pre-trained models and APIs support a growing list of languages, making voice assistant development accessible to a global audience.

- Dialect Support: OpenAI is actively working on enhancing support for diverse dialects, ensuring inclusivity and broader reach.

- Global Reach: This multilingual support enables developers to build voice assistants that cater to diverse populations worldwide.

Conclusion

OpenAI's advancements, as showcased at the 2024 Developer Conference, have streamlined the process of voice assistant creation. Pre-trained models, enhanced customization options, and accessible APIs are empowering developers to build sophisticated and personalized voice assistants with greater efficiency and lower costs. The barrier to entry for creating cutting-edge voice technology has been significantly lowered.

Call to Action: Ready to revolutionize your next project with streamlined voice assistant development? Explore OpenAI's latest tools and resources today and experience the future of voice technology! Learn more about creating cutting-edge voice assistants with OpenAI and unlock the potential of this rapidly evolving field.

Featured Posts

-

Execs Office365 Accounts Breached Millions Made Feds Say

Apr 26, 2025

Execs Office365 Accounts Breached Millions Made Feds Say

Apr 26, 2025 -

Trumps Stance On Banning Congressional Stock Trading Key Takeaways From Time Interview

Apr 26, 2025

Trumps Stance On Banning Congressional Stock Trading Key Takeaways From Time Interview

Apr 26, 2025 -

The Conservative View How Harvard Can Address Its Challenges

Apr 26, 2025

The Conservative View How Harvard Can Address Its Challenges

Apr 26, 2025 -

Orlandos Hottest New Restaurants 7 To Try In 2025 Beyond Disney

Apr 26, 2025

Orlandos Hottest New Restaurants 7 To Try In 2025 Beyond Disney

Apr 26, 2025 -

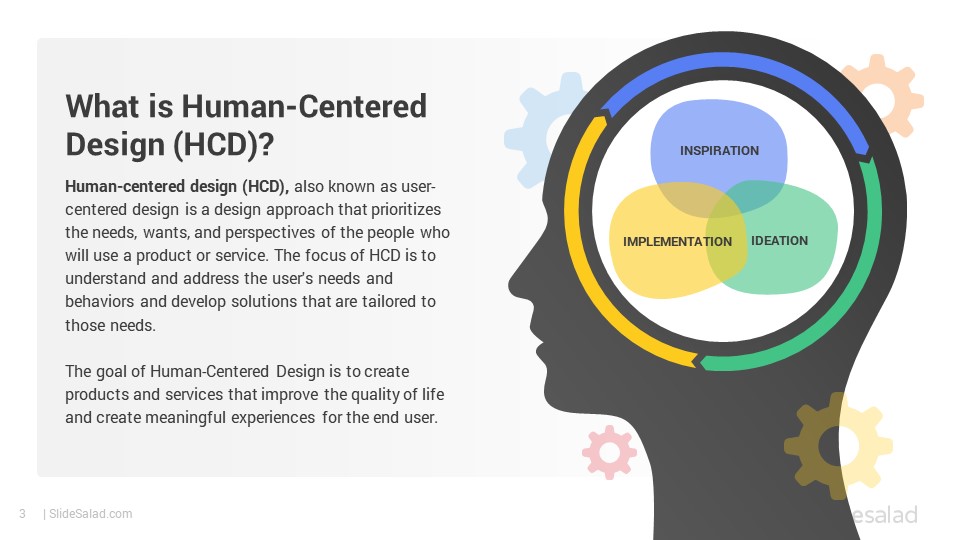

Human Centered Ai An Interview With Microsofts Design Chief

Apr 26, 2025

Human Centered Ai An Interview With Microsofts Design Chief

Apr 26, 2025

Latest Posts

-

Crumbach Steps Down Impact On Bsw And The German Coalition

Apr 27, 2025

Crumbach Steps Down Impact On Bsw And The German Coalition

Apr 27, 2025 -

Anti Vaccine Advocate Review Of Autism Vaccine Connection Sparks Outrage Nbc 10 Philadelphia Reports

Apr 27, 2025

Anti Vaccine Advocate Review Of Autism Vaccine Connection Sparks Outrage Nbc 10 Philadelphia Reports

Apr 27, 2025 -

Hhss Controversial Choice Anti Vaccine Activist To Examine Debunked Autism Vaccine Claims

Apr 27, 2025

Hhss Controversial Choice Anti Vaccine Activist To Examine Debunked Autism Vaccine Claims

Apr 27, 2025 -

Anti Vaccine Activists Role In Hhs Autism Vaccine Review Raises Concerns

Apr 27, 2025

Anti Vaccine Activists Role In Hhs Autism Vaccine Review Raises Concerns

Apr 27, 2025 -

Anti Vaccine Activist Review Of Autism Vaccine Link Sparks Outrage Nbc Chicago Sources

Apr 27, 2025

Anti Vaccine Activist Review Of Autism Vaccine Link Sparks Outrage Nbc Chicago Sources

Apr 27, 2025